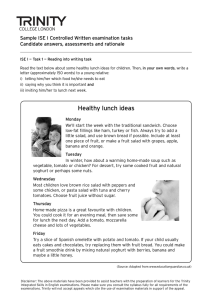

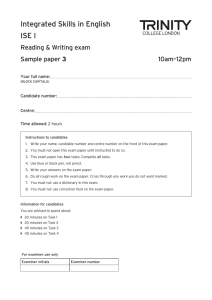

CEFR Calibration Report - Trinity College London
