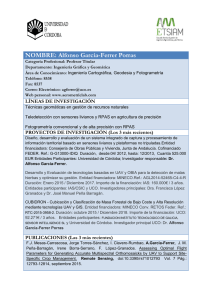

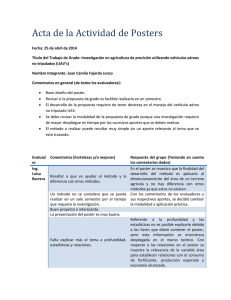

Monitorización 3D de cultivos y cartografía de malas hierbas mediante vehículos aéreos no tripulados para un uso sostenible de fitosanitarios

Anuncio