Industrial Requirements on Evolution of an Embedded System Architecture 2013

Anuncio

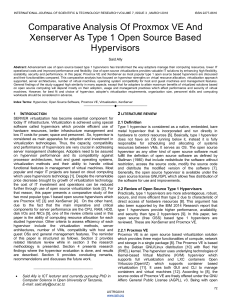

2013 IEEE 37th Annual Computer Software and Applications Conference Workshops

Industrial Requirements on Evolution of an Embedded System Architecture

Daniel Hallmans (1, 2), Thomas Nolte (2), Stig Larsson (2, 3)
(1) ABB AB, Ludvika, Sweden
(2) MRTC/Mälardalen University, Västerås, Sweden
(3) Effective Change AB, Västerås, Sweden

978-0-7695-4987-3/13 $26.00 © 2013 IEEE, DOI 10.1109/COMPSACW.2013.129

Abstract—Management of obsolete software and hardware components, along with the addition of new components and functionality in existing embedded systems, can be a very difficult or almost impossible task. If the system is at the same time required to be in operation for more than 30 years, then evolving the embedded system architecture over such a long period becomes an even more challenging problem. Many industries, for example the process and power transmission industries, face this type of challenge on a daily basis. The contribution of the research presented in this paper is a set of questions and answers on how an old control system can be replaced and updated, with examples from process and power transmission applications. We also look at different methods that can be used during the development of new systems so that they provide natural support for evolvability, targeting future control system applications.

Keywords—Evolution, obsolete components, embedded systems, life cycle, virtualization, architecture

I. INTRODUCTION

The process and power industries are increasingly dependent on computer control to meet a growing demand for efficiency and effectiveness in their product portfolios. This drives the evolution of their Embedded System Architectures (ESAs), which need increasing computing power and networking bandwidth to cope with larger and more advanced systems. Together with more stringent requirements on safety and security, faster time to market, and powerful engineering tools, this increases the need to understand the effects of potential changes to the system architecture.

In this context it is important to investigate different technologies from an evolvability point of view, i.e., the extent to which a particular technology can cope with potentially increasing computing and networking demands, including technologies that have been developed outside the traditional industrial field. A new technology may evolve the existing solutions, or it may cause a change of paradigm that alters the full architecture of these solutions. To understand the impact of a particular new technology, it is important to investigate it thoroughly and to understand what opportunities it could give for future developments. In addition, it is also important to be able to maintain and support the evolution of existing (legacy) control systems that are already in use in industrial systems today.

Figure 1: Simplified picture of a 30-40 year life cycle for a product, highlighting updates of functions and hardware

A typical case in many industries is that a customer requires the system to be in operation for at least 30-40 years, as depicted in Figure 1. During this time the control system, or parts of it, typically has to be replaced and/or updated. The change of the control equipment can be due to, for example, changes in requirements that are impossible to implement in the original control system, and/or hardware components that can no longer be supplied as spare parts. One good example comes from the avionics industry, where the hardware of the onboard computers has to be replaced as it becomes obsolete [1].

In this paper we present our work on investigating the evolvability of ESAs in an industrial environment where it is increasingly important to be able to evolve systems and products in different ways. We describe two types of evolvability requirements. The first is the ability of an organization to accommodate technological changes and evolve the architecture of a product or system. The second is the capability to ensure that existing systems and products in operation can utilize new technology while maintaining compatibility with the installed equipment. Our hypothesis is that it is possible to make an initial design of a control system that minimizes the cost of a potential evolution if a number of parameters are considered during the design phase. If these parameters can be identified and described, then it will also be possible to design a method that expresses to what degree a system, product, architecture, or part of a system is evolvable. However, this will most likely require that we can foresee what types of changes will occur in a future evolution of the system.

The outline of this paper is as follows: Section II describes the background to the problem of maintaining legacy systems over a long period of time. Section III describes related work in the area of life cycle analysis and management of obsolete components in embedded systems. In Section IV we discuss different possible solutions, and in Section V we summarize and discuss our work along with possible future work.

II. PROBLEM DEFINITION

In a large number of industries the life cycle of the products is expected to be relatively long. Typical power plants, energy transmission systems, avionics systems, etc. have a lifetime of more than 30 years. This requires a control system that can support the installation during that entire time. One way to handle this is to support the same implementation throughout the lifetime of the installation. An obvious problem with this approach is that the manufacturer then has to be able to supply the customer with spare parts during the entire lifetime of the system. Any new requirements resulting from an evolved system, e.g., new types of communication interfaces or new security requirements, must then be realized using the same original hardware and software components.

Another solution is to upgrade the whole system, or parts of it, at given times with, e.g., new hardware, new functions, new communication technologies, tools, operating systems, etc. One example is a system designed 10 or 15 years ago that runs distributed on different types of hardware nodes with communication buses in between. Today the same system could possibly run entirely on a more powerful single- or multi-core CPU. The previously internal buses between the hardware modules could be removed and replaced by corresponding communication paths in software; only the external communication interface would be kept the same. This type of update is only possible if there are no system reasons for the previously distributed design, e.g., redundancy, physical separation of control and protection functionality, or safety functions. In those cases the different subsystems cannot be combined on one shared hardware device but have to remain on separate hardware modules. Another example is a system with requirements on frequent security patches, where the manufacturer of the operating system no longer maintains the generation of the software in use. In this case a new operating system is needed, or the system has to be encapsulated in another, secure system that can handle the security requirements.

In the following, different areas that must be considered when a system or a product evolves are highlighted and described. The selected areas (obsolete components, functional/non-functional requirements, and testing/verification) are central to the evolution of the ESAs in focus. The discussion covers both types of evolution, i.e., the evolution of a current solution, and the adaptation of existing equipment to a new solution.

A. Obsolete components

In many cases when designing a control system it is obvious that sooner or later one will run into problems with obsolete components. One example, mentioned above, is from the avionics industry, where the hardware of the onboard computers has to be replaced [1]. Changing the hardware also affects the software running on the product and the corresponding tools used by developers and users. If, for example, a DSP on a board has to be replaced with a newer generation due to component obsolescence, then the new DSP can probably not run the same code as the previous one. The old code would then have to be changed so that it can run on the new DSP, and tested so that it behaves correctly. If the code is not stored directly on the device but is instead loaded by the user, the change could also, from a user perspective, require new tools and instructions for loading the code. If the new board is used as a spare part in a system that also includes 100 boards of the older generation, then the user needs different instructions for the two types of boards, for example for loading, setting up, or debugging.

B. Functional/non-functional requirements

Legacy functions can stop working when components in the system are changed. For example, software that worked in the original system can stop working when it is recompiled for new hardware. The reason may, for example, be a new memory interface or new library functions.

Non-functional requirements, such as timing, can change when the system is updated with new and faster hardware. For example, a DSP emulator may be 100% code compatible but differ in performance, so that results are delivered faster or slower than before. The new hardware may be optimized for good average throughput, whereas the software function would actually benefit more from low jitter, i.e., bounded best- and worst-case execution times. For example, caches on a multi-core chip may give good average-case execution times, whereas the worst-case execution time is unknown or unbounded. The question is how this potentially new non-functional behavior of the hardware would affect the performance of the system. The non-functional requirements can be very hard to specify when working with a combination of the new and the updated design. Below we highlight non-functional requirements related to performance, safety, and cyber security.

1) Performance

Changing the type of hardware can also change the performance of the system. If an old DSP is replaced by a new one, or the new configuration runs on one dedicated core in a multi-core system, it is not obvious that the performance will increase compared to the original design. New hardware typically gives higher performance, but the overall system performance depends on the whole system: communication bandwidth, clock frequency, cache utilization, etc. On a multi-core processor, the cache plays an important role in the performance of the software running on it. Changing the performance of one system can, and will, also affect the surrounding systems and tools.

2) Safety

For safety-critical systems that are certified on and for one type of hardware together with specific software, it is even more complicated to make updates with respect to, e.g., new hardware components that replace obsolete parts. [2], from 1984, gives an early explanation of how to think about safety features in software systems, and [3] gives an overview of designing a safety-critical system. There are several examples of ongoing research on how to update only a part of a system; however, due to space limitations this is not investigated further in our work.

3) Cyber Security

Customer requirements regarding cyber security have changed considerably. Previously the control system was often seen as an isolated island without any connection to the outside world. This has changed during the last couple of years, and more and more requirements state that the implementation should be secure. One reason for the change was the discovery of Stuxnet, a computer worm discovered in 2010 that targeted Siemens industrial control systems. Different variants of the Stuxnet worm infected five Iranian organizations, the probable target being uranium enrichment infrastructure [4], [5]. Many established assumptions about control system security had to be revised after the Stuxnet incident, e.g., that a control system is free from cyber attacks as long as it is not directly connected to the Internet, and that technical details about a control system are known only to the experts and insiders of that particular system [6]. Reusing the original software code in a newer system could accidentally create a cyber security problem. Developers then have to ask themselves several questions: Is the old operating system secure from a cyber security perspective? Can it handle patch management? How is user management handled? Can whitelisting be a method to allow the old code to run in a secure way?

C. Testing/Verification

When an older system is updated with, for example, new hardware and/or new functions such as security functions, the system still has to perform the previous functions without any loss in performance. In this case the verification of the new system plays an important role. In some cases the requirements for the original system may not be well known; instead, the functions have to be verified on a more general level, so that the new functionality does not affect the older implementation. The application running in the legacy system may also consist of a large number of variants, which makes it very hard, or even practically impossible, to test all of them. All tools that interact with the product also have to be tested, or more specifically the interfaces used by the tools. If the interfaces were not well defined from the start, for example the allowed communication speed, this could be complicated or even impossible to verify. If some of the tools or software are developed by third-party developers, it gets even more complicated.

III. RELATED WORK

Research on life cycle management and management of obsolete components for embedded systems has been ongoing for quite some time. One example of a literature review of obsolescence management is presented in [7]. The paper discusses obsolescence management plans that take into account not only electronics but also other aspects such as mechanical components, software, materials, skills, tooling, and test equipment. Another paper, focusing on commercially available solutions to replace hardware used in a production line, is [8]. A similar replacement strategy is presented for the avionics industry in [1] and for the nuclear industry in [9]. All these papers address obsolete components and how to replace them with different methods, for example emulation of firmware, replacement of device drivers, and virtual machines that run the legacy code. Other papers present approaches based on new technology trends, for example [10], targeting migration of real-time legacy code to multi-core platforms, or [11], which presents results from testing virtualization platforms to improve the durability and portability of industrial applications.

Management of obsolete components along with life cycle analysis is important not only in the embedded industry but also in a number of other areas, such as information systems in larger enterprises. [12] includes a survey of modernization approaches, and [13] shows a method to package legacy code into Web services. Several of these techniques could also be reused for embedded systems that are required to have long lifetimes.

IV. SOLUTIONS

The problem can be approached in two ways: one is to update or maintain an old control system that has, e.g., become obsolete; the other is to make decisions, when designing a new control system, that from the beginning avoid the same type of evolvability problems for the developed product in the future.

A. Solution 1: Updating an old system

One example of an old system is an embedded system that was delivered to a customer 15 years ago, built from components that were state of the art at that time, e.g., an industrial PC based on PCI 1.0 with an Intel Pentium Pro processor and a number of add-in boards communicating with the processor through the PCI bus. The add-in boards could be equipped with DSP/FPGA and/or communication interfaces, as depicted in Figure 2.
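Section II noted that when a distributed design is consolidated onto one multi-core CPU, the internal buses between modules can be replaced by communication paths in software. For a system like the one just described, that would mean the block transfers between the host application and the DSP add-in board over PCI become messages on an in-memory queue. The Python sketch below illustrates only that idea, under stated assumptions: `dsp_process`, `dsp_core`, and `run_consolidated` are hypothetical names, the "DSP application" is a placeholder gain stage, and Python threads merely stand in for the two cores. A real port would more likely use a shared-memory single-producer/single-consumer ring between cores, which this sketch does not attempt to model.

```python
import queue
import threading
from typing import List, Optional, Sequence

Block = List[float]


def dsp_process(block: Sequence[float], gain: float = 2.0) -> Block:
    # Hypothetical placeholder for the legacy DSP algorithm.
    return [gain * x for x in block]


def dsp_core(requests: "queue.Queue[Optional[Block]]",
             results: "queue.Queue[Optional[Block]]") -> None:
    # Consumer: takes over the role of the former DSP add-in board.
    while True:
        block = requests.get()
        if block is None:          # sentinel: the host has no more data
            results.put(None)
            return
        results.put(dsp_process(block))


def run_consolidated(blocks: Sequence[Block], depth: int = 4) -> List[Block]:
    # The request queue is bounded, loosely mimicking the finite buffering of
    # the old bus interface; the result queue is unbounded, so the consumer
    # never blocks while the producer is still filling requests (no deadlock).
    requests: "queue.Queue[Optional[Block]]" = queue.Queue(maxsize=depth)
    results: "queue.Queue[Optional[Block]]" = queue.Queue()
    worker = threading.Thread(target=dsp_core, args=(requests, results))
    worker.start()
    # Producer: the former host application. Where it once wrote a block to
    # the board over PCI, it now puts the block on the queue.
    for block in blocks:
        requests.put(list(block))
    requests.put(None)
    out: List[Block] = []
    while (item := results.get()) is not None:
        out.append(item)
    worker.join()
    return out
```

For example, `run_consolidated([[1.0, 2.0], [3.0]])` returns `[[2.0, 4.0], [6.0]]`. Because there is a single consumer and FIFO queues, block order is preserved, which matches what the host would have seen from a bus that processes transfers in order. Whether such a consolidation is allowed at all still depends on the system reasons discussed in Section II, such as redundancy or physical separation.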
Figure 2: Simplified picture of a legacy system consisting of a network interface card, a CPU, and an add-in PCI board equipped with DSP/FPGA with an input/output interface

Updating the system would then require replacing almost all components and keeping only the interfaces to the surrounding environment. Designing new hardware is probably straightforward in this case, since the performance of CPUs today is much higher than it was 15 years ago. The same reasoning is valid for communication: 33 MHz PCI [14] gave a maximum throughput of 133 MB/s, whereas PCI Express [15], which is common today, runs at 250 MB/s on a single serial lane (first-generation PCI Express, per direction). If this is not sufficient, a number of lanes can be used in parallel. A similar argument holds for FPGAs. A typical FPGA 10-15 years ago was from the XC4000 family provided by Xilinx; the largest model at that time, the XC4085XL, was equipped with up to 7448 logic cells and 100 kbit of RAM [16]. A low-cost Spartan-6 FPGA today has 150k logic cells and 4.8 Mbit of RAM, so almost 20 XC4000 FPGAs could fit in one Spartan-6 FPGA alone. If this is not sufficient, one could use an FPGA from the high-performance range, e.g., a Virtex-7 with 2000k logic cells and 64 Mbit of RAM. The new generations of FPGAs also typically have much more functionality available directly in hardware, e.g., support for PCI Express, whereas the XC4000 family needed external hardware to handle PCI communication. Figure 3 shows an example of how the upgrade could look, with a new FPGA and a multi-core CPU running both the CPU and the DSP applications from the legacy system. The conclusion is that a hardware update with equal or better performance is no problem; the problem, or challenge, is instead to get the legacy software to run in the new system.
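Getting the legacy software to run is only part of the work; as discussed under Testing/Verification in Section II, the new system must still perform the previous functions. One common way to check functional equivalence is back-to-back testing: feed identical inputs to the legacy implementation, used as the reference, and to the ported one, and compare the outputs. The sketch below assumes two hypothetical implementations of the same 3-tap moving-average filter, `legacy_filter` and `ported_filter`, and a tolerance-based comparison. Whether a tolerance is acceptable, or bit-exact results are required, is a project decision this sketch does not make, and it checks functional behavior only, not the timing aspects discussed in Section II.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Filter = Callable[[Sequence[float]], List[float]]


def legacy_filter(x: Sequence[float]) -> List[float]:
    # Hypothetical reference: 3-tap moving average, missing history treated as 0.
    return [sum(x[max(0, i - 2): i + 1]) / 3.0 for i in range(len(x))]


def ported_filter(x: Sequence[float]) -> List[float]:
    # Hypothetical port: the same filter rewritten with a running sum, as might
    # be done for a new target. Floating-point rounding can differ slightly.
    out, acc = [], 0.0
    for i, v in enumerate(x):
        acc += v
        if i >= 3:
            acc -= x[i - 3]
        out.append(acc / 3.0)
    return out


def back_to_back(reference: Filter, candidate: Filter,
                 vectors: Sequence[Sequence[float]],
                 rel_tol: float = 1e-9,
                 abs_tol: float = 1e-12) -> List[Tuple[int, int]]:
    """Return (vector index, first differing sample index) per mismatch.
    A sample index of -1 means the output lengths differ."""
    mismatches: List[Tuple[int, int]] = []
    for n, vec in enumerate(vectors):
        ref, new = reference(vec), candidate(vec)
        if len(ref) != len(new):
            mismatches.append((n, -1))
            continue
        for k, (a, b) in enumerate(zip(ref, new)):
            if not math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol):
                mismatches.append((n, k))
                break
    return mismatches


def random_vectors(count: int, max_len: int, seed: int = 1) -> List[List[float]]:
    # Seeded, so that a failing run can be reproduced exactly.
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(rng.randint(0, max_len))]
            for _ in range(count)]
```

A call such as `back_to_back(legacy_filter, ported_filter, random_vectors(200, 64))` reports an empty list when no mismatches are found. Random vectors complement, but do not replace, recorded field data and the application variants mentioned in Section II, which may be the only practical description of the original behavior when its requirements are not well known.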
If one gets the software to run, the next problem is to test it so that it performs in the same way as in the old original system.

Figure 3: Moving from the legacy system (depicted in Figure 2) to a system based on a multi-core solution

Looking at the higher range of FPGAs equipped with hard CPUs, it would actually, to some extent, be possible to replace the whole original system with a single FPGA containing one or several hard CPU cores. The Zynq platform from Xilinx is one example, with a dual-core ARM Cortex-A9 processor running at 1 GHz on the same chip as the FPGA logic, as depicted in Figure 4.

Figure 4: Moving from the legacy system (depicted in Figure 2) to a system on a chip with similar functionality

B. Solution 2: Making a new design that is future proof

If one plans a new design that should last for more than a couple of years, one will sooner or later end up in the same discussion as in the replacement case above. The difference is that some of the problems can be prevented by making smart decisions when designing the system. Looking at a possible design, we see two major contributions to the opportunity for future evolution: one is a well-structured architecture that makes changes and replacement of functions easy, and the other is hardware-independent solutions, i.e., solutions that allow the system to run on any hardware.

1) Design 1: Architectural structure

"An introduction to software architecture" [17], from 1994, states that significant advances can be seen in understanding the role of architecture in the life-cycle process of a system or product that includes software. If systems designed 10, 20, or 30 years ago had taken the life cycle aspect more into account, from both a software and a hardware point of view, it would probably be easier today to upgrade and maintain some of the systems that we are using. A number of studies have looked at the possibilities of improving the evolvability of a system by using different architectural methods. Component-based software design [18] is one way forward that can solve some of the evolvability problems of an embedded system. In [19], Breivold et al. discuss software evolvability as a quality attribute that describes a software system's ability to easily accommodate future changes.

Architectural decisions are not only decisions on how the software should be written; they can also concern, e.g., i) what type of hardware is chosen, ii) whether a well-defined communication interface is used, and iii) whether there is performance left for updating the functionality of the software in the future.

2) Design 2: Hardware independence

A design that is completely hardware independent would probably be impossible to create in all cases, e.g., there could be specific requirements on types of I/O ports, and performance issues when applying a number of hardware-independent layers. One solution that has been discussed a lot in recent years is virtualization of hardware. The term "virtualization" [20], [21], [22] was introduced in the early 1960s to refer to a virtual environment. The virtual environment presents a simulated computer environment to the guest software, isolating it from the hardware. Typically, a number of guest software systems can run on one physical machine without knowing anything about each other.

The traditional use of virtualization has been by enterprise industries aiming to make better use of general-purpose multi-core processors, for example by running more than one virtual server on one piece of hardware to more fully utilize the hardware resources of the physical server. The same functionality could be used in an embedded system to run a number of different legacy systems on the same CPU or multi-core CPU while still having some kind of protection between the systems. A discussion of security and virtualization issues can be found in [22]. One typical thing that virtualization should be able to handle is that a buffer overflow in one system should not affect any of the other systems.

One of the major differences when using virtualization in many (though not all) embedded systems, compared to a more traditional server environment, is the requirement for hard real-time performance, a requirement that one typically would not find in an enterprise system. A discussion of such systems and related challenges can be found in [10] and [24].

A problem that one can run into, both with older legacy software and with new development, is that the virtualization environment does not support the hardware that one is using or planning to use. For a legacy system, it can for example be a device driver that is not supported on the new hardware. A solution can be to emulate the hardware component inside the operating system or in the virtualization environment, so that the old legacy code can be reused. An example can be found in [11], which discusses how to emulate a network driver interface used in a legacy nuclear plant installation.

Today there are a number of different alternatives for virtualization of x86 processors. It gets more complicated if one is trying to replace, e.g., a DSP. Typically the code for a DSP has been written to run on bare metal, without any operating system, and with a very close connection to the hardware. If the performance requirements allow, it could be interesting to test whether the old environment can be emulated and the emulated hardware run on, for example, an x86 processor instead. Another interesting alternative could be to use a more powerful computational device, such as the Graphics Processing Unit (GPU), for general-purpose computing. A high-performance GPU can today be equipped with over 3000 cores, all running at a clock speed of about 1 GHz (Nvidia GeForce GTX 690: 3072 cores, 1 GHz, 4 GB of memory [25]). The GPU is designed to solve parallel problems, not the typical problems found in a CPU/DSP. Even if we are only able to use some of the cores for running our CPU/DSP code, it may still give high performance, making it an interesting solution to apply. In [26] we have made a deeper investigation of using GPUs in the context of embedded systems.

Virtualization often suffers from performance penalties, both with respect to the resources required to run the hypervisor and with respect to reduced performance of the virtual machine compared to running natively on the physical machine. Depending on the hardware or the performance requirements of the application, this could make it impossible to virtualize such a system. In later hardware releases, e.g., Intel VT [23] and AMD-V, hardware support has been added to improve the performance of virtualization.

A solution for adding new functionality that does not have to be located directly in the legacy software, for example securing an Ethernet connection by introducing whitelisting, firewalls, user management, etc., could be to use a hypervisor, add another, secure operating system, and route all communication through the secure operating system before it reaches the legacy system.

V. SUMMARY, DISCUSSION AND FUTURE WORK

The management of obsolete components and the addition of new components and functionality in existing embedded software systems can be a hard, sometimes almost impossible, task. A number of industries, such as the process and power industries, nuclear power, and avionics, all face the same problems, as their systems are expected to be in operation for more than 30 years. At some point these systems have to be evolved, for several reasons. In this paper we have discussed two specific challenges:

1. Updating older systems that have been in operation for 10-15 years and now face obsolete hardware and software components, or new requirements that cannot be met with the old hardware and software. If we upgrade the system to newer hardware, newer software, and/or newer functions, the upgraded system has to be verified so that it still respects its original behavior, including known and implicit requirements.

2. Developing new systems that are evolvable, i.e., easy to update and upgrade in the future. In this case the architecture and the choice of hardware are important. One solution is to base the system design on a hypervisor from the start, to make it more hardware independent. As a further step towards independence from a specific hardware solution, it could be advisable to specify a number of interfaces between components that can be replaced in the future.

Our ongoing and future work concerns the evaluation of a number of techniques and technologies that make it possible to handle both of the problems discussed above. The key question is how to enable the upgrade of an existing, old embedded system. The next step in our research will be to look at a system with obsolete components along with new functions, for example new security features, that have to be added in parallel with the old functionality.
In the end, the system should be verified so that the changes do not interfere with its existing functions. To get a realistic understanding of the challenges and possible solutions when updating and replacing components in systems, we are currently investigating different technologies that involve both hardware and software components. As a first case we have looked at the possibility of using GPUs [26] in embedded systems. We will continue the GPU investigation with an evaluation of what types of functions can be executed on the GPU; in particular, we are looking at running high-performance DSP applications with support from the GPU cores. The next case will be to look at how to use virtualization to replace hardware in legacy systems, covering x86 and different DSP architectures. Based on the results from upgrading legacy systems, we aim to describe a method for how decisions should be taken today when designing new systems, so that they are more evolvable with respect to future changes in requirements and to the updating and/or replacing of hardware and software components.

ACKNOWLEDGMENT

The research presented in this paper is supported by The Knowledge Foundation (KKS) through ITS-EASY, an Industrial Research School in Embedded Software and Systems, affiliated with the School of Innovation, Design and Engineering (IDT) at Mälardalen University (MDH), Sweden.

REFERENCES

[1] J. Luke, J. W. Bittorie, W. J. Cannon, and D. G. Haldeman, "Replacement Strategy for Aging Avionics Computers," IEEE AES Systems Magazine, vol. 14, no. 3, pp. 7-11, 1999.
[2] N. G. Leveson, "Software Safety in Computer-Controlled Systems," Computer, vol. 17, no. 2, pp. 48-55, 1984.
[3] W. R. Dunn, "Designing Safety-Critical Computer Systems," Computer, vol. 36, no. 11, pp. 40-46, 2003.
[4] "Wikipedia, Stuxnet." [Online]. Available: http://en.wikipedia.org/wiki/Stuxnet. [Accessed: 01-May-2013].
[5] R. Langner, "Stuxnet: Dissecting a Cyberwarfare Weapon," IEEE Security & Privacy, vol. 9, no. 3, pp. 49-51, 2011.
[6] T. Miyachi, H. Narita, H. Yamada, and H. Furuta, "Myth and Reality on Control System Security Revealed by Stuxnet," in IEEE SICE Annual Conference (SICE'11), pp. 1537-1540, 2011.
[7] F. J. Romero Rojo, R. Roy, and E. Shehab, "Obsolescence Management for Long-life Contracts: State of the Art and Future Trends," The International Journal of Advanced Manufacturing Technology, vol. 49, no. 9-12, pp. 1235-1250, 2010.
[8] T. Sarfi, "COTS VXI hardware combats obsolescence and reduces cost," in IEEE AUTOTESTCON, pp. 177-183, 2002.
[9] A. Ribière, "Emulation of Obsolete Hardware in Open Source Virtualization Software," in IEEE Intl. Conf. on Industrial Informatics (INDIN'10), pp. 354-360, 2010.
[10] F. Nemati, J. Kraft, and T. Nolte, "Towards migrating legacy real-time systems to multi-core platforms," in IEEE Intl. Conf. on Emerging Technologies and Factory Automation (ETFA'08), pp. 717-720, 2008.
[11] A. Ribière, "Using virtualization to improve durability and portability of industrial applications," in IEEE Intl. Conf. on Industrial Informatics (INDIN'08), pp. 1545-1550, 2008.
[12] S. Comella-Dorda, K. Wallnau, R. C. Seacord, and J. Robert, "A Survey of Legacy System Modernization Approaches," Tech. Note CMU/SEI-2000-TN-003, 2000.
[13] Z. Zhang and H. Yang, "Incubating Services in Legacy Systems for Architectural Migration," in 11th Asia-Pacific Software Engineering Conference, pp. 196-203, 2004.
[14] "Wikipedia, PCI bus." [Online]. Available: http://en.wikipedia.org/wiki/Conventional_PCI. [Accessed: 01-May-2013].
[15] "Wikipedia, PCI-Express." [Online]. Available: http://en.wikipedia.org/wiki/PCI_Express. [Accessed: 01-May-2013].
[16] "Xilinx XC4000 data sheet." [Online]. Available: http://www.xilinx.com/support/documentation/data_sheets/4000.pdf. [Accessed: 01-May-2013].
[17] D. Garlan and M. Shaw, "An introduction to software architecture," Computer Science Department, Paper 724, http://repository.cmu.edu/compsic/724, 1994.
[18] F. Lüders, I. Crnkovic, and A. Sjögren, "Case Study: Componentization of an Industrial Control System," in 26th Annual Intl. Computer Software and Applications Conference (COMPSAC'02), pp. 67-74, 2002.
[19] H. P. Breivold, I. Crnkovic, and M. Larsson, "Software architecture evolution through evolvability analysis," Journal of Systems and Software, vol. 85, no. 11, pp. 2574-2592, 2012.
[20] "Wikipedia, Virtualization." [Online]. Available: http://en.wikipedia.org/wiki/Virtualization. [Accessed: 01-May-2013].
[21] S. Campbell and M. Jeronimo, "An Introduction to Virtualization," Intel, pp. 1-15, 2006.
[22] H. Douglas and C. Gehrmann, "Secure Virtualization and Multicore Platforms State-of-the-art report," SICS Technical Report, Swedish Institute of Computer Science, 2009.
[23] R. Uhlig, G. Neiger, D. Rodgers, A. L. Santoni, F. C. M. Martins, A. V. Anderson, S. M. Bennett, A. Kagi, F. H. Leung, and L. Smith, "Intel virtualization technology," Computer, vol. 38, no. 5, pp. 48-56, 2005.
[24] A. Aguiar and F. Hessel, "Embedded systems' virtualization: The next challenge?," in 21st IEEE Intl. Symp. on Rapid System Prototyping (RSP'10), 2010.
[25] "Hardware | GeForce." [Online]. Available: http://www.geforce.co.uk/hardware. [Accessed: 01-May-2013].
[26] D. Hallmans, M. Åsberg, and T. Nolte, "Towards using the Graphics Processing Unit (GPU) for embedded systems," in IEEE Intl. Conf. on Emerging Technologies & Factory Automation (ETFA'12), pp. 1-4, 2012.