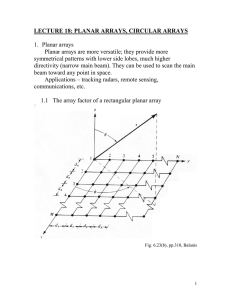

Optimum Array Processing (Detection, Estimation, and Modulation Theory, Part IV)
