
Dynamic Optimization Textbook: Elements & Applications


ELEMENTS OF DYNAMIC OPTIMIZATION

Alpha C. Chiang

Professor of Economics

The University of Connecticut

McGraw-Hill, Inc.

New York · St. Louis · San Francisco · Auckland · Bogota · Caracas · Lisbon · London · Madrid · Mexico · Milan · Montreal · New Delhi · Paris · San Juan · Singapore · Sydney · Tokyo · Toronto

ELEMENTS OF DYNAMIC OPTIMIZATION

International Editions 1992

Exclusive rights by McGraw-Hill Book Co-Singapore for manufacture and

export. This book cannot be re-exported from the country to which it is

consigned by McGraw-Hill.

Copyright © 1992 by McGraw-Hill, Inc. All rights reserved. Except as

permitted under the United States Copyright Act of 1976, no part of this

publication may be reproduced or distributed in any form or by any means,

or stored in a data base or retrieval system, without the prior written

permission of the publisher.

5 6 7 8 9 0 KHL SW 9 6 5

Library of Congress Cataloging-in-Publication Data

Chiang, Alpha C., (date).

Elements of dynamic optimization / Alpha C. Chiang.

p. cm.

Includes bibliographical references and index.

ISBN 0-07-010911-7

1. Mathematical optimization. 2. Economics, Mathematical. I. Title.

HB143.7.C45 1992

330'.01'51-dc20 91-37803

This book was set in Century Schoolbook by Science Typographers, Inc.

The editor was Scott D. Stratford;

the production supervisor was Denise L. Puryear.

The cover was designed by John Hite.

Project supervision was done by Science Typographers, Inc.

Art was prepared electronically by Science Typographers, Inc.

When ordering this title, use ISBN 0-07-112568-X.

Printed in Singapore.

CONTENTS

Preface

Part 1 Introduction

1 The Nature of Dynamic Optimization
1.1 Salient Features of Dynamic Optimization Problems
1.2 Variable Endpoints and Transversality Conditions
1.3 The Objective Functional
1.4 Alternative Approaches to Dynamic Optimization

Part 2 The Calculus of Variations

2 The Fundamental Problem of the Calculus of Variations
2.1 The Euler Equation
2.2 Some Special Cases
2.3 Two Generalizations of the Euler Equation
2.4 Dynamic Optimization of a Monopolist
2.5 Trading Off Inflation and Unemployment

3 Transversality Conditions for Variable-Endpoint Problems
3.1 The General Transversality Condition
3.2 Specialized Transversality Conditions
3.3 Three Generalizations
3.4 The Optimal Adjustment of Labor Demand

4 Second-Order Conditions
4.1 Second-Order Conditions
4.2 The Concavity/Convexity Sufficient Condition
4.3 The Legendre Necessary Condition
4.4 First and Second Variations

5 Infinite Planning Horizon
5.1 Methodological Issues of Infinite Horizon
5.2 The Optimal Investment Path of a Firm
5.3 The Optimal Social Saving Behavior
5.4 Phase-Diagram Analysis
5.5 The Concavity/Convexity Sufficient Condition Again

6 Constrained Problems
6.1 Four Basic Types of Constraints
6.2 Some Economic Applications Reformulated
6.3 The Economics of Exhaustible Resources

Part 3 Optimal Control Theory

7 Optimal Control: The Maximum Principle
7.1 The Simplest Problem of Optimal Control
7.2 The Maximum Principle
7.3 The Rationale of the Maximum Principle
7.4 Alternative Terminal Conditions
7.5 The Calculus of Variations and Optimal Control Theory Compared
7.6 The Political Business Cycle
7.7 Energy Use and Environmental Quality

8 More on Optimal Control
8.1 An Economic Interpretation of the Maximum Principle
8.2 The Current-Value Hamiltonian
8.3 Sufficient Conditions
8.4 Problems with Several State and Control Variables
8.5 Antipollution Policy

9 Infinite-Horizon Problems
9.1 Transversality Conditions
9.2 Some Counterexamples Reexamined
9.3 The Neoclassical Theory of Optimal Growth
9.4 Exogenous and Endogenous Technological Progress

10 Optimal Control with Constraints
10.1 Constraints Involving Control Variables
10.2 The Dynamics of a Revenue-Maximizing Firm
10.3 State-Space Constraints
10.4 Economic Examples of State-Space Constraints
10.5 Limitations of Dynamic Optimization

Answers to Selected Exercise Problems

Index

PREFACE

In recent years I have received many requests to expand my Fundamental

Methods of Mathematical Economics to include the subject of dynamic

optimization. Since the existing size of that book would impose a severe

space constraint, I decided to present the topic of dynamic optimization in a

separate volume. Separateness notwithstanding, the present volume can be

considered as a continuation of Fundamental Methods of Mathematical

Economics.

As the title Elements of Dynamic Optimization implies, this book is

intended as an introductory text rather than an encyclopedic tome. While

the basics of the classical calculus of variations and its modern cousin,

optimal control theory, are explained thoroughly, differential games and

stochastic control are not included. Dynamic programming is explained in

the discrete-time form; I exclude the continuous-time version because it

needs partial differential equations as a prerequisite, which would have

taken us far afield.

Although the advent of optimal control theory has caused the calculus of variations to be overshadowed, I deem it inadvisable to dismiss the topic of variational calculus. For one thing, a knowledge of the latter is indispensable for understanding many classic economic papers written in the calculus-of-variations mold. Besides, the method is used even in recent writings. Finally, a background in the calculus of variations facilitates a better and fuller understanding of optimal control theory. The reader who is only interested in optimal control theory may, if desired, skip Part 2 of the present volume. But I would strongly recommend reading at least the following: Chap. 2 (the Euler equation), Sec. 4.2 (checking concavity/convexity), and Sec. 5.1 (methodological issues of infinite horizon, relevant also to optimal control theory).

Certain features of this book are worth pointing out. In developing the

Euler equation, I supply more details than most other books in order that

the reader can better appreciate the beauty of the logic involved (Sec. 2.1).


In connection with infinite-horizon problems, I attempt to clarify some

common misconceptions about the conditions for convergence of improper

integrals (Sec. 5.1). I also try to argue that the alleged counterexamples in

optimal control theory against infinite-horizon transversality conditions

may be specious, since they involve a failure to recognize the presence of

implicit fixed terminal states in those examples (Sec. 9.2).

To maintain a sense of continuity with Fundamental Methods of

Mathematical Economics, I have written this volume with a comparable

level of expository patience, and, I hope, clarity and readability. The discussion

of mathematical techniques is always reinforced with numerical

illustrations, economic examples, and exercise problems. In the numerical

illustrations, I purposely present the shortest-distance problem (a simple

problem with a well-known solution) in several different alternative formulations,

and use it as a running thread through the book.

In the choice of economic examples, my major criterion is the suitability of the economic models as illustrations of the particular mathematical techniques under study. Although recent economic applications are natural candidates for inclusion, I have not shied away from classic articles. Some classic articles are not only worth studying in their own right, but also turn out to be excellent for illustrative purposes because their model structures are uncluttered with secondary complicating assumptions. As a by-product, the juxtaposition of old and new economic models also provides an interesting glimpse of the development of economic thought. For instance, from the classic Ramsey growth model (Sec. 5.3) through the neoclassical growth model of Cass (Sec. 9.3) to the Romer growth model with endogenous technological progress (Sec. 9.4), one sees a progressive refinement in the analytical framework. Similarly, from the classic Hotelling model of exhaustible resources (Sec. 6.3) to the Forster models of energy use and pollution (Sec. 7.7 and Sec. 8.5), one sees the shift in the focus of societal concerns from resource-exhaustion to environmental quality. A comparison of the classic Evans model of dynamic monopolist (Sec. 2.4) with the more recent model of Leland on the dynamics of a revenue-maximizing firm (Sec. 10.2) also illustrates one of the many developments in microeconomic reorientation.

In line with my pedagogical philosophy, I attempt to explain each economic model in a step-by-step manner from its initial construction through the intricacies of mathematical analysis to its final solution. Even though the resulting lengthier treatment requires limiting the number of economic models presented, I believe that the detailed guidance is desirable because it serves to minimize the trepidation and frustration often associated with the learning of mathematics.

In the writing of this book, I have benefited immensely from the numerous comments and suggestions of Professor Bruce A. Forster of the University of Wyoming, whose keen eyes caught many sins of commission and omission in the original manuscript. Since I did not accept all his suggestions, however, I alone should be held responsible for the remaining imperfections. Many of my students over the years, on whom I tried the earlier drafts of this book, also helped me with their questions and reactions. Scott D. Stratford, my editor, exerted the right amount of encouragement and pressure at critical moments to keep me going. And the cooperative efforts of Joseph Murphy at McGraw-Hill, and Sarah Roesser, Cheryl Kranz, and Ellie Simon at Science Typographers, Inc., made the production process smooth as well as pleasant. Thanks are also due to Edward T. Dowling for ferreting out some typographical errors that lurked in the initial printing of the book. Finally, my wife Emily again offered me unstinting assistance on manuscript preparation. To all of them, I am deeply grateful.

Alpha C. Chiang

ELEMENTS OF DYNAMIC OPTIMIZATION

PART 1: INTRODUCTION

CHAPTER 1: THE NATURE OF DYNAMIC OPTIMIZATION

Optimization is a predominant theme in economic analysis. For this reason, the classical calculus methods of finding free and constrained extrema and the more recent techniques of mathematical programming occupy an important place in the economist's everyday tool kit. Useful as they are, such tools are applicable only to static optimization problems. The solution sought in such problems usually consists of a single optimal magnitude for every choice variable, such as the optimal level of output per week and the optimal price to charge for a product. It does not call for a schedule of optimal sequential action.

In contrast, a dynamic optimization problem poses the question of what is the optimal magnitude of a choice variable in each period of time within the planning period (discrete-time case) or at each point of time in a given time interval, say [0, T] (continuous-time case). It is even possible to consider an infinite planning horizon, so that the relevant time interval is \([0, \infty)\), literally "from here to eternity." The solution of a dynamic optimization problem would thus take the form of an optimal time path for every choice variable, detailing the best value of the variable today, tomorrow, and so forth, till the end of the planning period. Throughout this book, we shall use the asterisk to denote optimality. In particular, the optimal time path of a (continuous-time) variable y will be denoted by y*(t).


1.1 SALIENT FEATURES OF DYNAMIC OPTIMIZATION PROBLEMS

Although dynamic optimization is mostly couched in terms of a sequence of

time, it is also possible to envisage the planning horizon as a sequence of

stages in an economic process. In that case, dynamic optimization can be

viewed as a problem of multistage decision making. The distinguishing

feature, however, remains the fact that the optimal solution would involve

more than one single value for the choice variable.

Multistage Decision Making


The multistage character of dynamic optimization can be illustrated with a simple discrete example. Suppose that a firm engages in transforming a certain substance from an initial state A (raw material state) into a terminal state Z (finished product state) through a five-stage production process. In every stage, the firm faces the problem of choosing among several possible alternative subprocesses, each entailing a specific cost. The question is: How should the firm select the sequence of subprocesses through the five stages in order to minimize the total cost?

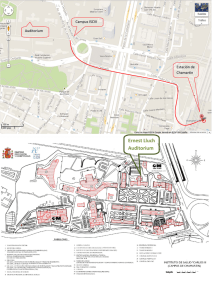

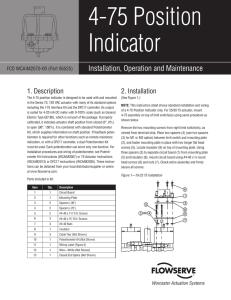

In Fig. 1.1, we illustrate such a problem by plotting the stages horizontally and the states vertically. The initial state A is shown by the leftmost point (at the beginning of stage 1); the terminal state Z is shown by the rightmost point (at the end of stage 5). The other points B, C, ..., K show the various intermediate states into which the substance may be transformed during the process. These points (A, B, ..., Z) are referred to as vertices. To indicate the possibility of transformation from state A to state B, we draw an arc from point A to point B. The other arc AC shows that the substance can also be transformed into state C instead of state B. Each arc is assigned a specific value (in the present example, a cost) shown in a circle in Fig. 1.1. The first-stage decision is whether to transform the raw material into state B (at a cost of $2) or into state C (at a cost of $4), that is, whether to choose arc AB or arc AC. Once the decision is made, there will arise another problem of choice in stage 2, and so forth, till state Z is reached. Our problem is to choose a connected sequence of arcs going from left to right, starting at A and terminating at Z, such that the sum of the values of the component arcs is minimized. Such a sequence of arcs will constitute an optimal path.

FIGURE 1.1 (stages 1 to 5 on the horizontal axis, states on the vertical axis)

The example in Fig. 1.1 is simple enough so that a solution may be found by enumerating all the admissible paths from A to Z and picking the one with the least total arc values. For more complicated problems, however, a systematic method of attack is needed. This we shall discuss later when we introduce dynamic programming in Sec. 1.4. For the time being, let us just note that the optimal solution for the present example is the path ACEHJZ, with $14 as the minimum cost of production. This solution serves to point out a very important fact: A myopic, one-stage-at-a-time optimization procedure will not in general yield the optimal path! For example, a myopic decision maker would have chosen arc AB over arc AC in the first stage, because the former involves only half the cost of the latter; yet, over the span of five stages, the more costly first-stage arc AC should be selected instead. It is precisely for this reason, of course, that a method that can take into account the entire planning period needs to be developed.
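The contrast between a myopic procedure and one that looks at the whole path can be sketched on a small staged network. The arc costs below are hypothetical (they are not the values of Fig. 1.1, which are not reproduced here), and the recursive cost-to-go computation is only one possible systematic method, offered as an illustration rather than as the book's own algorithm.

```python
from functools import lru_cache

# Hypothetical staged network (NOT the data of Fig. 1.1).
# ARCS[state] maps each reachable next state to the cost of that arc.
ARCS = {
    "A": {"B": 2, "C": 4},
    "B": {"D": 7, "E": 6},
    "C": {"E": 2, "F": 5},
    "D": {"Z": 3},
    "E": {"Z": 4},
    "F": {"Z": 2},
}


def myopic_path(arcs, start, goal):
    """Pick the cheapest arc one stage at a time, ignoring later stages."""
    path, total, state = [start], 0, start
    while state != goal:
        nxt = min(arcs[state], key=arcs[state].get)
        total += arcs[state][nxt]
        path.append(nxt)
        state = nxt
    return path, total


def optimal_path(arcs, start, goal):
    """Minimize the total cost over the whole path via a cost-to-go recursion."""

    @lru_cache(maxsize=None)
    def best_from(state):
        if state == goal:
            return 0, (goal,)
        options = []
        for nxt, cost in arcs[state].items():
            tail_cost, tail = best_from(nxt)
            options.append((cost + tail_cost, (state,) + tail))
        return min(options)

    total, path = best_from(start)
    return list(path), total


if __name__ == "__main__":
    print("myopic :", myopic_path(ARCS, "A", "Z"))
    print("optimal:", optimal_path(ARCS, "A", "Z"))
```

In this made-up network the myopic rule starts with the cheaper arc AB and ends with a total cost of 12, whereas the whole-path minimum of 10 starts with the dearer arc AC, the same qualitative pattern as in the text.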

The Continuous-Variable Version

The example in Fig. 1.1 is characterized by a discrete stage variable, which takes only integer values. Also, the state variable is assumed to take values belonging to a small finite set, {A, B, ..., Z}. If these variables are continuous, we may instead have a situation as depicted in Fig. 1.2, where, for illustration, we have drawn only five possible paths from A to Z. Each possible path is now seen to travel through an infinite number of stages in the interval [0, T]. There is also an infinite number of states on each path, each state being the result of a particular choice made in a specific stage.

FIGURE 1.2 (paths from A to Z, with the state on the vertical axis and the stage variable running from 0 to T on the horizontal axis)

For concreteness, let us visualize Fig. 1.2 to be a map of an open

terrain, with the stage variable representing the longitude, and the state

variable representing the latitude. Our assigned task is to transport a load

of cargo from location A to location Z at minimum cost by selecting an

appropriate travel path. The cost associated with each possible path depends,

in general, not only on the distance traveled, but also on the

topography on that path. However, in the special case where the terrain is

completely homogeneous, so that the transport cost per mile is a constant,

the least-cost problem will simply reduce to a shortest-distance problem.

The solution in that case is a straight-line path, because such a path entails

the lowest total cost (has the lowest path value). The straight-line solution

is, of course, well known-so much so that one usually accepts it without

demanding to see a proof of it. In the next chapter (Sec. 2.2, Example 4), we

shall prove this result by using the calculus of variations, the classical

approach to the continuous version of dynamic optimization.
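Before the formal proof, the straight-line result can be checked numerically. The sketch below is only an illustration under assumed data: the endpoints and the family of five bent paths are hypothetical, and each path length is approximated by a fine polyline.

```python
import math


def path_length(y, T, n=10_000):
    """Approximate the length of the curve y(t), 0 <= t <= T, by a polyline."""
    dt = T / n
    length = 0.0
    for i in range(n):
        t0, t1 = i * dt, (i + 1) * dt
        length += math.hypot(t1 - t0, y(t1) - y(t0))
    return length


# Hypothetical endpoints: A = y(0) and Z = y(T).
T, A, Z = 4.0, 1.0, 4.0


def make_path(bend):
    """Straight line from (0, A) to (T, Z) plus a bump that vanishes at both ends."""
    return lambda t: A + (Z - A) * t / T + bend * math.sin(math.pi * t / T)


# Five admissible paths from A to Z; bend = 0.0 is the straight line.
lengths = {bend: path_length(make_path(bend), T) for bend in (-1.0, -0.5, 0.0, 0.5, 1.0)}

if __name__ == "__main__":
    for bend, length in lengths.items():
        print(f"bend = {bend:+.1f}  length = {length:.4f}")
```

With a constant cost per mile, the path value is proportional to these lengths, so the unbent path has the lowest value among the five candidates. A numerical comparison of this kind does not prove the result; it only makes it plausible.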

For most of the problems discussed in the following, the stage variable

will represent time; then the curves in Fig. 1.2 will depict time paths. As a

concrete example, consider a firm with an initial capital stock equal to A at

time 0, and a predetermined target capital stock equal to Z at time T. Many

alternative investment plans over the time interval [0,T] are capable of

achieving the target capital at time T. And each investment plan implies a

specific capital path and entails a specific potential profit for the firm. In

this case, we can interpret the curves in Fig. 1.2 as possible capital paths

and their path values as the corresponding profits. The problem of the firm

is to identify the investment plan-hence the capital path-that yields the

maximum potential profit. The solution of the problem will, of course,

depend crucially on how the potential profit is related to and determined by

the configuration of the capital path.

From the preceding discussion, it should be clear that, regardless of

whether the variables are discrete or continuous, a simple type of dynamic

optimization problem would contain the following basic ingredients:

1 a given initial point and a given terminal point;

2 a set of admissible paths from the initial point to the terminal point;

3 a set of path values serving as performance indices (cost, profit, etc.) associated with the various paths; and

4 a specified objective, either to maximize or to minimize the path value or performance index by choosing the optimal path.


The Concept of a Functional

The relationship between paths and path values deserves our close attention, for it represents a special sort of mapping: not a mapping from real numbers to real numbers as in the usual function, but a mapping from paths (curves) to real numbers (performance indices). Let us think of the paths in question as time paths, and denote them by \(y_{I}(t)\), \(y_{II}(t)\), and so on. Then the mapping is as shown in Fig. 1.3, where \(V_{I}\), \(V_{II}\) represent the associated path values. The general notation for the mapping should therefore be V[y(t)]. But it must be emphasized that this symbol fundamentally differs from the composite-function symbol g[f(x)]. In the latter, g is a function of f, and f is in turn a function of x; thus, g is in the final analysis a function of x. In the symbol V[y(t)], on the other hand, the y(t) component comes as an integral unit (to indicate time paths) and therefore we should not take V to be a function of t. Instead, V should be understood to be a function of "y(t)" as such.

FIGURE 1.3 (mapping from the set of admissible paths (curves) to the set of path values (real line))

FIGURE 1.4 (panels (a) and (b): MC and MR curves, with dollars on the vertical axis and output Q on the horizontal axis)

To make clear this difference, this type of mapping is given a distinct name: functional. To further avoid confusion, many writers omit the "(t)" part of the symbol, and write the functional as V[y] or V{y}, thereby underscoring the fact that it is the change in the position of the entire y path (the variation in the y path), as against the change in t, that results in a change in path value V. The symbol we employ is V[y]. Note that when the symbol y is used to indicate a certain state, it is suffixed, and appears as, say, y(0) for the initial state or y(T) for the terminal state. In contrast, in the path connotation, the t in y(t) is not assigned a specific value. In the following, when we want to stress the specific time interval involved in a path or a segment thereof, we shall use the notation y[0, T] or y[0, T). More often, however, we shall simply use the y(t) symbol, or use the term "y path". The optimal time path is then denoted by y*(t), or the y* path.
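In programming terms, a functional behaves like a function whose argument is itself a function. The sketch below assumes a purely hypothetical functional, the integral of y(t) squared over [0, T], chosen only because its exact values are easy to check; the names `path_value`, `y_one`, and `y_two` are illustrative and not from the text.

```python
import math


def path_value(y, T, n=1000):
    """Hypothetical functional V[y]: integral of y(t)**2 over [0, T] (midpoint rule).

    The argument y is an entire path (a callable), not a single number.
    """
    dt = T / n
    return sum(y((i + 0.5) * dt) ** 2 for i in range(n)) * dt


def y_one(t):
    """First admissible path from (0, 0) to (1, 1)."""
    return t


def y_two(t):
    """Second admissible path with the same endpoints."""
    return math.sqrt(t)


V_one = path_value(y_one, T=1.0)  # exact integral of t**2 on [0, 1] is 1/3
V_two = path_value(y_two, T=1.0)  # exact integral of t on [0, 1] is 1/2

if __name__ == "__main__":
    print(V_one, V_two)
```

The two paths share the same initial and terminal points, yet they are mapped to different real numbers, which is the sense in which the path value depends on the position of the entire path rather than on any particular t.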

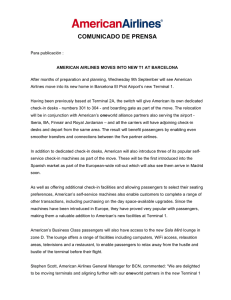

As an aside, we may note that although the concept of a functional takes a prominent place primarily in dynamic optimization, we can find examples of it even in elementary economics. In the economics of the firm, the profit-maximizing output is found by the decision rule MC = MR (marginal cost = marginal revenue). Under purely competitive conditions, where the MR curves are horizontal as in Fig. 1.4a, each MR curve can be represented by a specific, exogenously determined price, \(P_0\). Given the MC curve, we can therefore express the optimal output as \(Q^* = Q^*(P_0)\), which is a function, mapping a real number (price) into a real number (optimal output). But when the MR curves are downward-sloping under imperfect competition, the optimal output of the firm with a given MC curve will depend on the specific position of the MR curve. In such a case, since the output decision involves a mapping from curves to real numbers, we in fact have something in the nature of a functional: \(Q^* = Q^*[MR]\). It is, of course, precisely this inability to express the optimal output as a function of price that makes it impossible to draw a supply curve for a firm under imperfect competition, as we can for its competitive counterpart.
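The distinction between \(Q^*(P_0)\) and \(Q^*[MR]\) can be made concrete with a small numerical sketch. Everything specific here is an assumption for illustration: the linear MC and MR curves, the bisection solver, and the function names are hypothetical and do not come from the text.

```python
def optimal_output(mc, mr, q_max=100.0, tol=1e-10):
    """Solve MR(q) = MC(q) by bisection on [0, q_max].

    Assumes MR - MC is positive at q = 0 and negative at q = q_max.
    """
    lo, hi = 0.0, q_max
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mr(mid) - mc(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def mc(q):
    """Hypothetical upward-sloping marginal-cost curve."""
    return 2.0 + q


def q_star_competitive(price):
    """Pure competition: a single number (the price) pins down the flat MR curve."""
    return optimal_output(mc, lambda q: price)


def mr_steep(q):
    """Hypothetical downward-sloping MR curve."""
    return 10.0 - 3.0 * q


def mr_flat(q):
    """Another hypothetical MR curve with the same intercept but a gentler slope."""
    return 10.0 - q


if __name__ == "__main__":
    print(q_star_competitive(6.0))       # function of a number
    print(optimal_output(mc, mr_steep))  # depends on the whole MR curve
    print(optimal_output(mc, mr_flat))
```

In the competitive case the argument is a real number; in the other two calls the argument is an entire curve, and two curves that differ only in position lead to different optimal outputs.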

1.2 VARIABLE ENDPOINTS AND TRANSVERSALITY CONDITIONS

In our earlier statement of the problem of dynamic optimization, we simplified matters by assuming a given initial point [a given ordered pair (0, A)] and a given terminal point [a given ordered pair (T, Z)]. The assumption of a given initial point may not be unduly restrictive, because, in the usual problem, the optimizing plan must start from some specific initial position, say, the current position. For this reason, we shall retain this assumption throughout most of the book. The terminal position, on the other hand, may very well turn out to be a flexible matter, with no inherent need for it to be predetermined. We may, for instance, face only a fixed terminal time, but have complete freedom to choose the terminal state (say, the terminal capital stock). On the other hand, we may also be assigned a rigidly specified terminal state (say, a target inflation rate), but are free to select the terminal time (when to achieve the target). In such a case, the terminal point becomes a part of the optimal choice. In this section we shall briefly discuss some basic types of variable terminal points.

We shall take the stage variable to be continuous time. We shall also retain the symbols 0 and T for the initial time and terminal time, and the symbols A and Z for the initial and terminal states. When no confusion can arise, we may also use A and Z to designate the initial and terminal points (ordered pairs), especially in diagrams.

Types of Variable Terminal Points

As the first type of variable terminal point, we may be given a fixed terminal time T, but a free terminal state. In Fig. 1.5a, while the planning horizon is fixed at time T, any point on the vertical line t = T is acceptable as a terminal point, such as \(Z_1\), \(Z_2\), and \(Z_3\). In such a problem, the planner obviously enjoys much greater freedom in the choice of the optimal path and, as a consequence, will be able to achieve a better (or at least no worse) optimal path value, V*, than if the terminal point is rigidly specified.
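The "better or at least no worse" claim can be illustrated with the shortest-distance problem. The sketch relies on the straight-line result cited earlier for each fixed terminal point; the initial state, the terminal time, and the coarse grid of candidate terminal states are hypothetical.

```python
import math

# Hypothetical data: initial point (0, A) and fixed terminal time T.
A, T = 2.0, 3.0


def best_value_fixed(Z):
    """Minimum path value when the terminal point (T, Z) is fixed.

    Uses the straight-line distance from (0, A) to (T, Z) as the optimal value.
    """
    return math.hypot(T, Z - A)


# Candidate terminal states on the vertical line t = T (a coarse, assumed grid).
candidates = [i * 0.5 for i in range(11)]  # 0.0, 0.5, ..., 5.0

# With a free terminal state, the planner may also choose Z itself.
best_Z = min(candidates, key=best_value_fixed)
V_free = best_value_fixed(best_Z)

if __name__ == "__main__":
    print(best_Z, V_free)
```

Because the objective here is a minimum, "better" means smaller: the value attained with a free terminal state never exceeds the value attached to any one prescribed terminal state on the grid.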

This type of problem is commonly referred to in the literature as a fixed-time-horizon problem, or fixed-time problem, meaning that the terminal time of the problem is fixed rather than free. Though explicit about the time horizon, this name fails to give a complete description of the problem, since nothing is said about the terminal state. Only by implication are we to understand that the terminal state is free. A more informative characterization of the problem is contained in the visual image in Fig. 1.5a. In line with this visual image, we shall alternatively refer to the fixed-time problem as the vertical-terminal-line problem.

To give an economic example of such a problem, suppose that a monopolistic firm is seeking to establish a (smooth) optimal price path over a given planning period, say, 12 months, for purpose of profit maximization. The current price enters into the problem as the initial state. If there is no legal price restriction in force, the terminal price will be completely up to the firm to decide. Since negative prices are inadmissible, however, we must eliminate from consideration all P < 0. The result is a truncated vertical terminal line, which is what Fig. 1.5a in fact shows. If, in addition, an official price ceiling is expected to be in force at the terminal time t = T, then further truncation of the vertical terminal line is needed.

FIGURE 1.5 (panels (a), (b), and (c), with y on the vertical axis: (a) vertical terminal line t = T; (b) horizontal terminal line y = Z; (c) terminal curve \(Z = \phi(T)\))

The second type of variable-terminal-point problem reverses the roles played by the terminal time and terminal state; now the terminal state Z is stipulated, but the terminal time is free. In Fig. 1.5b, the horizontal line y = Z constitutes the set of admissible terminal points. Each of these, depending on the path chosen, may be associated with a different terminal time, as exemplified by \(T_1\), \(T_2\), and \(T_3\). Again, there is greater freedom of choice as compared with the case of a fixed terminal point. The task of the planner might, for instance, be that of producing a good with a particular quality characteristic (steel with given tensile strength) at minimum cost, but there is complete discretion over the length of the production period. This permits the use of a lengthier, but less expensive production method that might not be feasible under a rushed production schedule.


This type of problem is commonly referred to as a fixed-endpoint problem. A possible confusion can arise from this name because the word "endpoint" is used here to designate only the terminal state Z, not the entire endpoint in the sense of the ordered pair (T, Z). To take advantage of the visual image of the problem in Fig. 1.5b, we shall alternatively refer to the fixed-endpoint problem as the horizontal-terminal-line problem.

Turning the problem around, it is also possible in a problem of this

type to have minimum production time (rather than minimum cost) as the

objective. In that case, the path with T1 as the terminal time becomes

preferable to the one ending at T2, regardless of the relative cost entailed.

This latter type of problem is called a time-optimal problem.

In the third type of variable-terminal-point problem, neither the terminal time T nor the terminal state Z is individually preset, but the two are tied together via a constraint equation of the form \(Z = \phi(T)\). As illustrated in Fig. 1.5c, such an equation plots as a terminal curve (or, in higher dimension, a terminal surface) that associates a particular terminal time (say, \(T_1\)) with a corresponding terminal state (say, \(Z_1\)). Even though the problem leaves both T and Z flexible, the planner actually has only one degree of freedom in the choice of the terminal point. Still, the field of choice is obviously again wider than if the terminal point is fully prescribed. We shall call this type of problem the terminal-curve (or terminal-surface) problem.

To give an economic example of a terminal curve, we may cite the case of a custom order for a product, for which the customer is interested in having both (1) an early date of completion, and (2) a particular quality characteristic, say, low breakability. Being aware that both cannot be attained simultaneously, the customer accepts a tradeoff between the two considerations. Such a tradeoff may appear as the curve \(Z = \phi(T)\) in Fig. 1.5c, where y denotes breakability.

The preceding discussion pertains to problems with finite planning

horizon, where the terminal time T is a finite number. Later we shall also

encounter problems where the planning horizon is infinite (\(T \to \infty\)).

Transversality Condition

The common feature of variable-terminal-point problems is that the planner has one more degree of freedom than in the fixed-terminal-point case. But this fact automatically implies that, in deriving the optimal solution, an extra condition is needed to pinpoint the exact path chosen. To make this clear, let us compare the boundary conditions for the optimal path in the fixed- versus the variable-terminal-point cases. In the former, the optimal path must satisfy the boundary (initial and terminal) conditions

\[ y(0) = A \quad \text{and} \quad y(T) = Z \qquad (T,\ A,\ \text{and } Z \text{ all given}) \]

In the latter case, the initial condition y(0) = A still applies by assumption. But since T and/or Z are now variable, the terminal condition y(T) = Z is no longer capable of pinpointing the optimal path for us. As Fig. 1.5 shows, all admissible paths, ending at \(Z_1\), \(Z_2\), or other possible terminal positions, equally satisfy the condition y(T) = Z. What is needed, therefore, is a terminal condition that can conclusively distinguish the optimal path from the other admissible paths. Such a condition is referred to as a transversality condition, because it normally appears as a description of how the optimal path crosses the terminal line or the terminal curve (to "transverse" means "to go across").

Variable Initial Point

Although we have assumed that only the terminal point can vary, the

discussion in this section can be adapted to a variable initial point as well.

Thus, there may be an initial curve depicting admissible combinations of the

initial time and the initial state. Or there may be a vertical initial line t = 0,

indicating that initial time 0 is given, but the initial state is unrestricted. As

an exercise, the reader is asked to sketch suitable diagrams similar to those

in Fig. 1.5 for the case of a variable initial point.

If the initial point is variable, the characterization of the optimal path

must also include another transversality condition in place of the equation

y(0) = A, to describe how the optimal path crosses the initial line or initial

curve.

EXERCISE 1.2

1 Sketch suitable diagrams similar to Fig. 1.5 for the case of a variable initial
point.

2 In Fig. 1.5a, suppose that y represents the price variable. How would an
official price ceiling that is expected to take effect at time t = T affect the
diagram?

3 In Fig. 1.5c, let y denote heat resistance in a product, a quality which takes

longer production time to improve. How would you redraw the terminal

curve to depict the tradeoff between early completion date and high heat

resistance?

1.3 THE OBJECTIVE FUNCTIONAL

The Integral Form of Functional

An optimal path is, by definition, one that maximizes or minimizes the path
value V[y]. Inasmuch as any y path must perforce travel through an
interval of time, its total value would naturally be a sum. In the discrete-stage
framework of Fig. 1.1, the path value is the sum of the values of its
component arcs. The continuous-time counterpart of such a sum is a
definite integral, $\int_0^T (\text{arc value})\,dt$. But how do we express the "arc value"
for the continuous case?

To answer this, we must first be able to identify an "arc" on a

continuous-time path. Figure 1.1 suggests that three pieces of information

are needed for arc identification: (1) the starting stage (time), (2) the

starting state, and (3) the direction in which the arc proceeds. With continuous
time, since each arc is infinitesimal in length, these three items are
represented by, respectively: (1) t, (2) y(t), and (3) y'(t) = dy/dt. For
instance, on a given path $y_I$, the arc associated with a specific point of time
$t_0$ is characterized by a unique value $y_I(t_0)$ and a unique slope $y_I'(t_0)$. If
there exists some function, F, that assigns arc values to arcs, then the value
of the said arc can be written as $F[t_0, y_I(t_0), y_I'(t_0)]$. Similarly, on another
path, $y_{II}$, the height and the slope of the curve at $t = t_0$ are $y_{II}(t_0)$ and
$y_{II}'(t_0)$, respectively, and the arc value is $F[t_0, y_{II}(t_0), y_{II}'(t_0)]$. It follows that
the general expression for arc values is F[t, y(t), y'(t)], and the path-value
functional, the sum of arc values, can generally be written as the definite
integral

(1.1)    $V[y] = \int_0^T F[t, y(t), y'(t)]\,dt$

It bears repeating that, as the symbol V[y] emphasizes, it is the variation in

the y path ($y_I$ versus $y_{II}$) that alters the magnitude of V. Each different y

path consists of a different set of arcs in the time interval [0, T], which,

through the arc-value-assigning function F, takes a different set of arc

values. The definite integral sums those arc values on each y path into a

path value.

If there are two state variables, y and z, in the problem, the arc values
on both the y and z paths must be taken into account. The objective
functional should then appear as

(1.2)    $V[y, z] = \int_0^T F[t, y(t), z(t), y'(t), z'(t)]\,dt$

A problem with an objective functional in the form of (1.1) or (1.2)

constitutes the standard problem. For simplicity, we shall often suppress

the time argument (t) for the state variables and write the integrand

function more concisely as F(t, y, y') or F(t, y, z, y', z').

A Microeconomic Example

A functional of the standard form in (1.1) may arise, for instance, in the
case of a profit-maximizing, long-range-planning monopolistic firm with a
dynamic demand function $Q_d = D(P, P')$, where P' = dP/dt. In order to
set $Q_s = Q_d$ (to allow no inventory accumulation or decumulation), the
firm's output should be Q = D(P, P'), so that its total-revenue function is

    R = PQ = R(P, P')

Assuming that the total-cost function depends only on the level of output,

we can write the composite function

    C = C(Q) = C[D(P, P')]

It follows that the total profit also depends on P and P':

    $\pi = R - C = R(P, P') - C[D(P, P')] = \pi(P, P')$

Summing $\pi$ over, say, a five-year period, results in the objective
functional

    $\int_0^5 \pi(P, P')\,dt$

which conforms to the general form of (1.1), except that the argument t in
the F function happens to be absent. However, if either the revenue
function or the cost function can shift over time, then that function should
contain t as a separate argument; in that case the $\pi$ function would also
have t as an argument. Then the corresponding objective functional

    $\int_0^5 \pi(t, P, P')\,dt$

would be exactly in the form of (1.1). As another possibility, the variable t
can enter into the integrand via a discount factor $e^{-\rho t}$.

To each price path in the time interval [0, 5], there must correspond a
particular five-year profit figure, and the objective of the firm is to find the
optimal price path P*[0, 5] that maximizes the five-year profit figure.
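The mapping from a price path to a single profit figure can be illustrated with a short numerical sketch. The linear demand function, the quadratic cost function, every coefficient, and the two candidate price paths below are assumptions chosen only for illustration; none of them comes from the text.

```python
# Illustrative sketch: evaluate V[P] = integral_0^5 pi(P, P') dt for two price paths.
# All functional forms and numbers are hypothetical.

def demand(P, dP):
    """Assumed dynamic demand Q = D(P, P') = a - b*P + h*P'."""
    a, b, h = 200.0, 5.0, 10.0
    return a - b * P + h * dP

def cost(Q):
    """Assumed total cost C(Q), depending only on the level of output."""
    return 0.05 * Q**2 + 5.0 * Q + 100.0

def profit(P, dP):
    """pi(P, P') = R(P, P') - C[D(P, P')], with R = P * Q."""
    Q = demand(P, dP)
    return P * Q - cost(Q)

def path_value(path, slope, T=5.0, n=5000):
    """Midpoint-rule approximation of integral_0^T pi(P(t), P'(t)) dt."""
    h = T / n
    total = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        total += profit(path(t), slope(t))
    return total * h

# Two price paths sharing the endpoints P(0) = 20 and P(5) = 25.
linear = (lambda t: 20.0 + t, lambda t: 1.0)
curved = (lambda t: 20.0 + 0.2 * t**2, lambda t: 0.4 * t)

V_linear = path_value(*linear)
V_curved = path_value(*curved)
print(V_linear, V_curved)  # one number per path: the functional maps paths to values
```

Each path is passed together with its slope because the integrand depends on both P and P'; the two paths share endpoints yet yield different path values, which is what makes the choice of path an optimization problem.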

A Macroeconomic Example

Let the social welfare of an economy at any time be measured by the utility

from consumption, U = U(C). Consumption is by definition that portion of

output not saved (and not invested). If we adopt the production function

Q = Q(K, L), and assume away depreciation, we can then write

    C = Q(K, L) - I = Q(K, L) - K'

where K' = I denotes net investment. This implies that the utility function
can be rewritten as

    U(C) = U[Q(K, L) - K']

If the societal goal is to maximize the sum of utility over a period
[0, T], then its objective functional takes the form

    $\int_0^T U[Q(K, L) - K']\,dt$

This exemplifies the functional in (1.2), where the two state variables y and
z refer in the present example to K and L, respectively.

Note that while the integrand function of this example does contain
both K and K' as arguments, the L variable appears only in its natural
form unaccompanied by L'. Moreover, the t argument is absent from the F
function, too. In terms of (1.2), the F function contains only three arguments
in the present example: F[y(t), z(t), y'(t)], or F[K, L, K'].

Other Forms of Functional

Occasionally, the optimization criterion in a problem may not depend on

any intermediate positions that the path goes through, but may rely exclusively
on the position of the terminal point attained. In that event, no
definite integral arises, since there is no need to sum the arc values over an
interval. Rather, the objective functional appears as

(1.3)    $V[y] = G[T, y(T)]$

where the G function is based on what happens at the terminal time T

only.

It may also happen that both the definite integral in (1.1) and the

terminal-point criterion in (1.3) enter simultaneously in the objective functional.
Then we have

(1.4)    $V[y] = \int_0^T F[t, y(t), y'(t)]\,dt + G[T, y(T)]$

where the G function may represent, for instance, the scrap value of some
capital equipment. Moreover, the functionals in (1.3) and (1.4) can again be
expanded to include more than one state variable. For example, with two
state variables y and z, (1.3) would become

(1.5)    $V[y, z] = G[T, y(T), z(T)]$

A problem with the type of objective functional in (1.3) is called a
problem of Mayer. Since only the terminal position matters in V, it is also
known as a terminal-control problem. If (1.4) is the form of the objective
functional, then we have a problem of Bolza.

Although the problem of Bolza may seem to be the more general

formulation, the truth is that the three types of problems-standard,

Mayer, and Bolza-are all convertible into one another. For example, the

functional (1.3) can be transformed into the form (1.1) by defining a new
variable

(1.6)    $z(t) = G[t, y(t)]$    with initial condition z(0) = 0

It should be noted that it is "t" rather than "T" that appears in the G
function in (1.6). Since

(1.7)    $\int_0^T z'(t)\,dt = z(t)\Big|_0^T = z(T) - z(0) = z(T)$    [by the initial condition]
         $= G[T, y(T)]$    [by (1.6)]

the functional in (1.3), G[T, y(T)], can be replaced by the integral in (1.7) in
the new variable z(t). The integrand, z'(t) = dG[t, y(t)]/dt, is easily recognized
as a special case of the function F[t, z(t), z'(t)], with the arguments t
and z(t) absent; that is, the integral in (1.7) still falls into the general form

of the objective functional (1.1). Thus we have transformed a problem of

Mayer into a standard problem. Once we have found the optimal z path, the

optimal y path can be deduced through the relationship in (1.6).
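The conversion from a problem of Mayer to a standard problem can be checked numerically. In the sketch below, the state path y(t) = t^2 and the terminal-value function G(t, y) = t*y are hypothetical choices (picked so that z(0) = G[0, y(0)] = 0 holds); the code only confirms that the integral of z'(t) reproduces G[T, y(T)] for that one example.

```python
# Sketch: verify that integral_0^T z'(t) dt equals G[T, y(T)] when z(t) = G[t, y(t)]
# and z(0) = 0. The functions y and G are assumed examples, not from the text.

def y(t):
    return t**2                  # hypothetical state path

def G(t, y_value):
    return t * y_value           # hypothetical terminal-value function

def z(t):
    return G(t, y(t))            # new variable, defined as in (1.6)

def z_prime(t, h=1e-6):
    # Central-difference approximation of z'(t).
    return (z(t + h) - z(t - h)) / (2 * h)

def integrate(f, a, b, n=2000):
    # Midpoint rule on n equal subintervals.
    step = (b - a) / n
    return sum(f(a + (k + 0.5) * step) for k in range(n)) * step

T = 2.0
mayer_value = G(T, y(T))                      # functional in the form (1.3)
standard_value = integrate(z_prime, 0.0, T)   # same value in the form (1.1)
print(mayer_value, standard_value)
```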

By a similar procedure, we can convert a problem of Bolza into a

standard problem; this will be left to the reader. An economic example of

this type of problem can be found in Sec. 3.4. In view of this convertibility,

we shall deem it sufficient to couch our discussion primarily in terms of the

standard problem, with the objective functional in the form of an integral.

EXERCISE 1.3

1 In a so-called "time-optimal problem," the objective is to move the state

variable from a given initial value y(O) to a given terminal value y(T) in

the least amount of time. In other words, we wish to minimize the

functional V[y] = T - 0.

(a) Taking it as a standard problem, write the specific form of the F

function in (1.1) that will produce the preceding functional.

(b) Taking it as a problem of Mayer, write the specific form of the G

function in (1.3) that will produce the preceding functional.

2 Suppose we are given a function D(t) which gives the desired level of the

state variable at every point of time in the interval [0, T ]. All deviations

from D(t), positive or negative, are undesirable, because they inflict a

negative payoff (cost, pain, or disappointment). To formulate an appropriate

dynamic minimization problem, which of the following objective functionals
would be acceptable? Why?

(a) $\int_0^T [y(t) - D(t)]\,dt$

(b) $\int_0^T [y(t) - D(t)]^2\,dt$

(c) $\int_0^T [D(t) - y(t)]^3\,dt$

(d) $\int_0^T |D(t) - y(t)|\,dt$

3 Transform the objective functional (1.4) of the problem of Bolza into the
format of the standard problem, as in (1.1) or (1.2). [Hint: Introduce a new
variable z(t) = G[t, y(t)], with initial condition z(0) = 0.]

4 Transform the objective functional (1.4) of the problem of Bolza into the
format of the problem of Mayer, as in (1.3) or (1.5). [Hint: Introduce a new
variable z(t) characterized by z'(t) = F[t, y(t), y'(t)], with initial condition
z(0) = 0.]

1.4 ALTERNATIVE APPROACHES TO

DYNAMIC OPTIMIZATION

To tackle the previously stated problem of dynamic optimization, there are

three major approaches. We have earlier mentioned the calculus of variations
and dynamic programming. The remaining one, the powerful modern
generalization of variational calculus, goes under the name of optimal
control theory. We shall give a brief account of each.

The Calculus of Variations

Dating back to the late 17th century, the calculus of variations is the

classical approach to the problem. One of the earliest problems posed is that

of determining the shape of a surface of revolution that would encounter

the least resistance when moving through some resisting medium (a surface

of revolution with the minimum area).1 Isaac Newton solved this problem
and stated his results in his Principia, published in 1687. Other mathematicians
of that era (e.g., John and James Bernoulli) also studied problems

of a similar nature. These problems can be represented by the following

general formulation:

(1.8)    Maximize or minimize    $V[y] = \int_0^T F[t, y(t), y'(t)]\,dt$
         subject to              y(0) = A    (A given)
         and                     y(T) = Z    (T, Z given)

1This problem will be discussed in Sec. 2.2, Example 3.

Such a problem, with an integral functional in a single state variable, with

completely specified initial and terminal points, and with no constraints, is

known as the fundamental problem (or simplest problem) of calculus of

variations.

In order to make such problems meaningful, it is necessary that the

functional be integrable (i.e., the integral must be convergent). We shall

assume this condition is met whenever we write an integral of the general

form, as in (1.8). Furthermore, we shall assume that all the functions that

appear in the problem are continuous and continuously differentiable. This

assumption is needed because the basic methodology underlying the calculus
of variations closely parallels that of the classical differential calculus.
The main difference is that, instead of dealing with the differential dx that
changes the value of y = f(x), we will now deal with the "variation" of an
entire curve y(t) that affects the value of the functional V[y]. The study of

variational calculus will occupy us in Part 2.

Optimal Control Theory

The continued study of variational problems has led to the development of

the more modern method of optimal control theory. In optimal control

theory, the dynamic optimization problem is viewed as consisting of three

(rather than two) types of variables. Aside from the time variable t and the

state variable y(t), consideration is given to a control variable u(t). Indeed,

it is the latter type of variable that gives optimal control theory its name

and occupies the center of stage in this new approach to dynamic optimization.

To focus attention on the control variable implies that the state

variable is relegated to a secondary status. This would be acceptable only if

the decision on a control path u(t) will, once given an initial condition on y,

unambiguously determine a state-variable path y(t) as a by-product. For

this reason, an optimal control problem must contain an equation that

relates y to u :

    $\frac{dy}{dt} = f[t, y(t), u(t)]$

Such an equation, called an equation of motion (or transition equation or

state equation), shows how, at any moment of time, given the value of the

state variable, the planner's choice of u will drive the state variable y over

time. Once we have found the optimal control-variable path u*(t), the

equation of motion would make it possible to construct the related optimal

state-variable path y*(t).
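How a chosen control path and an initial condition jointly pin down the state path can be sketched numerically. The forward-Euler scheme, the helper name `simulate_state`, and the particular equation of motion y' = u with constant control u = 2 are assumptions made for illustration only.

```python
# Sketch: build the state path y(t) from a control path u(t), an initial
# condition y(0), and an equation of motion y'(t) = f[t, y(t), u(t)].
# Forward-Euler stepping is an assumed, deliberately simple scheme.

def simulate_state(f, u, y0, T, n=1000):
    """Return a list of (t, y) points approximating the state path on [0, T]."""
    dt = T / n
    t, y = 0.0, y0
    path = [(t, y)]
    for _ in range(n):
        y += f(t, y, u(t)) * dt   # the control value drives the state forward
        t += dt
        path.append((t, y))
    return path

# Hypothetical example: equation of motion y' = u, control held at u(t) = 2,
# initial state y(0) = 1, planning horizon T = 3.
path = simulate_state(lambda t, y, u: u, lambda t: 2.0, y0=1.0, T=3.0)
terminal_time, terminal_state = path[-1]
print(terminal_time, terminal_state)
```

With a different control path the same routine produces a different state path, which is the sense in which the state variable is a by-product of the control decision.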

The optimal control problem corresponding to the calculus-of-variations
problem (1.8) is as follows:

(1.9)    Maximize or minimize    $V[u] = \int_0^T F[t, y(t), u(t)]\,dt$
         subject to              $y'(t) = f[t, y(t), u(t)]$
                                 y(0) = A    (A given)
         and                     y(T) = Z    (T, Z given)

Note that, in (1.9), not only does the objective functional contain u as an

argument, but it has also been changed from V[y] to V[u]. This reflects the
fact that u is now the ultimate instrument of optimization. Nonetheless,
this control problem is intimately related to the calculus-of-variations problem
(1.8). In fact, by replacing y'(t) with u(t) in the integrand in (1.8), and
adopting the differential equation y'(t) = u(t) as the equation of motion, we
immediately obtain (1.9).

The single most significant development in optimal control theory is

known as the maximum principle. This principle is commonly associated

with the Russian mathematician L. S. Pontryagin, although an American

mathematician, Magnus R. Hestenes, independently produced comparable

work in a Rand Corporation report in 1949.2 The power of that
principle lies in its ability to deal directly with certain constraints on the
control variable. Specifically, it allows the study of problems where the
admissible values of the control variable u are confined to some closed,
bounded convex set $\mathcal{U}$. For instance, the set $\mathcal{U}$ may be the closed interval
[0, 1], requiring $0 \le u(t) \le 1$ throughout the planning period. If the marginal
propensity to save is the control variable, for instance, then such a constraint,
$0 \le s(t) \le 1$, may very well be appropriate. In sum, the problem

addressed by optimal control theory is (in its simple form) the same as in
(1.9), except that an additional constraint,

    $u(t) \in \mathcal{U}$    for $0 \le t \le T$

may be appended to it. In this light, the control problem (1.9) constitutes a
special (unconstrained) case where the control set $\mathcal{U}$ is the entire real line.
The detailed discussion of optimal control theory will be undertaken in
Part 3.

2The maximum principle is the product of the joint efforts of L. S. Pontryagin and his
associates V. G. Boltyanskii, R. V. Gamkrelidze, and E. F. Mishchenko, who were jointly
awarded the 1962 Lenin Prize for Science and Technology. The English translation of their
work, done by K. N. Trirogoff, is The Mathematical Theory of Optimal Processes, Interscience,
New York, 1962.
Hestenes' Rand report is titled A General Problem in the Calculus of Variations with
Applications to Paths of Least Time. But his work was not easily available until he published a
paper that extends the results of Pontryagin: "On Variational Theory and Optimal Control
Theory," Journal of SIAM, Series A, Control, Vol. 3, 1965, pp. 23-48. The expanded version of
this work is contained in his book Calculus of Variations and Optimal Control Theory, Wiley,
New York, 1966.
Some writers prefer to call the principle the minimum principle, which is the more
appropriate name for the principle in a slightly modified formulation of the problem. We shall
use the original name, the maximum principle.

Dynamic Programming

Pioneered by the American mathematician Richard Bellman,3 dynamic

programming presents another approach to the control problem stated in

(1.9). The most important distinguishing characteristics of this approach

are two: First, it embeds the given control problem in a family of control

problems, with the consequence that in solving the given problem, we are

actually solving the entire family of problems. Second, for each member of

this family of problems, primary attention is focused on the optimal value of

the functional, V*, rather than on the properties of the optimal state path

y*(t) (as in the calculus of variations) or the optimal control path u*(t) (as

in optimal control theory). In fact, an optimal value function, assigning an
optimal value to each individual member of this family of problems, is used
as a characterization of the solution.

All this is best explained with a specific discrete illustration. Referring

to Fig. 1.6 (adapted from Fig. 1.1), let us first see how the "embedding" of a

problem is done. Given the original problem of finding the least-cost path

from point A to point Z, we consider the larger problem of finding the

least-cost path from each point in the set {A, B, C, . . . , Z} to the terminal

point Z. There then exists a family of component problems, each of which is

associated with a different initial point. This is, however, not to be confused

with the variable-initial-point problem in which our task is to select one

initial point as the best one. Here, we are to consider every possible point as

a legitimate initial point in its own right. That is, aside from the genuine

initial point A, we have adopted many pseudo initial points (B, C, etc.). The

problem involving the pseudo initial point Z is obviously trivial, for it does

not permit any real choice or control; it is being included in the general

problem for the sake of completeness and symmetry. But the component

problems for the other pseudo initial points are not trivial. Our original
problem has thus been "embedded" in a family of meaningful problems.

FIGURE 1.6 (state on the vertical axis; stages 1 through 5 on the horizontal axis)

3Richard E. Bellman, Dynamic Programming, Princeton University Press, Princeton, NJ,
1957.

Since every component problem has a unique optimal path value, it is
possible to write an optimal value function

    $V^* = V^*(i)$    (i = A, B, ..., Z)

which says that we can determine an optimal path value for every possible

initial point. From this, we can also construct an optimal policy function,

which will tell us how best to proceed from any specific initial point i, in

order to attain V*(i) by the proper selection of a sequence of arcs leading

from point i to the terminal point Z.

The purpose of the optimal value function and the optimal policy

function is easy to grasp, but one may still wonder why we should go to the

trouble of embedding the problem, thereby multiplying the task of solution.

The answer is that the embedding process is what leads to the development

of a systematic iterative procedure for solving the original problem.

Returning to Fig. 1.6, imagine that our immediate problem is merely
that of determining the optimal values for stage 5, associated with the three
initial points I, J, and K. The answer is easily seen to be

(1.10)    $V^*(I) = 3$    $V^*(J) = 1$    $V^*(K) = 2$

Having found the optimal values for I, J, and K, the task of finding the

least-cost values V*(G) and V*(H) becomes easier. Moving back to stage 4

and utilizing the previously obtained optimal-value information in (1.10), we

can determine V*(G) as well as the optimal path GZ (from G to Z) as
follows:

(1.11)    $V^*(G) = \min\{\text{value of arc } GI + V^*(I),\ \text{value of arc } GJ + V^*(J)\}$
                  $= \min\{2 + 3,\ 8 + 1\} = 5$
          [The optimal path GZ is GIZ.]

The fact that the optimal path from G to Z should go through I is

indicated by the arrow pointing away from G; the numeral on the arrow

shows the optimal path value V*(G). By the same token, we find

(1.12)    $V^*(H) = \min\{\text{value of arc } HJ + V^*(J),\ \text{value of arc } HK + V^*(K)\}$
                  $= \min\{4 + 1,\ 6 + 2\} = 5$
          [The optimal path HZ is HJZ.]

Note again the arrow pointing from H toward J and the numeral on it. The

set of all such arrows constitutes the optimal policy function, and the set of

all the numerals on the arrows constitutes the optimal value function. With

the knowledge of V*(G) and V*(H), we can then move back one more stage

to calculate V*(D), V*(E), and V*(F), along with the optimal paths DZ, EZ,
and FZ, in a similar manner. And, with two more such steps, we will be

back to stage 1, where we can determine V*(A) and the optimal path AZ,

that is, solve the original given problem.
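The backward calculations in (1.10) through (1.12) can be sketched as a small program. Only the arc values quoted in the text (IZ = 3, JZ = 1, KZ = 2, GI = 2, GJ = 8, HJ = 4, HK = 6) are used, so the graph below covers stages 4 and 5 only; the function names and the dictionary layout are illustrative choices, not part of the text.

```python
# Sketch: backward induction on the stage-4 and stage-5 portion of Fig. 1.6.
# arcs[node][successor] = value of the arc from node to successor.
arcs = {
    "I": {"Z": 3}, "J": {"Z": 1}, "K": {"Z": 2},   # stage 5, as in (1.10)
    "G": {"I": 2, "J": 8},                          # stage 4, as in (1.11)
    "H": {"J": 4, "K": 6},                          # stage 4, as in (1.12)
}

def solve_backward(arcs, terminal):
    """Return (optimal value function, optimal policy function) for least cost."""
    value = {terminal: 0}
    policy = {}
    pending = set(arcs)
    while pending:
        # A node can be solved once all of its successors have known values.
        ready = [n for n in pending if all(s in value for s in arcs[n])]
        if not ready:
            raise ValueError("some arc leads to a node that can never be solved")
        for n in ready:
            best = min(arcs[n], key=lambda s: arcs[n][s] + value[s])
            value[n] = arcs[n][best] + value[best]
            policy[n] = best          # the "arrow" leaving node n
            pending.remove(n)
    return value, policy

def optimal_path(policy, start, terminal):
    """Follow the policy arrows from start until the terminal point is reached."""
    path = [start]
    while path[-1] != terminal:
        path.append(policy[path[-1]])
    return "".join(path)

value, policy = solve_backward(arcs, "Z")
print(value["G"], optimal_path(policy, "G", "Z"))  # 5 GIZ
print(value["H"], optimal_path(policy, "H", "Z"))  # 5 HJZ
```

Extending the `arcs` dictionary with the remaining arc values read off Fig. 1.6 would carry the same recursion back to point A.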

The essence of the iterative solution procedure is captured in Bellman's

principle of optimality, which states, roughly, that if you chop off the first

arc from an optimal sequence of arcs, the remaining abridged sequence

must still be optimal in its own right-as an optimal path from its own

initial point to the terminal point. If EHJZ is the optimal path from E to

Z, for example, then HJZ must be the optimal path from H to Z.

Conversely, if HJZ is already known to be the optimal path from H to Z,

then a longer optimal path that passes through H must use the sequence

HJZ at the tail end. This reasoning is behind the calculations in (1.11) and

(1.12). But note that in order to apply the principle of optimality and the

iterative procedure to delineate the optimal path from A to Z, we must find

the optimal value associated with every possible point in Fig. 1.6. This

explains why we must embed the original problem.

Even though the essence of dynamic programming is sufficiently clarified
by the discrete example in Fig. 1.6, the full version of dynamic programming
includes the continuous-time case. Unfortunately, the solution of
continuous-time problems of dynamic programming involves the more advanced
mathematical topic of partial differential equations. Besides, partial

differential equations often do not yield analytical solutions. Because of this,

we shall not venture further into dynamic programming in this book. The

rest of the book will be focused on the methods of the calculus of variations

and optimal control theory, both of which only require ordinary differential

equations for their solution.

EXERCISE 1.4

1 From Fig. 1.6, find V*(D), V*(E), and V*(F). Determine the optimal
paths DZ, EZ, and FZ.

2 On the basis of the preceding problem, find V*(B) and V*(C). Determine
the optimal paths BZ and CZ.

3 Verify the statement in Sec. 1.1 that the minimum cost of production for
the example in Fig. 1.6 (same as Fig. 1.1) is $14, achieved on the path
ACEHJZ.

4 Suppose that the arc values in Fig. 1.6 are profit (rather than cost) figures.
For every point i in the set {A, B, ..., Z}, find
(a) the optimal (maximum-profit) value V*(i), and
(b) the optimal path from i to Z.

PART 2

THE CALCULUS OF VARIATIONS

CHAPTER 2

THE FUNDAMENTAL PROBLEM OF THE CALCULUS OF VARIATIONS

We shall begin the study of the calculus of variations with the fundamental

problem:

(2.1)    Maximize or minimize    $V[y] = \int_0^T F[t, y(t), y'(t)]\,dt$
         subject to              y(0) = A    (A given)
         and                     y(T) = Z    (T, Z given)

The maximization and minimization problems differ from each other in the

second-order conditions, but they share the same first-order condition.

The task of variational calculus is to select from a set of admissible y

paths (or trajectories) the one that yields an extreme value of V[y ]. Since

the calculus of variations is based on the classical methods of calculus,

requiring the use of first and second derivatives, we shall restrict the set of

admissible paths to those continuous curves with continuous derivatives. A

smooth y path that yields an extremum (maximum or minimum) of V[y] is

called an extremal. We shall also assume that the integrand function F is

twice differentiable.

FIGURE 2.1

In locating an extremum of V[y], one may be thinking of either an
absolute (global) extremum or a relative (local) extremum. Since the calculus
of variations is based on classical calculus methods, it can directly deal
only with relative extrema. That is, an extremal yields an extreme value of
V only in comparison with the immediately "neighboring" y paths.

2.1 THE EULER EQUATION

The basic first-order necessary condition in the calculus of variations is the

Euler equation. Although it was formulated as early as 1744, it remains the

most important result in this branch of mathematics. In view of its importance
and the ingenuity of its approach, it is worthwhile to explain its

rationale in some detail.

With reference to Fig. 2.1, let the solid path y*(t) be a known

extremal. We seek to find some property of the extremal that is absent in

the (nonextremal) neighboring paths. Such a property would constitute a

necessary condition for an extremal. To do this, we need for comparison

purposes a family of neighboring paths which, by specification in (2.1), must

pass through the given endpoints (0, A) and (T, Z). A simple way of

generating such neighboring paths is by using a perturbing curve, chosen

arbitrarily except for the restrictions that it be smooth and pass through the

points 0 and T on the horizontal axis in Fig. 2.1, so that

(2.2)    p(0) = p(T) = 0

We have chosen for illustration one with relatively small p values and small
slopes throughout. By adding $\epsilon p(t)$ to y*(t), where $\epsilon$ is a small number, and
by varying the magnitude of $\epsilon$, we can perturb the y*(t) path, displacing it
to various neighboring positions, thereby generating the desired neighboring
paths. The latter paths can be denoted generally as

(2.3)    $y(t) = y^*(t) + \epsilon p(t)$    [implying $y'(t) = y^{*\prime}(t) + \epsilon p'(t)$]

with the property that, as $\epsilon \to 0$, $y(t) \to y^*(t)$. To avoid clutter, only one of
these neighboring paths has been drawn in Fig. 2.1.

The fact that both y*(t) and p(t) are given curves means that each
value of $\epsilon$ will determine one particular neighboring y path, and hence one
particular value of V[y]. Consequently, instead of considering V as a
functional of the y path, we can now consider it as a function of the
variable $\epsilon$, namely $V(\epsilon)$. This change in viewpoint enables us to apply the familiar
methods of classical calculus to the function $V = V(\epsilon)$. Since, by assumption,
the curve y*(t), which is associated with $\epsilon = 0$, yields an extreme
value of V, we must have

(2.4)    $\frac{dV}{d\epsilon}\Big|_{\epsilon=0} = 0$

This constitutes a defining property of the extremal. It follows that
$dV/d\epsilon = 0$ is a necessary condition for the extremal.
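The switch from a functional V[y] to an ordinary function of the perturbation size can be illustrated numerically. In the sketch below the integrand (arc length), the candidate extremal (a straight line from (0, 0) to (1, 1)), and the perturbing curve (a sine arch vanishing at both endpoints) are all assumed examples; the code approximates V at several perturbation sizes and its slope at zero by finite differences.

```python
import math

# Sketch: treat V as a function of eps along the family y(t) = y*(t) + eps * p(t).
# Integrand, extremal, and perturbing curve are hypothetical illustrations.
T = 1.0

def F(t, y, dy):
    return math.sqrt(1.0 + dy**2)          # assumed integrand: arc length

def y_star(t):
    return t                                # straight line from (0, 0) to (1, 1)

def dy_star(t):
    return 1.0

def p(t):
    return math.sin(math.pi * t / T)        # satisfies p(0) = p(T) = 0, as in (2.2)

def dp(t):
    return (math.pi / T) * math.cos(math.pi * t / T)

def V(eps, n=2000):
    """Midpoint-rule value of the functional on the perturbed path (2.3)."""
    h = T / n
    total = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        total += F(t, y_star(t) + eps * p(t), dy_star(t) + eps * dp(t))
    return total * h

def dV_deps(eps, h=1e-4):
    """Central-difference approximation of dV/d(eps)."""
    return (V(eps + h) - V(eps - h)) / (2 * h)

print(V(-0.1), V(0.0), V(0.1))   # V is smallest at eps = 0 for this example
print(dV_deps(0.0))              # numerically close to zero, as (2.4) requires
```

Because the straight line minimizes arc length, the printed slope at zero is negligible and the neighboring paths have larger values, in line with condition (2.4).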

As written, however, condition (2.4) is not operational because it

involves the use of the arbitrary variable $\epsilon$ as well as the arbitrary perturbing
function p(t). What the Euler equation accomplishes is to express this

necessary condition in a convenient operational form. To transform (2.4)

into an operational form, however, requires a knowledge of how to take the

derivatives of a definite integral.

Differentiating a Definite Integral

Consider the definite integral

(2.5)    $I(x) = \int_a^b F(t, x)\,dt$

where F(t, x ) is assumed to have a continuous derivative Fx(t, x) in the

time interval [a, b]. Since any change in x will affect the value of the F

function and hence the definite integral, we may view the integral as a

function of x-I(x). The effect of a change in x on the integral is given by

the derivative formula:

(2.6)    $\frac{dI}{dx} = \int_a^b F_x(t, x)\,dt$    [Leibniz's rule]

In words, to differentiate a definite integral with respect to a variable x

which is neither the variable of integration (t) nor a limit of integration

(a or b), one can simply differentiate through the integral sign with respect

to x.

The intuition behind Leibniz's rule can be seen from Fig. 2.2a, where
the solid curve represents F(t, x), and the dotted curve represents the
displaced position of F(t, x) after the change in x. The vertical distance
between the two curves (if the change is infinitesimal) measures the partial
derivative $F_x(t, x)$ at each value of t. It follows that the effect of the change
in x on the entire integral, dI/dx, corresponds to the area between the two
curves, or, equivalently, the definite integral of $F_x(t, x)$ over the interval
[a, b]. This explains the meaning of (2.6).

FIGURE 2.2 (panels a, b, and c: the curve F(t, x) plotted against t over the interval [a, b])

The value of the definite integral in (2.5) can also be affected by a

change in a limit of integration. Defining the integral alternatively to be

(2.7)    $J(b, a) = \int_a^b F(t, x)\,dt$

we have the following pair of derivative formulas:

(2.8)    $\frac{\partial J}{\partial b} = F(t, x)\Big|_{t=b} = F(b, x)$

(2.9)    $\frac{\partial J}{\partial a} = -F(t, x)\Big|_{t=a} = -F(a, x)$

In words, the derivative of a definite integral with respect to its upper limit
of integration b is equal to the integrand evaluated at t = b; and the
derivative with respect to its lower limit of integration a is the negative of
the integrand evaluated at t = a.

In Fig. 2.2b, an increase in b is reflected in a rightward displacement

of the right-hand boundary of the area under the curve. When the displacement
is infinitesimal, the effect on the definite integral is measured by the
value of the F function at the right-hand boundary, F(b, x). This provides
the intuition for (2.8). For an increase in the lower limit, on the other hand,
the resulting displacement, as illustrated in Fig. 2.2c, is a rightward movement
of the left-hand boundary, which reduces the area under the curve.
This is why there is a negative sign in (2.9).

The preceding derivative formulas can also be used in combination. If,

for instance, the definite integral takes the form

(2.10)    $K(x) = \int_a^{b(x)} F(t, x)\,dt$

where x not only enters into the integrand function F, but also affects the
upper limit of integration, then we can apply both (2.6) and (2.8), to get the
total derivative

(2.11)    $\frac{dK}{dx} = \int_a^{b(x)} F_x(t, x)\,dt + F[b(x), x]\,b'(x)$

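The first term on the right, an integral, follows from (2.6); the second term, representing the chain $\dfrac{dK}{db(x)} \cdot \dfrac{db(x)}{dx}$, is based on (2.8).

EXAMPLE 1 The derivative of $\int_0^2 e^{-x}\,dt$ with respect to $x$ is, by Leibniz's rule,

$$\frac{d}{dx}\int_0^2 e^{-x}\,dt = \int_0^2 \frac{\partial}{\partial x} e^{-x}\,dt = \int_0^2 -e^{-x}\,dt$$

EXAMPLE 2 Similarly,

$$\frac{d}{dx}\int_2^3 x^t\,dt = \int_2^3 \frac{\partial}{\partial x} x^t\,dt = \int_2^3 t\,x^{t-1}\,dt$$

EXAMPLE 3 To differentiate $\int_0^{2x} 3t^2\,dt$ with respect to $x$, which appears in the upper limit of integration, we need the chain rule as well as (2.8). The result is

$$\frac{d}{dx}\int_0^{2x} 3t^2\,dt = \left[\frac{d}{d(2x)}\int_0^{2x} 3t^2\,dt\right]\frac{d(2x)}{dx} = 3(2x)^2 \cdot 2 = 24x^2$$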
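The differentiation rules used in Examples 2 and 3, and the combined formula (2.11), can be cross-checked numerically. The sketch below is only an illustration under stated assumptions: the evaluation point `x0`, the helper functions `integrate` and `derivative`, and the third test integral $K(x) = \int_0^{x^2} x t\,dt$ are hypothetical choices, not part of the text. For that third integral, (2.11) gives $dK/dx = \int_0^{x^2} t\,dt + (x \cdot x^2)(2x)$.

```python
import math

def integrate(f, lo, hi, n=20000):
    # Midpoint-rule approximation of the integral of f from lo to hi.
    h = (hi - lo) / n
    return h * math.fsum(f(lo + (i + 0.5) * h) for i in range(n))

def derivative(g, x, d=1e-4):
    # Central-difference approximation of g'(x).
    return (g(x + d) - g(x - d)) / (2 * d)

x0 = 1.7  # arbitrary evaluation point (an assumption)

# Example 2: x enters only the integrand, so (2.6) applies.
lhs2 = derivative(lambda x: integrate(lambda t: x**t, 2, 3), x0)
rhs2 = integrate(lambda t: t * x0**(t - 1), 2, 3)

# Example 3: x enters only the upper limit 2x, so the chain rule and (2.8) apply.
lhs3 = derivative(lambda x: integrate(lambda t: 3 * t**2, 0, 2 * x), x0)
rhs3 = 24 * x0**2

# Hypothetical K(x): x enters both the integrand and the upper limit, as in (2.11).
lhs_k = derivative(lambda x: integrate(lambda t: x * t, 0, x**2), x0)
rhs_k = integrate(lambda t: t, 0, x0**2) + (x0 * x0**2) * (2 * x0)

print(lhs2, rhs2)
print(lhs3, rhs3)
print(lhs_k, rhs_k)
```

In each printed pair, the first number is a brute-force derivative of the integral and the second is the value predicted by the corresponding formula.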


Development of the Euler Equation

For ease of understanding, the Euler equation will be developed in four

steps.

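Step i Let us first express $V$ in terms of $\epsilon$, and take its derivative. Substituting (2.3) into the objective functional in (2.1), we have

$$V(\epsilon) = \int_0^T F\big[t,\ \underbrace{y^*(t) + \epsilon p(t)}_{y(t)},\ \underbrace{y^{*\prime}(t) + \epsilon p'(t)}_{y'(t)}\big]\,dt \tag{2.12}$$

To obtain the derivative $dV/d\epsilon$, Leibniz's rule tells us to differentiate through the integral sign:

$$\frac{dV}{d\epsilon} = \int_0^T \frac{\partial F}{\partial \epsilon}\,dt = \int_0^T \left(\frac{\partial F}{\partial y}\frac{dy}{d\epsilon} + \frac{\partial F}{\partial y'}\frac{dy'}{d\epsilon}\right)dt = \int_0^T \big[F_y\,p(t) + F_{y'}\,p'(t)\big]\,dt \qquad [\text{by } (2.3)] \tag{2.13}$$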

Breaking the last integral in (2.13) into two separate integrals, and setting $dV/d\epsilon = 0$, we get a more specific form of the necessary condition for an extremal as follows:

$$\int_0^T F_y\,p(t)\,dt + \int_0^T F_{y'}\,p'(t)\,dt = 0 \tag{2.14}$$

While this form of necessary condition is already free of the arbitrary variable $\epsilon$, the arbitrary perturbing curve $p(t)$ is still present along with its derivative $p'(t)$. To make the necessary condition fully operational, we must also eliminate $p(t)$ and $p'(t)$.
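The step of differentiating through the integral sign in (2.13) can be illustrated numerically. The following sketch rests on several assumptions that are not in the text: a hypothetical integrand $F = y^2 + y'^2$, horizon $T = 1$, an arbitrary base path `y_base(t) = t**2` (not necessarily an extremal, so the derivative need not be zero), and the perturbing curve $p(t) = \sin \pi t$, which vanishes at both endpoints. It compares a finite-difference estimate of $dV/d\epsilon$ with the integral $\int_0^T [F_y\,p + F_{y'}\,p']\,dt$.

```python
import math

T = 1.0  # hypothetical planning horizon

def y_base(t):  return t**2                            # arbitrary smooth path (assumption)
def dy_base(t): return 2 * t                           # its time derivative
def p(t):       return math.sin(math.pi * t)           # perturbing curve, p(0) = p(T) = 0
def dp(t):      return math.pi * math.cos(math.pi * t)

def F(t, y, yp):    return y**2 + yp**2                # hypothetical integrand
def F_y(t, y, yp):  return 2 * y                       # partial derivative w.r.t. y
def F_yp(t, y, yp): return 2 * yp                      # partial derivative w.r.t. y'

def integrate(f, lo, hi, n=20000):
    # Midpoint-rule approximation of the integral of f from lo to hi.
    h = (hi - lo) / n
    return h * math.fsum(f(lo + (i + 0.5) * h) for i in range(n))

def V(eps):
    # The functional evaluated along the perturbed path y_base + eps * p.
    return integrate(lambda t: F(t, y_base(t) + eps * p(t),
                                 dy_base(t) + eps * dp(t)), 0.0, T)

def dV_formula(eps):
    # Right-hand side of (2.13): integral of F_y * p + F_y' * p'.
    def integrand(t):
        y, yp = y_base(t) + eps * p(t), dy_base(t) + eps * dp(t)
        return F_y(t, y, yp) * p(t) + F_yp(t, y, yp) * dp(t)
    return integrate(integrand, 0.0, T)

eps0, d = 0.3, 1e-4
dV_numeric = (V(eps0 + d) - V(eps0 - d)) / (2 * d)
print(dV_numeric, dV_formula(eps0))  # the two values should nearly agree
```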

Step ii To that end, we first integrate the second integral in (2.14) by parts, using the formula

$$\int_{t=a}^{t=b} v\,du = vu\,\Big|_{t=a}^{t=b} - \int_{t=a}^{t=b} u\,dv \qquad [u = u(t),\ v = v(t)] \tag{2.15}$$

Let $v \equiv F_{y'}$ and $u \equiv p(t)$. Then we have

$$dv = \frac{dv}{dt}\,dt = \frac{dF_{y'}}{dt}\,dt \qquad \text{and} \qquad du = \frac{du}{dt}\,dt = p'(t)\,dt$$

Substitution of these expressions into (2.15), with $a = 0$ and $b = T$, gives us

$$\int_0^T F_{y'}\,p'(t)\,dt = \Big[F_{y'}\,p(t)\Big]_0^T - \int_0^T p(t)\,\frac{d}{dt}F_{y'}\,dt = -\int_0^T p(t)\,\frac{d}{dt}F_{y'}\,dt \tag{2.16}$$

since the first term to the right of the first equals sign must vanish under assumption (2.2). Applying (2.16) to (2.14) and combining the two integrals therein, we obtain another version of the necessary condition for the extremal:

$$\int_0^T p(t)\left[F_y - \frac{d}{dt}F_{y'}\right]dt = 0 \tag{2.17}$$
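The integration-by-parts identity (2.16) can also be verified on a concrete case. The sketch below assumes, purely for illustration, that $F_{y'}$ evaluated along some path equals $v(t) = 4t$ (for instance $F_{y'} = 2y'$ with $y = t^2$), takes $T = 1$, and uses the perturbing curve $p(t) = \sin \pi t$; none of these choices comes from the text. Because $p$ vanishes at both endpoints, the boundary term drops out and the two integrals should coincide.

```python
import math

T = 1.0  # hypothetical horizon

def v(t):  return 4 * t                            # stands in for F_y' along a path (assumption)
def dv(t): return 4.0                              # d/dt of F_y'
def p(t):  return math.sin(math.pi * t)            # perturbing curve with p(0) = p(T) = 0
def dp(t): return math.pi * math.cos(math.pi * t)

def integrate(f, lo, hi, n=20000):
    # Midpoint-rule approximation of the integral of f from lo to hi.
    h = (hi - lo) / n
    return h * math.fsum(f(lo + (i + 0.5) * h) for i in range(n))

lhs = integrate(lambda t: v(t) * dp(t), 0.0, T)    # integral of F_y' * p'
boundary = v(T) * p(T) - v(0.0) * p(0.0)           # [F_y' * p] evaluated from 0 to T
rhs = -integrate(lambda t: p(t) * dv(t), 0.0, T)   # minus integral of p * d/dt F_y'

print(lhs, boundary + rhs)
```

For these particular functions both sides can be worked out by hand as $-8/\pi$, which gives an independent check on the numerical values.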

Step iii Although $p'(t)$ is no longer present in (2.17), the arbitrary $p(t)$ still remains. However, precisely because $p(t)$ enters in an arbitrary way, we may conclude that condition (2.17) can be satisfied only if the bracketed expression $[F_y - dF_{y'}/dt]$ is made to vanish for every value of $t$ on the extremal; otherwise, the integral may not be equal to zero for some admissible perturbing curve $p(t)$. Consequently, it is a necessary condition for an extremal that

$$F_y - \frac{d}{dt}F_{y'} = 0 \quad \text{for all } t \in [0, T] \qquad \text{[Euler equation]} \tag{2.18}$$

Note that the Euler equation is completely free of arbitrary expressions, and can thus be applied as soon as one is given a differentiable $F(t, y, y')$ function.

The Euler equation is sometimes also presented in the form

$$F_{y'} = \int F_y\,dt \tag{2.18'}$$

which is the result of integrating (2.18) with respect to $t$.
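Since (2.18) involves only $F$ and a candidate path, it lends itself to a mechanical check. The following is a rough sketch, not a definitive implementation: the function `euler_residual`, the step size `h`, and the test integrand $F = y^2 + y'^2$ are all hypothetical. It approximates $F_y - dF_{y'}/dt$ by central differences. For this integrand the Euler equation reads $2y - 2y'' = 0$, so $y = e^t$ should give a residual near zero, whereas $y = t^2$ should give $2t^2 - 4$.

```python
import math

def euler_residual(F, y, t, h=1e-3):
    """Approximate F_y - d/dt F_y' along the path y at time t (finite differences)."""
    def yp(s):
        # y'(s) by central difference.
        return (y(s + h) - y(s - h)) / (2 * h)

    def F_y(s):
        # Partial derivative of F with respect to its second argument.
        return (F(s, y(s) + h, yp(s)) - F(s, y(s) - h, yp(s))) / (2 * h)

    def F_yp(s):
        # Partial derivative of F with respect to its third argument.
        return (F(s, y(s), yp(s) + h) - F(s, y(s), yp(s) - h)) / (2 * h)

    # Total time derivative of F_y' along the path.
    dF_yp_dt = (F_yp(t + h) - F_yp(t - h)) / (2 * h)
    return F_y(t) - dF_yp_dt

def F(t, y, yp):
    # Hypothetical integrand used only for this illustration.
    return y**2 + yp**2

res_extremal = euler_residual(F, math.exp, 1.0)        # y = e^t satisfies y'' = y
res_other = euler_residual(F, lambda t: t**2, 1.0)     # y = t^2 does not

print(res_extremal)  # close to 0
print(res_other)     # close to 2*1**2 - 4 = -2
```

A residual that is not approximately zero at some $t$ rules the path out as an extremal; a near-zero residual at sampled points is only suggestive, because the approximation carries truncation error of order $h^2$.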

Step iv The nature of the Euler equation (2.18) can be made clearer when we expand the derivative $dF_{y'}/dt$ into a more explicit form. Because $F$ is a function with three arguments $(t, y, y')$, the partial derivative $F_{y'}$ should also be a function of the same three arguments. The total derivative $dF_{y'}/dt$ therefore consists of three terms:

$$\frac{dF_{y'}}{dt} = \frac{\partial F_{y'}}{\partial t} + \frac{\partial F_{y'}}{\partial y}\frac{dy}{dt} + \frac{\partial F_{y'}}{\partial y'}\frac{dy'}{dt} = F_{ty'} + F_{yy'}\,y'(t) + F_{y'y'}\,y''(t)$$

Substituting this into (2.18), multiplying through by $-1$, and rearranging, we arrive at a more explicit version of the Euler equation:

$$F_{y'y'}\,y''(t) + F_{yy'}\,y'(t) + F_{ty'} - F_y = 0 \quad \text{for all } t \in [0, T] \qquad \text{[Euler equation]} \tag{2.19}$$

This expanded version reveals that the Euler equation is in general a

second-order nonlinear differential equation. Its general solution will thus

contain two arbitrary constants. Since our problem in (2.1) comes with two

boundary conditions (one initial and one terminal), we should normally

possess sufficient information to definitize the two arbitrary constants and

obtain the definite solution.

EXAMPLE 4 Find the extremal of the functional

$$V[y] = \int_0^2 (12ty + y'^2)\,dt$$

with boundary conditions $y(0) = 0$ and $y(2) = 8$. Since $F = 12ty + y'^2$, we have the derivatives

$$F_y = 12t \qquad F_{y'} = 2y' \qquad F_{y'y'} = 2 \qquad \text{and} \qquad F_{yy'} = F_{ty'} = 0$$

By (2.19), the Euler equation is

$$2y''(t) - 12t = 0 \qquad \text{or} \qquad y''(t) = 6t$$

which, upon integration, yields $y'(t) = 3t^2 + c_1$, and

$$y^*(t) = t^3 + c_1 t + c_2 \qquad \text{[general solution]}$$

To definitize the arbitrary constants $c_1$ and $c_2$, we first set $t = 0$ in the general solution to get $y(0) = c_2$; from the initial condition, it follows that $c_2 = 0$. Next, setting $t = 2$ in the general solution, we get $y(2) = 8 + 2c_1$; from the terminal condition, it follows that $c_1 = 0$. The extremal is thus the cubic function

$$y^*(t) = t^3 \qquad \text{[definite solution]}$$
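As a cross-check on Example 4, the boundary-value problem $y''(t) = 6t$, $y(0) = 0$, $y(2) = 8$ can be solved numerically and compared with $t^3$. The sketch below swaps in a different technique from the one used in the text: instead of integrating analytically, it replaces $y''$ with a central second difference on a uniform grid and solves the resulting tridiagonal system with the Thomas algorithm. The function name `solve_bvp` and the grid size are hypothetical choices.

```python
def solve_bvp(n=40):
    """Finite-difference solution of y'' = 6t on [0, 2] with y(0) = 0, y(2) = 8."""
    h = 2.0 / n
    t = [i * h for i in range(n + 1)]
    m = n - 1  # number of interior unknowns y_1, ..., y_{n-1}

    # Interior equations: y[i-1] - 2*y[i] + y[i+1] = 6 * t[i] * h**2.
    rhs = [6 * t[i] * h * h for i in range(1, n)]
    rhs[0] -= 0.0    # move the known y(0) = 0 to the right-hand side
    rhs[-1] -= 8.0   # move the known y(2) = 8 to the right-hand side

    # Thomas algorithm for the tridiagonal stencil (1, -2, 1): forward sweep.
    cp = [0.0] * m
    dp = [0.0] * m
    cp[0] = 1.0 / -2.0
    dp[0] = rhs[0] / -2.0
    for i in range(1, m):
        denom = -2.0 - cp[i - 1]
        cp[i] = 1.0 / denom
        dp[i] = (rhs[i] - dp[i - 1]) / denom

    # Back substitution.
    y = [0.0] * m
    y[-1] = dp[-1]
    for i in range(m - 2, -1, -1):
        y[i] = dp[i] - cp[i] * y[i + 1]

    return t, [0.0] + y + [8.0]

t_grid, y_grid = solve_bvp()
max_err = max(abs(yi - ti**3) for ti, yi in zip(t_grid, y_grid))
print(max_err)  # largest deviation from t**3 on the grid
```

The central second difference of a cubic equals its second derivative exactly, so for this particular problem the grid values should match $t^3$ up to rounding error; for a general Euler equation the agreement would only be approximate.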

EXAMPLE 5 Find the extremal of the functional

$$V[y] = \int_1^5 \big[3t + (y')^{1/2}\big]\,dt$$

with boundary conditions $y(1) = 3$ and $y(5) = 7$. Here we have $F = 3t + (y')^{1/2}$. Thus,

$$F_y = 0 \qquad F_{y'} = \tfrac{1}{2}(y')^{-1/2} \qquad F_{y'y'} = -\tfrac{1}{4}(y')^{-3/2} \qquad \text{and} \qquad F_{yy'} = F_{ty'} = 0$$

The Euler equation (2.19) now reduces to

$$-\tfrac{1}{4}(y')^{-3/2}\,y'' = 0$$

The only way to satisfy this equation is to have a constant $y'$, in order that $y'' = 0$. Thus, we write $y'(t) = c_1$, which integrates to the solution

$$y^*(t) = c_1 t + c_2 \qquad \text{[general solution]}$$

To definitize the arbitrary constants $c_1$ and $c_2$, we first set $t = 1$ to find $y(1) = c_1 + c_2 = 3$ (by the initial condition), and then set $t = 5$ to find $y(5) = 5c_1 + c_2 = 7$ (by the terminal condition). These two equations give us $c_1 = 1$ and $c_2 = 2$. Therefore, the extremal takes the form of the linear function

$$y^*(t) = t + 2 \qquad \text{[definite solution]}$$

EXAMPLE 6

Find the extremal of the functional

$$V[y] = \int_0^5 (t + y^2 + 3y')\,dt$$

with boundary conditions $y(0) = 0$ and $y(5) = 3$. Since $F = t + y^2 + 3y'$, we have

$$F_y = 2y \qquad \text{and} \qquad F_{y'} = 3$$

By (2.18), we may write the Euler equation as $2y = 0$, with solution

$$y^*(t) = 0$$

Note, however, that although this solution is consistent with the initial condition $y(0) = 0$, it violates the terminal condition $y(5) = 3$. Thus, we

must conclude that there exists no extremal among the set of continuous