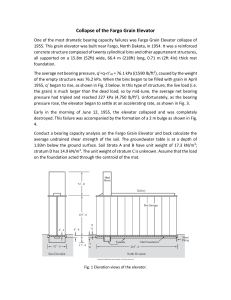

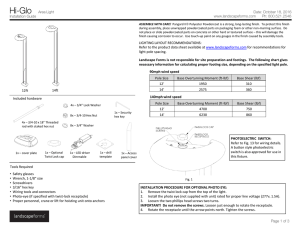

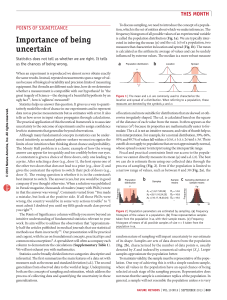

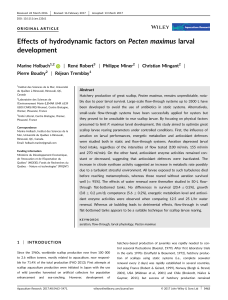

ALGORITHM FOR ESTIMATING LENGTH OF BENT OBJECTS

By Edmond W. Holroyd III¹

Downloaded from ascelibrary.org by Universidad Nacional De Ingenieria on 10/23/18. Copyright ASCE. For personal use only; all rights reserved.

ABSTRACT: A prototype algorithm was developed to measure the length of fish larvae, some of which were bent. The algorithm starts with the longest straight line spanning the object, then repeatedly segments the line as points are forced to lie along the centerline of the object. There is less than 5% length improvement with the transition from four to eight line segments for objects with a general length/width ratio of about 10. Most improvements in length were of less than one pixel. Additional segmentation to 16 or more lines may be appropriate for objects with a greater ratio, but the number of segments should not exceed the length/width ratio. The algorithm logic can be transferred to other programming languages and software packages for routine image analysis.

INTRODUCTION

Computer automation of labor-intensive measurements of length, width, and area is a common goal for many applications. Images can be recorded by photography, video, scanners, and digital cameras and imported into an image processing system on a computer. Software packages can then isolate the individual specimens in view and determine their areas and straight-line dimensions. For example, Jeffries et al. (1980) determine the greatest straight-line lengths between extreme perimeter pixels of zooplankton. Holroyd (1987) determines snow particle lengths by the extent of the perpendicular projections of perimeter pixels along a linear regression line. Such straight-line dimensions, however, can be insufficient for some objects, such as fish larvae. Whether live or preserved, larvae are usually not straight and present challenges for measuring their total lengths from nose to tail.

The length of a specimen along its spine can be measured manually in software by following the centerline of the specimen with a cursor. However, an automated measurement system is desired to reduce manual labor. Some software packages offer skeletonization (Russ 1992), by which the image is eroded by repeatedly removing edge pixels until only thin lines remain. However, irregularities in the image edges cause branching in the resulting lines. Special logic is then necessary for selecting those line segments that actually represent the centerline of the image. Furthermore, skeletonization lines end at the center of curvature of the ends of the image and do not extend to the image edges. Therefore, lengths determined from skeletonization are too short by an amount similar to the widths of the image near its end points.

Fig. 1 is a view of fish larvae provided by Darrel E. Snyder (Larval Fish Laboratory, Colorado State University) as a challenge to develop an algorithm for estimating lengths of bent objects. The common staple at the top provides a scale of 12.5 mm length. There is background noise in the image from debris and from the bottom of the dish, as well as faint shadows of the larvae to their right caused by directed illumination. The toning has been reversed to show opaque objects on a bright background. There are some nearly transparent parts of the larvae that are difficult to detect. In this report, the word “scene” will denote the entire view of Fig. 1, and the word “image” will denote one of the larvae viewed in isolation.

¹U.S. Bureau of Reclamation, P.O. Box 25007, D-8260, Denver, CO 80225-0007. E-mail: [email protected]

Note. Discussion open until September 1, 1999. To extend the closing date one month, a written request must be filed with the ASCE Manager of Journals. The manuscript for this technical note was submitted for review and possible publication on December 18, 1998. This technical note is part of the Journal of Computing in Civil Engineering, Vol. 13, No. 2, April, 1999.
©ASCE, ISSN 0887-3801/99/0002-0130–0134/$8.00 + $.50 per page. Technical Note No. 19833.

IMAGE PREPARATION

A threshold was selected to isolate the larvae from the background noise. Two small objects that were not larvae were allowed to remain in the scene so that they could later be excluded from consideration on the basis of their small area. Holes in the staple and in some images were filled by hand. Some software packages can do such isolation, exclusion, and filling automatically.

A new eight-bit scene was created in which the background was pure white (gray scale value = 255), and each larval image, including the two small objects, was filled with its own identification number, assigned consecutively beginning with 2 from top to bottom in order of encounter. Again, some image processing software packages (including Optimas, TNTmips, and TNTlite) can automatically isolate such larval images, produce a similar raster (computer picture), and color code the images by identification number. The algorithm assumes that such automatic preprocessing has already been done.

The result was exported into a file that was a simple array of bytes for processing by the algorithm. The algorithm for determining the bent larval lengths was written to examine that specific file only. It is adaptable for files of other dimensions and for objects of differing length/width ratios.

ALGORITHM STEPS

General Overview

The algorithm first scans through the scene for some basic information. For each larval image, it records the identification number; the minimum, average, and maximum line and column numbers; the span of the image in the horizontal and vertical directions; and the total area, all in pixel units. It writes these numbers to a file for future reference.

In the discussion to follow, variable names will be used to avoid repeated definitions and long descriptions; such names are somewhat arbitrary. The algorithm was developed specifically for the images in Fig. 1.
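The initial scan described in the General Overview can be sketched in a few lines. This is a minimal illustration in Python rather than the original FORTRAN; the function name `image_statistics`, the dictionary keys, and the list-of-lists scene layout are assumptions made for the example, and the span is taken here as maximum minus minimum plus one.

```python
BACKGROUND = 255  # pure white background value stated in the text


def image_statistics(scene):
    """Collect per-image statistics from a labeled scene.

    `scene` is a list of rows; background pixels equal 255 and every
    other pixel holds the identification number of its image.
    Line and column numbers are reported 1-based.
    """
    stats = {}
    for line_no, row in enumerate(scene, start=1):
        for col_no, value in enumerate(row, start=1):
            if value == BACKGROUND:
                continue
            s = stats.setdefault(value, {
                "line_min": line_no, "line_max": line_no,
                "col_min": col_no, "col_max": col_no,
                "line_sum": 0, "col_sum": 0, "area": 0,
            })
            s["line_min"] = min(s["line_min"], line_no)
            s["line_max"] = max(s["line_max"], line_no)
            s["col_min"] = min(s["col_min"], col_no)
            s["col_max"] = max(s["col_max"], col_no)
            s["line_sum"] += line_no
            s["col_sum"] += col_no
            s["area"] += 1  # area is a simple pixel count

    for s in stats.values():
        # Averages come from the running sums; spans from the extremes.
        s["line_avg"] = s.pop("line_sum") / s["area"]
        s["col_avg"] = s.pop("col_sum") / s["area"]
        s["line_span"] = s["line_max"] - s["line_min"] + 1
        s["col_span"] = s["col_max"] - s["col_min"] + 1
    return stats
```

The returned dictionary is keyed by identification number, so objects that are too small to be larvae could be skipped later by comparing their `area` entry with a scene-specific threshold.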
The larval images have a length/width ratio of approximately 10. Objects with a larger ratio (such as worms) can benefit from a greater number of iteration steps. Objects with a smaller ratio might use fewer iterations, approaching none at all for objects (such as eggs) that are unlikely to be bent.

Arrays

Two workspace arrays of an arbitrary 200 by 200 pixels were created. The dimensions need only be large enough to contain the largest image expected. The algorithm only scans the actual span of the object, so excess dimension is harmless in terms of computational speed. The first array, IM (the image), contains either zeros or the identification number of the image in the proper pixel locations. The second array, IR (image rim), contains ones at the larval edges and zeros elsewhere.

FIG. 1. Initial Image of Larvae, with 12.5 mm Staple for Scale

The algorithm operates by means of “boxes,” “line segments,” and “anchors.” The boxes contain parts of the segmented image. The anchors are the points along the box edges at which the line segments fold to follow the centerline of the image. This development extends to the creation of only eight boxes and lines. Additional segmentation to 16 or more is possible for long, thin objects having a greater length/width ratio. For eight line segments, there must be nine anchors.

Four arrays of dimension 8 keep track of box limits: LT (top line number), LB (bottom line number), KL (left column number), and KR (right column number). Two arrays of dimension 9 keep track of the anchor locations: LL (anchor line number) and KK (anchor column number). One array of dimension 8, RMAX, records the lengths of the line segments between the nine anchor points.
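As a minimal sketch of how the IR rim array relates to IM, the following Python function flags every image pixel that has at least one zero among its eight neighbors, which is the 3 by 3 test this note applies to the workspace. The function name `rim_of` and the list-of-lists layout are assumptions for illustration, as is the choice to treat positions outside the workspace as zero.

```python
def rim_of(im):
    """Return IR for a workspace IM given as a list of equal-length rows.

    A pixel is flagged with 1 when it is nonzero in IM and at least one
    of its eight neighbors is zero. Neighbors that fall outside the
    workspace are treated as zero (an assumption of this sketch).
    """
    n_lines, n_cols = len(im), len(im[0])
    ir = [[0] * n_cols for _ in range(n_lines)]
    for i in range(n_lines):
        for j in range(n_cols):
            if im[i][j] == 0:
                continue  # background pixels are never rim pixels
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ni, nj = i + di, j + dj
                    outside = not (0 <= ni < n_lines and 0 <= nj < n_cols)
                    if outside or im[ni][nj] == 0:
                        ir[i][j] = 1
    return ir
```

Because only rim pixels can be extreme points of an image, later searches for the farthest point from an anchor need to visit IR rather than every pixel of IM.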
The sum of the RMAX values is the total length of the larva.

There are some other “bookkeeping” arrays. One pair of dimension 8 records the line number (LX) or column number (KX) at which a box is subdivided and along which an anchor point is located. Such lines or columns are excluded from consideration in a search within the box for the farthest rim location from an anchor point. Another array of dimension 8, ISBENT, contains a flag to indicate whether any part of a line segment falls outside of the larval image. This array is for information only and does not affect the algorithm.

Extraction

The algorithm scans through the scene again to analyze each larval image, following the order of the identification numbers. Only the scene between the extreme line and column numbers for each image is examined. Only those parts of the scene having the desired identification number are copied into the upper left corner of the image workspace; the rest remains zeroed. For illustrative purposes, Fig. 2 shows the algorithm steps for larva 16 (which is in the middle of the left side of Fig. 1 and looks like a question mark), the bent shape of which is particularly challenging to analyze.

FIG. 2. Numbered Steps in Algorithm for Image 16, Giving Type of Product Produced in Each Step

The top and left extremities of the image workspace have line and column numbers of 1. The bottom and right extremities of the image have line and column numbers equal to the span of the image in those directions. These are the first box limits entered into their arrays. The first box is shown in step 0 in the upper left of Fig. 2.

The image workspace, IM, is scanned with a 3 by 3 array. If the center of this array is nonzero (and hence holds the identification number) and any of the eight neighbors is zero, the center position of the 3 by 3 array is flagged with a 1 as an edge indicator in the rim workspace, IR.

First Line

The rim image is scanned to identify the pair of pixels with the greatest separation. The distance between them, illustrated as the black line in step 1 in the lower left of Fig. 2, is the first RMAX. Their coordinates are the first and ninth entries in the anchor list, with the topmost pixel listed first. The line is then examined to see if any part falls outside of the larval image. If so, the ISBENT flag for the first line is set to 1.

Subsequent Segmentation

The algorithm then performs a number of segmentations to generate two, four, and eight line segments. A program loop index, KUT, ranges from 1 to 8, with steps of 8, 4, and 2 with each successive segmentation. KUT is therefore only 1 for the first segmentation, 1 and 5 for the second, and 1, 3, 5, and 7 for the third. For each KUT the algorithm divides a box into two, often unequal, parts and finds where the new box boundary crosses the centerline of the larval image. That location is a new anchor point. The algorithm needs to keep careful track of the order of the new boxes along the image, particularly for bent images.

Dividing Boxes

The height and width of a box are derived from the limits stored in the bookkeeping arrays. If they are equal, the algorithm checks whether the anchor points of the last line segment are on the top and bottom or on the sides of the box. If on the sides, the box is divided along a column so that the new anchors are also on the sides. Similarly, a horizontal division retains the top and bottom pattern. Otherwise, the algorithm determines which is larger, the width or the height of the box. The longer dimension is subdivided at the position of the average of the two anchor points, using integer truncation. This may produce new boxes of unequal dimensions, especially if one anchor was on a side edge and the other on a top or bottom edge. Successive new boxes are illustrated along the top of Fig. 2.

The extents of the image within each new box are then determined and carefully stored at the appropriate locations in the bookkeeping arrays. It is very important to make sure that the boxes remain in the correct order along the image from start to end. Interior anchor points are always retained. Those at the start or end of the series of line segments are movable. New intermediate anchor points are declared to be at the position of the center of the larval image along the cutting line. All interior anchor points are illustrated along the middle of Fig. 2. (The present version of the algorithm fails to protect against a break in continuity of the image along the cutting line, but that has not been a problem with this scene.)

New Lines

The new boxes are considered in the correct order from the start to the end of the image. If the box is not at the extreme end of an image, then it has two anchor points associated with it, and both are fixed in position along the centerline of the image. The length of the line segment is the distance between those anchor points. If the box is at the start or end of an image, then one anchor is fixed and the other is free to float to a new position. The rim image, IR, is scanned within the box to find the farthest point (new anchor) of the image from the fixed anchor point. Scanning is not permitted along the last cutting line (a line or column). The new line length is then recorded. It is this feature of the algorithm that makes the line segments fold around major bends of any orientation to find the extremities of the image. It also lets the line segments extend to the edge of the image, unlike skeletonization. The new line is then checked for any points falling outside the image, indicating that the image is still bent within that box. New line segments are illustrated along the bottom of Fig. 2.

Total Length

The length of a curved line is frequently computed as the sum of shorter straight line segments. As the number of segments increases and their lengths decrease, the total length approaches the real length. However, the practical limit of precision is the size of the pixels comprising the image.

Images that are generally straight present no challenge to the algorithm. Their initial lengths are similar to the final calculations. Bent images, however, are the reason for the algorithm development. Running the algorithm to 16 line segments might smooth out the upper right in Fig. 2, but there could be problems from having boxes not extending across the local width of an image. There would not be much gain in the length because it is already within a few pixels of the true length. Future algorithm development could investigate fitting a spline to the anchor points to get a curved fit that should follow the centerline of the image better than the straight line segments.

ILLUSTRATIONS

Fig. 3 shows in a smaller format the progress on all images in the scene. The images are shaded gray. Black lines are intermediate line segments, and the white lines are the final lines of eight segments. Images 4 and 11 were not analyzed because they were too small, as determined by an area threshold specific to the Fig. 1 scene. Images that were nearly straight have overlapping line segments that may not be resolvable. Bent images show the progress of the line folding. Sometimes the floating of the ends of the first and last line segments can be seen as the folding progresses. Only image 13 appears to have a strange behavior on the last folding.
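The Total Length reasoning, that a sum of straight segments approaches the true length of a curve as the segments multiply, can be checked on a shape whose length is known exactly. The sketch below is only an illustration: the semicircle test curve, the equally spaced anchors, and the names `polyline_length` and `semicircle_anchors` are assumptions, whereas the algorithm itself places anchors where box boundaries cross the image centerline.

```python
import math


def polyline_length(points):
    """Sum of straight-line distances between consecutive anchor points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def semicircle_anchors(radius, n_segments):
    """n_segments + 1 equally spaced points along a semicircle.

    This is a hypothetical stand-in for the centerline of a bent object.
    """
    return [
        (radius * math.cos(math.pi * k / n_segments),
         radius * math.sin(math.pi * k / n_segments))
        for k in range(n_segments + 1)
    ]


if __name__ == "__main__":
    radius = 50.0  # pixels, arbitrary
    true_length = math.pi * radius
    for n in (1, 2, 4, 8):
        length = polyline_length(semicircle_anchors(radius, n))
        shortfall = (true_length - length) / true_length
        print(f"{n} segments: length {length:.2f}, shortfall {shortfall:.1%}")
```

Each doubling of the segment count lengthens the polyline while keeping it shorter than the arc, and the gain shrinks with every step, which is the pattern the note reports for the larval images.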
In image 13, the particular shape of the broad head of the bent larva causes the last line segment to fold back rather than proceed to a rim position on the extreme right. The total length of the image is not significantly affected by this reversing fold. However, extending the algorithm to 16 line segments will probably produce a significant error for this particular image. It appears that the number of segments should not greatly exceed the length/width ratio of an image that is not bent because of the possibility that a final box may not contain opposite edges across an image.

FIG. 3. Line Fitting Progress on All Images in Scene

FINAL LENGTHS

As the algorithm progresses from one to eight line segments, the total length of each image usually increases. Fig. 4 shows a graph indicating the convergence toward the final (unknown) value. The vertical axis consists of three values of the number of line segments: 1, 2, and 4. The horizontal axis gives, on a logarithmic scale, the difference in length from the eight-segment value, expressed as a fraction of the eight-segment length. Straight images have points plotting on the left, indicating that there is not much improvement with the increased segmentation. Bent images plot to the right. There are sometimes significant jumps in total length as the line segments fold around a bend in the image. The greatest improvement going from four to eight segments is about 5% for image 16. For two-thirds of the images, the last improvement was less than one pixel, and so further improvement cannot be expected for them. It appears that further division of the images to 16 line segments would generally produce an improvement of less than 2%.

FIG. 4. Length Measurements Get Closer to Eight-Line Segment Value As Number of Segments Increases

DISCUSSION

An algorithm has been developed that will approximate the length of larvae even if they are bent and in random orientations. It operates by folding line segments so that anchor points fall along the centerline of the image. The concept appears simple, but the program logic requires careful bookkeeping of box and line segment coordinates in the proper order.

Lengths converge rapidly to a “final” value with eight line segments for these particular images. It appears that the number of segments should not exceed the general length/width ratio of the unbent objects. Neither should the number approach the pixel length of the object, because pixels cannot be subdivided. It is suspected that excess segmentation will start to produce irregularities of measurement of images with unusual shapes. Boxes may no longer extend across the local width of the images. Side bulges, like those in image 15, may fold the lines away from the general center. The reverse folding of image 13 should be taken as a warning against further segmentation of images of this particular length-to-width ratio.

This algorithm appears general in nature and could be used for other natural and artificial objects that are long but possibly bent. Some diverse possibilities are plankton, worms, algal strands, wood pulp fibers, elbow macaroni, and oxbow lakes.

The algorithm is not a full package and needs to be integrated into processes already available in commercial software systems. The algorithm coding was in FORTRAN, but the logic should transfer to the more popular varieties of C. The image processing routines in MIPS (Map and Image Processing System, from MicroImages, Inc., Lincoln, Nebr.) were used to examine the algorithm progress and prepare the resulting figures.
MicroImages' more modern TNTmips and free TNTlite multipurpose software could incorporate this algorithm.

ACKNOWLEDGMENTS

Encouragement was received from Straton Spyropoulos, formerly of Optimas Corp., and from Darrel E. Snyder of the Larval Fish Laboratory, Colorado State University, to develop the algorithm for incorporation into the Optimas software. Steve Hiebert of the Bureau of Reclamation provided review and further encouragement.

APPENDIX. REFERENCES

Holroyd, E. W. III. (1987). “Some techniques and uses of 2D-C habit classification software for snow particles.” J. Atmospheric and Oceanic Technol., 4(3), 498–511.

Jeffries, H. P., Sherman, K., Maurer, R., and Katsinis, C. (1980). “Computer-processing of zooplankton samples.” Estuarine perspectives, Victor S. Kennedy, ed., Academic, San Diego, 303–316.

Russ, J. C. (1992). The image processing handbook. CRC, Boca Raton, Fla., 315–318.