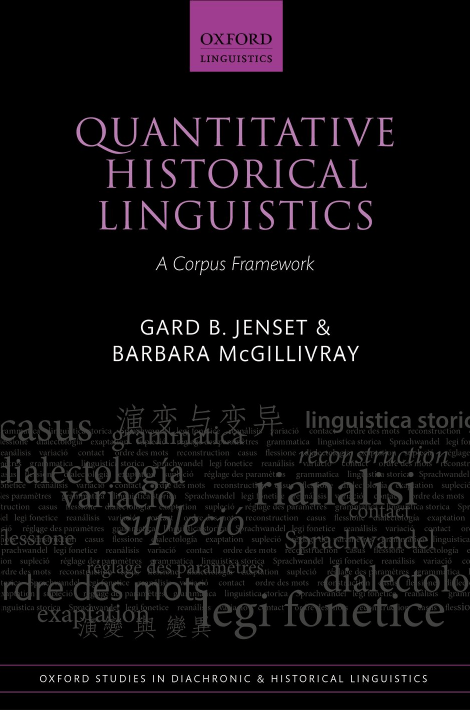

Quantitative Historical Linguistics - A Corpus Framework
