(Nios II microprocessor-based acceleration of block

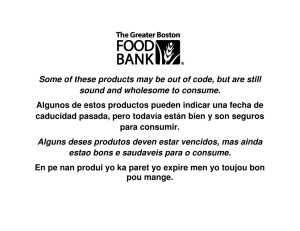
