
Implementation Matters: Programming Best Practices for Evolutionary Algorithms

J.J. Merelo, G. Romero, M.G. Arenas, P.A. Castillo, A.M. Mora, and J.L.J. Laredo

Dpto. de Arquitectura y Tecnología de Computadores. Univ. of Granada, Spain

{jmerelo,gustavo,mgarenas,pedro,amorag,juanlu}@geneura.ugr.es

Abstract. While a lot of attention is usually devoted to the study of different components of evolutionary algorithms or to the creation of heuristic operators, little effort is directed at how these algorithms are actually implemented. However, an efficient implementation is essential to obtain good performance in any application, to the point that the performance improvements obtained by changes in implementation are usually much bigger than those obtained by algorithmic changes, and they also scale much better. In this paper we present and apply the usual methodologies for performance improvement to evolutionary algorithms, and show which implementation options yield the best results for a certain problem configuration and which ones scale better when features such as population or chromosome size increase.

1 Introduction

The design of evolutionary algorithms (EAs) usually includes a methodology for making them as efficient as possible. Efficiency is measured using metrics such as the number of evaluations to solution, implicitly seeking to reduce running time. However, the same amount of attention is not given to making the implementation as efficient as possible, even though small changes in it can have a much bigger impact on the overall running time than any algorithmic improvement. This lack of attention to the actual implementation of the proposed algorithms results in scientific programming being, on average, of lower quality than what is usually found in companies [1] or in released software.

It can be argued that the time devoted to an efficient implementation would be better spent pursuing scientific innovation or a precise description of the algorithm. However, the methodology for reducing program running time is well established in computer science: there are several static and dynamic analysis tools, called monitors, that look at memory use and running time, so that it can be established how much memory and time the program takes; profilers are then used to find which parts of it (variables, functions) are responsible for that. Once this methodology has been included in the design process of scientific software, it need not take much more time than, say, running statistical tests. In the same way that these tests establish scientific accuracy, an efficient implementation makes results better, more easily reproducible and easier to understand.

This work has been supported in part by the CEI BioTIC GENIL (CEB09-0010) Programa CEI del MICINN (PYR-2010-13) project, the Junta de Andalucía TIC3903 and P08-TIC-03928 projects, and the Jaén University UJA-08-16-30 project.

J. Cabestany, I. Rojas, and G. Joya (Eds.): IWANN 2011, Part II, LNCS 6692, pp. 333–340, 2011.
© Springer-Verlag Berlin Heidelberg 2011

Profiling the code that implements an algorithm also makes it possible to detect potential bugs, to check whether code fragments are executed as many times as they should be, and to determine which parts of the code can be optimized to obtain the greatest impact on performance. After profiling, a deeper knowledge of the structure underlying the algorithm allows a more efficient redesign, balancing algorithmic and computational efficiency; this knowledge also helps to find computational techniques that can be leveraged in the search for new evolutionary techniques. For instance, knowing how a sorting algorithm scales with population size would allow the EA designer to choose the best option for a particular population size, or to eliminate sorting completely by using a methodology that avoids it altogether, possibly finding new operators or selection techniques for EAs.
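As an illustration of avoiding a full sort: the elitism step of the baseline program only needs the two fittest individuals, and these can be found in a single linear pass instead of an O(n log n) sort of the whole population. The following is a minimal sketch under that assumption; the function name `best_two` is hypothetical and not part of the original program, and it assumes individuals are hash references with a numeric `fitness` key, as in the listings below.

```perl
use strict;
use warnings;

# Return the two fittest individuals of a population (hashrefs with a
# numeric 'fitness' key) in a single O(n) pass, without sorting.
# Assumes the population has at least two individuals.
sub best_two {
    my @population = @_;
    my ( $first, $second ) = @population[ 0, 1 ];
    ( $first, $second ) = ( $second, $first )
        if $second->{'fitness'} > $first->{'fitness'};
    for my $individual ( @population[ 2 .. $#population ] ) {
        if ( $individual->{'fitness'} > $first->{'fitness'} ) {
            # New best: the previous best becomes second
            ( $first, $second ) = ( $individual, $first );
        }
        elsif ( $individual->{'fitness'} > $second->{'fitness'} ) {
            $second = $individual;
        }
    }
    return ( $first, $second );
}

my @population = map { +{ string => "ind$_", fitness => $_ % 7 } } 1 .. 20;
my @best = best_two(@population);
print "$best[0]{fitness} $best[1]{fitness}\n";    # prints "6 6"
```

Whether this pays off depends on what else needs sorted order; the roulette wheel used in the baseline program does not require it.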

In this paper, we discuss the enhancements applied to a program written in Perl [2–4] that implements an evolutionary algorithm, and present a methodology for its analysis, showing the impact of identifying the bottlenecks in a program and eliminating them through common programming techniques. This impact can reach several orders of magnitude but, of course, it depends on the complexity of the fitness function and on the size of the problem it is applied to, as has been shown in papers such as the one by Laredo et al. [5]. In principle, the methodology and tools used are language-independent and are available for any programming language; however, the performance improvements and the options for changing a program will depend on the language used.

Starting from a baseline, straightforward implementation of an EA, we show techniques to measure the performance obtained with it, and how to derive a set of rules that improve its efficiency. Research papers do not usually detail such techniques, so best programming practices for EAs tend to remain hidden and cannot benefit the rest of the community. This work is an attempt to highlight those techniques and to encourage the community to reveal how published results are obtained.

The rest of this paper is structured as follows: Section 2 presents a review of the approaches found in the literature. Section 3 briefly describes the methodology followed in this study and discusses the results obtained using different techniques and versions of the program. Finally, conclusions and future work are presented in Section 4.

2 State of the Art

EA implementation has been the subject of many works by our group [6–10] and by others [11–14]. However, more effort has been devoted to looking for new hardware platforms to run EAs, such as GPUs [14] or specialized hardware [15], than to making the most of commonly available hardware.

As more powerful hardware becomes available every year, researchers have pursued the invention of new algorithms [16–18], forgetting how important efficiency is. There have been some attempts to calculate the complexity of EAs with the intention of improving it, either by avoiding random factors [19] or by changing the random number generator [20].

However, even on the most modern systems, EA experimentation can be an extremely long process, because every algorithm run can last several hours (or days), and it must be repeated several times in order to obtain accurate statistics. And that is only when the optimal set of parameters is already known: often the experiments must be repeated with different parameters to discover the optimal combination (systematic experimentation). So in the following sections we pay attention to implementation details, making improvements in an iterative process.

3 Methodology, Experiments and Results

The initial version of the program is taken from [2] and is shown in Tables 1 and 2. It implements a canonical EA with fitness-proportional (roulette-wheel) selection, a two-individual elite, mutation and crossover. The problem used is MaxOnes (also called OneMax) [21], where the function to optimize is simply the number of ones in a bit string, with chromosome length varying from 16 to 512. The initial population has 32 individuals, and the algorithm runs for 100 generations. The experiments are performed with different chromosome and population sizes, since the algorithms implemented in the program have different complexity with respect to those two parameters. Each run has been repeated 30 times for statistical accuracy.

Running time in user space (as opposed to wallclock time, which includes time spent in other user and system processes) is measured each time a change is made.
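A minimal sketch of how user-space CPU time can be measured from inside Perl and averaged over repeated runs, as in the experiments described above. It relies on the core built-in `times`, whose first return value is the user CPU time of the current process; the helper name `average_user_time` and the toy workload are assumptions for illustration, not the authors' measurement harness.

```perl
use strict;
use warnings;

# Run $code $runs times and return the average user CPU time per run,
# in seconds. times() returns (user, system, child user, child system);
# only the first value is kept, so time spent in other processes,
# which inflates wallclock measurements, is not counted.
sub average_user_time {
    my ( $code, $runs ) = @_;
    my $total = 0;
    for ( 1 .. $runs ) {
        my ($user_before) = times;
        $code->();
        my ($user_after) = times;
        $total += $user_after - $user_before;
    }
    return $total / $runs;
}

# Toy workload standing in for one run of the evolutionary algorithm
my $workload = sub {
    my $ones = 0;
    $ones += ( rand() > 0.5 ) for 1 .. 100_000;
    return $ones;
};
printf "Average user time: %.4f s\n", average_user_time( $workload, 30 );
```

The resolution of `times` is platform-dependent, so very short runs may report zero; averaging over many repetitions mitigates this.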

In these experiments, the first improvement tested is a fitness cache [16, 2], that is, a hash (Perl's associative array) that stores the fitness values already computed, so that each distinct chromosome is evaluated only once.
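A minimal sketch of such a cache wrapped around the OneMax evaluation of Table 2, assuming chromosomes are hash references whose bit string is stored under the `string` key, as in the listings. The names `%fitness_of`, `cached_fitness` and `$evaluations` are hypothetical and not taken from the authors' code.

```perl
use strict;
use warnings;

my %fitness_of;         # bit string => previously computed fitness
my $evaluations = 0;    # number of actual fitness computations

# OneMax fitness with a cache: the fitness is computed only for
# bit strings that have not been seen before.
sub cached_fitness {
    my $chromosome = shift;
    my $string = $chromosome->{'string'};
    unless ( exists $fitness_of{$string} ) {
        $evaluations++;
        my $ones = 0;
        $ones += substr( $string, $_, 1 ) for 0 .. length($string) - 1;
        $fitness_of{$string} = $ones;
    }
    return $chromosome->{'fitness'} = $fitness_of{$string};
}

my @population = map { +{ string => $_ } } qw(1100 1010 1100 1111 1010);
cached_fitness($_) for @population;
print "Evaluations: $evaluations\n";    # prints "Evaluations: 3"
```

Only three of the five chromosomes are distinct, so only three evaluations are performed; the gain grows with the cost of the fitness function.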

This change trades memory for speed: it increases speed, but also the memory needed to store the precomputed values. This is always a good option if there is plenty of memory available; but if memory use is not checked and swapping (virtual memory in other OSs) kicks in, performance may drop sharply, since parts of the program data start being swapped out to disk. However, a quick calculation beforehand will tell us whether we should worry about this, and turn the cache off if that is the case.
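The quick calculation mentioned above can be sketched as follows. With the two-individual elite of Table 1, each generation creates at most P - 2 new chromosomes for a population of size P, so after G generations the cache never holds more than P + G(P - 2) entries, nor more than 2^L distinct strings of length L. The per-entry overhead of 100 bytes is an assumed ballpark figure for a Perl hash entry, not a measured value; it could be checked for a particular Perl build, for example with the CPAN module Devel::Size. The function name `cache_bytes_bound` is hypothetical.

```perl
use strict;
use warnings;

# Upper bound on the memory used by a fitness cache, in bytes.
# $length: chromosome length in bits; $pop: population size;
# $generations: number of generations; $overhead: ASSUMED bytes of
# hash bookkeeping per entry (not a measured value).
sub cache_bytes_bound {
    my ( $length, $pop, $generations, $overhead ) = @_;
    # The two elite individuals are not re-evaluated each generation
    my $evaluated = $pop + $generations * ( $pop - 2 );
    my $distinct  = 2**$length;    # number of possible bit strings
    my $entries   = $evaluated < $distinct ? $evaluated : $distinct;
    return $entries * ( $length + $overhead );    # key bytes + overhead
}

for my $length ( 16, 512 ) {
    printf "L=%3d: at most %d bytes\n", $length,
        cache_bytes_bound( $length, 32, 100, 100 );
}
# prints "L= 16: at most 351712 bytes" and "L=512: at most 1855584 bytes"
```

Under these assumptions, even the longest chromosomes used here need only a couple of megabytes of cache for 32 individuals and 100 generations.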

It is also convenient to look for the fastest way of computing the fitness function, using language-specific data structures, functions and expressions.¹

¹ Changes can be examined in the code repository at http://bit.ly/bOk3z3
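As an example of a language-specific expression, in Perl the number of ones in a bit string can be obtained with the transliteration operator `tr`, which returns the number of characters it matched, instead of the character-by-character `substr` loop of Table 2. This is a sketch of one such change; it is not claimed to be the exact modification in the authors' repository, and the name `compute_fitness_tr` is hypothetical.

```perl
use strict;
use warnings;

# OneMax fitness: tr/1// counts the '1' characters in the string
# without modifying it (empty replacement list), replacing the
# explicit substr loop.
sub compute_fitness_tr {
    my $chromosome = shift;
    $chromosome->{'fitness'} = ( $chromosome->{'string'} =~ tr/1// );
    return $chromosome->{'fitness'};
}

my $chromosome = { string => '1011001110', fitness => undef };
print compute_fitness_tr($chromosome), "\n";    # prints 6
```

The counting loop moves from interpreted Perl into the interpreter's internal C code, which is why this kind of change tends to matter more as chromosome length grows.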

336

J.J. Merelo et al.

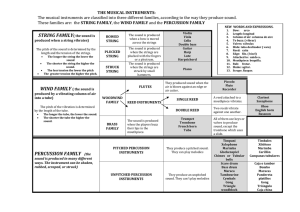

Table 1. First version of the program used in the experiments (main program). An evolutionary algorithm is implemented.

    use strict;
    use warnings;

    # Command-line parameters, with defaults
    my $chromosome_length = shift || 16;
    my $population_size   = shift || 32;
    my $generations       = shift || 100;

    my @population = map( random_chromosome($chromosome_length), 1..$population_size );
    map( compute_fitness( $_ ), @population );

    for ( 1..$generations ) {
        # Sort by decreasing fitness and keep the two best individuals (elite)
        my @sorted_population = sort { $b->{'fitness'} <=> $a->{'fitness'} } @population;
        my @best = @sorted_population[0,1];
        # Roulette wheel: expected number of copies of each individual
        my @wheel = compute_wheel( \@sorted_population );
        my @slots = spin( \@wheel, $population_size );
        my @pool;
        my $index = 0;
        do {
            my $p = $index++ % @slots;
            my $copies = $slots[$p];
            for (1..$copies) {
                push @pool, $sorted_population[$p];
            }
        } while ( @pool <= $population_size );
        @population = ();
        # mutate() returns a mutated copy, so its result must be kept
        @pool = map( mutate($_), @pool );
        # Each crossover yields two offspring; the elite fills the last two places
        for ( my $i = 0; $i < $population_size/2 - 1; $i++ ) {
            my $first  = $pool[ rand(@pool) ];
            my $second = $pool[ rand(@pool) ];
            push @population, crossover( $first, $second );
        }
        map( compute_fitness( $_ ), @population );
        push @population, @best;
    }

As can be seen in the results shown in Figure 1-left, the running time of the initial version grows more than linearly with chromosome length. For big problem instances, running time can become too long to be practical. For example, for chromosomes of length 16 the running times of the baseline version and the third version differ by a factor of two, while for chromosomes of length 256 the difference is an order of magnitude greater. This leads us to think that optimizing the EA implementation is more valuable than algorithmic changes. It must also be remarked that the code changes have been minimal.

To obtain further improvements, a profiler (one of the tools described in the introduction to this paper) has to be used. In Perl, Devel::DProf analyzes the time spent in each subroutine; however, a statement-by-statement analysis is needed here. Thus, the Devel::NYTProf Perl module (developed at the New York Times) is used. The results of applying this profiler show that operators like crossover and mutation are called very often and can be somewhat improved; however, the function that takes the most time is the one that sorts the population. Changing the default Perl sort, which implements quicksort [22], to another version using mergesort [23] yielded a small improvement, but using Sort::Key, the fastest sorting module available in Perl, yields the best results.
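A sketch of the sorting step of Table 1 rewritten with Sort::Key, whose `rnkeysort` function sorts numerically in descending order by a key that is computed once per element rather than once per comparison. Sort::Key is a CPAN module, not part of core Perl, so this sketch falls back to the built-in `sort` when it is not installed; the wrapper name `sort_by_fitness` is hypothetical, and calling `rnkeysort` with an explicit code reference is an assumption that relies on the module being loaded at run time.

```perl
use strict;
use warnings;

# Sort a population (hashrefs with a numeric 'fitness' key) by
# descending fitness. If Sort::Key is available, rnkeysort is called
# with the key generator as an explicit code reference (the module is
# loaded at run time, so its (&@) prototype does not apply here).
sub sort_by_fitness {
    my @population = @_;
    if ( eval { require Sort::Key; 1 } ) {
        return Sort::Key::rnkeysort( sub { $_->{'fitness'} }, @population );
    }
    # Fallback: built-in sort with an explicit comparator, as in Table 1
    return sort { $b->{'fitness'} <=> $a->{'fitness'} } @population;
}

my @population = map { +{ fitness => $_ } } ( 3, 16, 7, 11 );
print join( ' ', map { $_->{'fitness'} } sort_by_fitness(@population) ), "\n";
# prints "16 11 7 3"
```

In the main loop, `@sorted_population = sort_by_fitness(@population)` would replace the explicit `sort` call; the rest of the program is unaffected.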


Table 2. First version of the program used in the experiments (subroutines)

    # Normalized fitness of each individual (fitness-proportional selection)
    sub compute_wheel {
        my $population = shift;
        my $total_fitness = 0;
        map( $total_fitness += $_->{'fitness'}, @$population );
        my @wheel = map( $_->{'fitness'}/$total_fitness, @$population );
        return @wheel;
    }

    # Scale the wheel to the number of slots (expected copies per individual)
    sub spin {
        my ( $wheel, $slots ) = @_;
        my @slots = map( $_*$slots, @$wheel );
        return @slots;
    }

    # Random bit string of the given length; fitness not yet computed
    sub random_chromosome {
        my $length = shift;
        my $string = '';
        for (1..$length) {
            $string .= ( rand() > 0.5 ) ? 1 : 0;
        }
        return { string  => $string,
                 fitness => undef };
    }

    # Bit-flip mutation at a random position; returns a new chromosome
    sub mutate {
        my $chromosome = shift;
        my $clone = { string => $chromosome->{'string'}, fitness => undef };
        my $mutation_point = rand( length( $clone->{'string'} ) );
        substr( $clone->{'string'}, $mutation_point, 1,
                ( substr( $clone->{'string'}, $mutation_point, 1 ) eq 1 ) ? 0 : 1 );
        return $clone;
    }

    # Two-point crossover; returns two new chromosomes
    sub crossover {
        my ( $chrom_1, $chrom_2 ) = @_;
        my $chromosome_1 = { string => $chrom_1->{'string'} };
        my $chromosome_2 = { string => $chrom_2->{'string'} };
        my $length = length( $chromosome_1->{'string'} );
        my $xover_point_1 = int rand( $length - 1 );
        my $xover_point_2 = int rand( $length - 1 );
        if ( $xover_point_2 < $xover_point_1 ) {
            ( $xover_point_1, $xover_point_2 ) = ( $xover_point_2, $xover_point_1 );
        }
        $xover_point_2 = $xover_point_1 + 1 if ( $xover_point_2 == $xover_point_1 );
        my $span = $xover_point_2 - $xover_point_1 + 1;
        # Save the first parent's segment before overwriting it
        my $swap_segment = substr( $chromosome_1->{'string'}, $xover_point_1, $span );
        substr( $chromosome_1->{'string'}, $xover_point_1, $span,
                substr( $chromosome_2->{'string'}, $xover_point_1, $span ) );
        substr( $chromosome_2->{'string'}, $xover_point_1, $span, $swap_segment );
        return ( $chromosome_1, $chromosome_2 );
    }

    # OneMax fitness: number of ones in the bit string
    sub compute_fitness {
        my $chromosome = shift;
        my $unos = 0;    # "unos" = ones
        for ( my $i = 0; $i < length( $chromosome->{'string'} ); $i++ ) {
            $unos += substr( $chromosome->{'string'}, $i, 1 );
        }
        $chromosome->{'fitness'} = $unos;
    }


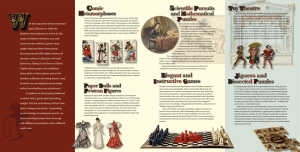

Fig. 1. Log-log plot of running time for different chromosome (left) and population (right) sizes. The solid line corresponds to the baseline version. (Left) The dashed line uses a cache, and the dot-dashed one changes the fitness calculation. (Right) The dashed line changes the fitness calculation, while the dot-dashed one uses the best-of-breed sorting algorithm for the population. Values are averages over 30 runs.

Figure 1-right shows how running time grows with population size for a fixed chromosome length of 128. The algorithm runs for 100 generations regardless of whether the solution is found or not. The EA behaves similarly to the previous analysis: the most efficient version, using Sort::Key, is an order of magnitude faster than the first attempt, and the difference grows with population size. Adding up both improvements, results almost two orders of magnitude better are obtained for the same problem size without changing the basic algorithm.

It should be noted that, since these improvements are algorithmically neutral, they do not have a noticeable impact on results, which are statistically indistinguishable from those obtained by the baseline program.

4 Conclusions and Future Work

This work shows how good programming practices and a deep knowledge of the data and control structures of a programming language can yield an improvement of up to two orders of magnitude in an evolutionary algorithm (EA). Our tests consider a well-known problem whose results can be easily extrapolated to others. Eliminating the bottlenecks found by profiling the implementation of an evolutionary algorithm can give better results than a new, and likely more complex, algorithm or a change of parameters in the existing one. A cache of evaluations can be used in a wide variety of EA problems. Moreover, a profiler can be applied to any implementation to detect bottlenecks and concentrate efforts on solving them.


From these experiments, we conclude that applying profilers to identify the bottlenecks of evolutionary algorithm implementations, followed by careful and informed programming to optimize those fragments of code, greatly improves the running time of evolutionary algorithms without degrading algorithmic performance.

Several other techniques can improve EA performance. Three of the best known and most commonly employed are multithreading, to take advantage of symmetric multiprocessing and multicore machines; message passing, to divide the work for execution on clusters; and vectorization, for execution on a GPU. In fact, almost every best practice in programming can be applied successfully to improve EAs. These techniques will be incorporated into the Algorithm::Evolutionary [16] Perl library. A thorough study of the interplay between implementation and the algorithmic performance of the implemented techniques will also be carried out.

References

1. Merali, Z.: Computational science: Error, why scientific programming does not compute. Nature 467(7317), 775–777 (2010)
2. Merelo-Guervós, J.J.: A Perl primer for EA practitioners. SIGEvolution 4(4), 12–19 (2010)
3. Wall, L., Christiansen, T., Orwant, J.: Programming Perl, 3rd edn. O'Reilly & Associates, Sebastopol (2000)
4. Schwartz, R.L., Phoenix, T., foy, B.D.: Learning Perl, 5th edn. O'Reilly & Associates (2008)
5. Laredo, J., Castillo, P., Mora, A., Merelo, J.: Exploring population structures for locally concurrent and massively parallel evolutionary algorithms. In: Computational Intelligence: Research Frontiers, pp. 2610–2617. IEEE Press, Los Alamitos (2008)
6. Merelo-Guervós, J.J.: Algoritmos evolutivos en Perl. Paper presented at the V Congreso Hispalinux (November 2002), http://congreso.hispalinux.es/ponencias/merelo/ae-hispalinux2002.html
7. Merelo-Guervós, J.J.: OPEAL, una librería de algoritmos evolutivos en Perl. In: Alba, E., Fernández, F., Gómez, J.A., Herrera, F., Hidalgo, J.I., Merelo-Guervós, J.J., Sánchez, J.M. (eds.) Actas primer congreso español algoritmos evolutivos, AEB 2002, Universidad de Extremadura, pp. 54–59 (February 2002)
8. Arenas, M., Foucart, L., Merelo-Guervós, J.J., Castillo, P.A.: JEO: a framework for Evolving Objects in Java. In: [24], pp. 185–191, http://geneura.ugr.es/pub/papers/jornadas2001.pdf
9. Castellano, J., Castillo, P., Merelo-Guervós, J.J., Romero, G.: Paralelización de evolving objects library usando MPI. In: [24], pp. 265–270
10. Keijzer, M., Merelo, J.J., Romero, G., Schoenauer, M.: Evolving objects: A general purpose evolutionary computation library. In: Collet, P., Fonlupt, C., Hao, J.-K., Lutton, E., Schoenauer, M. (eds.) EA 2001. LNCS, vol. 2310, pp. 231–244. Springer, Heidelberg (2002)
11. Fogel, D., Bäck, T., Michalewicz, Z.: Evolutionary Computation: Advanced Algorithms and Operators. Taylor & Francis, Abingdon (2000)
12. Setzkorn, C., Paton, R.: JavaSpaces–An Affordable Technology for the Simple Implementation of Reusable Parallel Evolutionary Algorithms. Knowledge Exploration in Life Science Informatics, 151–160


13. Rummler, A., Scarbata, G.: eaLib – A Java Framework for Implementation of Evolutionary Algorithms. Theory and Applications Computational Intelligence, 92–102
14. Wong, M., Wong, T.: Implementation of parallel genetic algorithms on graphics processing units. Intelligent and Evolutionary Systems, 197–216 (2009)
15. Schubert, T., Mackensen, E., Drechsler, N., Drechsler, R., Becker, B.: Specialized hardware for implementation of evolutionary algorithms. In: Genetic and Evolutionary Computation Conference, p. 369 (2000)
16. Merelo-Guervós, J.J., Castillo, P.A., Alba, E.: Algorithm::Evolutionary, a flexible Perl module for evolutionary computation. Soft Computing (2009), http://sl.ugr.es/000K (to be published)
17. Ventura, S., Ortiz, D., Hervás, C.: JCLEC: Una biblioteca de clases java para computación evolutiva. In: Primer Congreso Español de Algoritmos Evolutivos y Bioinspirados, pp. 23–30. Mérida, Spain (2002)
18. Ventura, S., Romero, C., Zafra, A., Delgado, J., Hervás, C.: JCLEC: a Java framework for evolutionary computation. Soft Computing-A Fusion of Foundations, Methodologies and Applications 12(4), 381–392 (2008)
19. Salomon, R.: Improving the performance of genetic algorithms through derandomization. Software - Concepts and Tools 18(4), 175 (1997)
20. Digalakis, J.G., Margaritis, K.G.: On benchmarking functions for genetic algorithms. International Journal of Computer Mathematics 77(4), 481–506 (2001)
21. Mühlenbein, H.: How genetic algorithms really work: I. mutation and hillclimbing. In: Männer, R., Manderick, B. (eds.) Proceedings of the Second Conference on Parallel Problem Solving from Nature (PPSN II), pp. 15–25. North-Holland, Amsterdam (1992)
22. Hoare, C.: Quicksort. The Computer Journal 5(1), 10 (1962)
23. Cole, R.: Parallel merge sort. In: 27th Annual Symposium on Foundations of Computer Science 1985, pp. 511–516 (1986)
24. UPV. In: Actas XII Jornadas de Paralelismo, UPV, Universidad Politécnica de Valencia (2001)