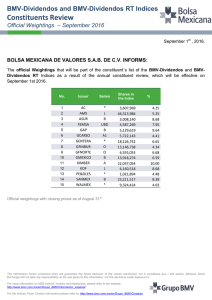

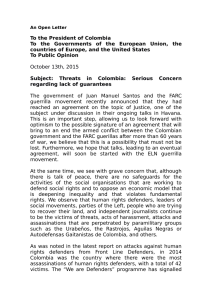

A basic model for evaluating R&D performance: theory and application in Italy

Mario Coccia

Italian National Research Council, Institute for Economic Researches on Firms and Growth (Ceris-Cnr), Via Avogadro, 8, Torino, Italy
[email protected]

Article in R&D Management, December 2002. DOI: 10.1111/1467-9310.00231

Public R&D laboratories now play a fundamental role in countries' development, supporting businesses as they face technological challenges in turbulent world scenarios. Measuring the performance of R&D organisations is crucially important to decisions about the level and direction of public funding for research and development. This research treats public laboratories as systems and develops a mathematical model based on the measurement of R&D activities with k indices. The score obtained from the research laboratories evaluation (relev) methodology synthesises financial, scientific and technological aspects in a single value. It is an indicator, for R&D managers and policy makers, of performance in relation to other research organisations or in a time series. The method is an instrument of strategic planning and can be used to improve individual activities and the overall performance of public R&D bodies.
1. Introduction

Public R&D bodies are organisations that carry out scientific research, technological services and administrative operations. Nowadays they are important players within the industrialised countries, since they support firms in facing the technological challenges presented by an ever more turbulent world scenario. The need to increase the efficiency and efficacy of these organisations places the elaboration of methodologies for measuring productivity in R&D very much to the forefront (Bozeman and Melkers, 1993; Georghiou, 1998; Noyons et al., 1999; Bozeman, 2001).

The economic literature on R&D evaluation is based above all on bibliometric and technometric indices. These indicators do not consider the financial resources available, although these are important for scientific production and for the personnel employed. Moreover, an evaluation must consider the tacit activity, which can be compared to the submerged part of an iceberg: it is not visible, but it is very substantial (Polanyi, 1966). The measurement and evaluation of public research bodies can be based on their input and output, considering three dimensions: cost, quantity and quality. These three aspects show the real added value that a research body delivers to its users.

The ideal system of R&D measurement, according to Brown and Svenson (1998), should:

* Focus on external vs. internal measurement (Patterson, 1983);
* Focus on outcomes, not on behaviour;
* Measure outputs by three dimensions: cost, quantity and quality;
* Be simple (6-7 indices);
* Be objective and not subjective.

R&D Management 31, 4, 2001. © Blackwell Publishers Ltd, 2001. Published by Blackwell Publishers Ltd, 108 Cowley Road, Oxford OX4 1JF, UK and 350 Main Street, Malden, MA 02148, USA.
The present work builds the relev method (research laboratory evaluation), which measures the activity of each research laboratory with k indices ($X_1, X_2, X_3, \dots, X_k$). The vector of the values ($x_1, x_2, x_3, \dots, x_k$) has a geometric representation in a multidimensional space. The indices are combined in a simple function whose output is a new variable (the score), which represents the performance measurement of the bodies evaluated. The linear function takes the following form:

$$\omega = \lambda_1 X_1 + \lambda_2 X_2 + \lambda_3 X_3 + \dots + \lambda_k X_k$$

where:
$\omega$ = the performance value of each unit (laboratory)
$\lambda_i$ = scalar values
$X_i$ = indices ($i = 1, 2, \dots, k$)

The idea of the relev method comes from the models used in financial analysis to identify the bankruptcy risk of corporations (Altman, 1968; Altman, 1983; Friedman, 1985). The relev and the financial models have a common denominator: they analyse organisations (firms or R&D labs) with a series of indices, which are the operators of a linear function. The differences between the two kinds of model are important. The models applied in finance calculate the values $\lambda_i$ with statistical techniques (discriminant analysis, Zeta analysis) and are used in the prediction of corporate bankruptcy. The result is a score and a cut-off point for separating the healthy firms from the sick firms (those with internal problems). The relev model is simpler because the values $\lambda_i$ are not calculated by statistical analysis. It is used for measuring R&D performance, and the scores classify the research organisations in a final performance ranking.

After this introduction, the present research describes research organisations as systems that develop a process of scientific production (Section 2). The indices used in the model are presented in Section 3, while Section 4 gives the theoretical description of the methodology built for measuring R&D performance.
An application (Section 5) to public research institutes located in Piemonte (Italy) that belong to different research areas (economics, environmental, technological, etc.) is used to show how the model works. Section 6 presents a countercheck of the model using the Delphi method. Finally, we draw conclusions in Section 7.

2. The system of the research organisation

According to Boulding (1985) a system is 'anything that is not chaos', whereas Forrester (1977) defines a system as a set made up of elements (material and non-material) interacting in order to reach a common goal. The definition of a system as 'a collection of parts organised for a purpose' is so general that any phenomenon or organisation becomes part of some system or other. Often the same part belongs to many systems simultaneously, both 'up and down' and 'across' (Coyle, 1977). Clearly, mere repetition of 'system' adds nothing to understanding, and we need a language for describing systems in order to use the concept effectively in real problems. The dynamics of firms and economies are real problems, and they cause much concern for the decision maker. It is, however, only helpful to treat such problems in system terms if we can describe the forces which cause the dynamics and study how these forces might be controlled, removed or reinforced in order to improve behaviour (Coyle, 1977).

The research bodies are particular systems, set up and run by man, which develop a process of scientific production mainly using public resources or private funds. The elements are connected to each other and they generate an output, basic and applied research, the raw material which is essential for increasing the wealth of nations. The research laboratories can be considered feedback systems, since they are influenced by past behaviour.
If we consider the research body as a system with its input, production processes (of scientific activity) and output, this can be expressed mathematically in the following form:

$$S_{srb} = f(P, A, O, \dots)$$

where:
$S_{srb}$ = system of the scientific research bodies
$P$ = research personnel
$A$ = assets
$O$ = organisation

The system of the research body is not identified by the sum of the three elements, but is the result of their combination according to certain rules, which form the operational rules of the system. The first component P (research personnel) is the most important in research units, because it is the core of the cognitive dynamics (the means of creation and diffusion of knowledge) towards the outside environment. Knowledge is born at an individual level and amplified within the organisational system (Nonaka, 1994); it is then diffused to develop the economic system. The component A (assets) represents all the available resources apart from human resources. This includes equipment, laboratories, libraries, credit and so on. Finally, the component O (organisation) affects both the personnel (P) and the material and non-material resources (A); it represents the process by which the economic forces acting on the system are coordinated in relation to the operations to be carried out in order to reach the goals.

Brown and Svenson (1998), consulting managers in Chicago, have worked on measuring the R&D process and they consider the R&D lab as a system, in particular:

1. The inputs are the resources of the system, which generate the cognitive process. They include the human factor, the information, the ideas, the equipment, the libraries, the organisations and the sources of financing. If the laboratory is in a company, the research work is carried out on the basis of specific requests from the marketing, production, planning divisions, etc. If it is public, the work will follow the scientific trend of the nation. According to Autio and Laamanen (1995), the indicators used for measuring the inputs fall into three types: monetary and physical resource inputs, capability inputs, and technology inputs.

2. The production process of a research body transforms the input into output through the realisation of research projects, training courses, technological services, etc.

3. The output of the research laboratories includes the publication of books and reports, projects, software, innovations and patents. These outputs represent explicit transfer of knowledge, but some studies have shown that research bodies, in particular public ones, carry out substantial tacit activities through the diffusion of knowledge with internal training and teaching courses (education-oriented technology transfer). The research bodies, thanks to the competence accumulated in specific areas and the availability of advanced equipment, also offer a series of innovative services to the users, such as consultancy, homologation, accreditation, calibration, certification, etc., which Coccia and Rolfo (1999) define as technology transfer in a broader sense. As in the case of input indicators, according to Autio and Laamanen (1995) three types of output indicators have been collected. These are: research and technology outputs, commercial outputs, and monetary and resource outputs. Geisler and Rubenstein (1989) add output indicators such as changes in production rates, productivity, profit and number of commitments made (monetary information and personnel exchange).

4. The recipient, which absorbs the output, differs according to whether it is a private or a public research body. In the first case the recipients are principally the divisions (production, marketing, etc.) or the companies of the group. In the case of public research bodies, the users are more widespread and include manufacturing industries, public administration bodies, international bodies, professionals and universities. Azzone and Maccarone (1997) consider it critical, when studying an innovation recipient, to measure the effects of the technology in order to understand the kinds of change that occur in the structure of the firm after its introduction.

5. The results of the research bodies are the variables that are important for the users. If the users are private, these may be profit maximisation and cost minimisation (Agrell and Martin West, 2001), income, new products, market shares, etc. If the users are public, they may be an increase in the cultural level (for example universities) or the solution to social problems (economic growth, reduction of unemployment, ...).

Figure 1. The production system of the research bodies. Source: adapted from Brown and Svenson (1998).
1. Input: personnel, equipment, funds, information.
2. Process: research activities, realisation of know-how, empirical checks, participation in conferences.
3. Output: innovations, patents, articles published, books written, projects completed, presentations made, training.
4. Recipients: companies, public administration institutions, universities and research centres, international organisations.
5. Results: increase in company competitiveness, increase in cultural level, increase in the spread of technologies and knowledge, increase in the wealth of the nation.

The R&D measurement methods described in the literature are individually varied and uniquely designed for particular situations. One general approach, according to Werner and Souder (1997), is to classify them into micro- and macro-level measurement techniques. Macro-level techniques focus on the impact of R&D on society as a whole.
Micro-level techniques focus on the impact of a firm's R&D on its own effectiveness (Geisler, 1994; Kostoff, 1993; Rubenstein and Geisler, 1991). The indicators in this article address both the macro level and the micro level. Many quantitative assessment methods are found in the literature (Pappas and Remer, 1985; Whitley and Frost, 1971) and some are discussed below.

3. Indices for measuring the research activities

The assessment of scientific output involves the calculation of indices indicating the production, productivity or impact of research groups (Autio and Laamanen, 1995; Luwel et al., 1999; Agrell and Martin West, 2001). The indices built in this work measure productivity (Rantanen, 2001), because in this way it is possible to compare performance among R&D labs. The production (number of publications, courses, etc.) is divided by the number of research personnel. A basic assumption underlying this approach is that scientific progress is made by scientists who group together to study particular research topics and build upon the earlier work of their colleagues (Price, 1963). If the R&D lab is likened to an organism, the researchers are the cells. They are the vital centre of all scientific production.

The indices that measure the scientific performance of research bodies are seven in number and are grouped in four categories. They consider all the aspects of the life of R&D bodies, from the financial to the technological and the scientific (Werner and Souder, 1997).

* The financial indices (see Whitley et al., 1998; Mechlin and Berg, 1980) measure two aspects: the dependence of the research bodies on centralised funding and their capacity for self-financing; the latter is also an indicator of the strength of explicit technological transfer.
* The indices of tacit transfer measure the capacity of the bodies to transfer knowledge in an informal form (Autio and Laamanen, 1995; Azzone and Maccarone, 1997).
* The bibliometric indices measure the capacity of the bodies to produce scientific publications, at both national and international level, on basic and applied research topics (Bozeman and Melkers, 1993; Narin, 1976; Moed et al., 1985).
* The technometric index shows the capacity of the bodies to produce products and processes through patent activities (Autio and Laamanen, 1995).

The indices, except the last one, are indicators of the general average productivity of the activities in public research organisations (Luwel et al., 1999). The logic of considering a set of different financial indices is already used in accounting theory for the evaluation of firms (Littleton and Zimmerman, 1962; Meigs and Larsen, 1976). Neely and Wilson (1992) argue that performance measurement systems involve a set of multidimensional performance measures. We will now examine the various indices in detail (from now on the terms 'laboratory' and 'research body/organisation' are used with the same meaning).

3.1. Financial indices

A = Index of centralised funding attributed to the research body

The index shows the financial dependence on public resources or on resources from headquarters. It is calculated as follows: the amounts are divided, in each laboratory, by the research personnel. Mathematically we have the formula:

$$\alpha_i = \frac{D_i}{P_i} \cdot 100$$

where:
$\alpha_i$ = index of financial dependence on the headquarters of the ith body
$D_i$ = $\sum_j$ (sum), in the ith body, of the j funds from the central body
$P_i$ = number of research personnel in the ith body
$i \in \{1, 2, \dots, n\}$, $j = 1, 2, \dots, m$

B = Index of self-financing (measure of explicit technological transfer)

The present index measures the body's capacity to gather external resources (self-financing) with technological transfer activities (patents, innovations, ...). The revenue generated by the activities of technological transfer is divided by the research personnel.
The index of self-financing has the following formula:

$$\beta_i = \frac{E_i}{P_i} \cdot 100$$

where:
$\beta_i$ = index of self-financing of the ith laboratory
$E_i$ = $\sum_j$ (sum), in the ith body, of the j incomes deriving from the transfer of technological activities to external subjects
$P_i$ = number of research personnel in the ith laboratory
$i \in \{1, 2, \dots, n\}$, $j = 1, 2, \dots, m$

3.2. Indices of tacit technological transfer

Tacit technological transfer is underestimated because it does not generate revenue, but it is very important in the scientific activities of research bodies. The following indicators measure the tacit activities:

* number of personnel in training within the research bodies
* number of teaching courses held by the researchers

X = Index of personnel in training

This index measures the number of trainees in the laboratory, which also indicates the capacity for tacit transfer of knowledge to the beneficiaries (scholarship holders, PhDs, undergraduates, etc.). This index has the following formula:

$$\chi_i = \frac{T_i}{P_i} \cdot 100$$

where:
$\chi_i$ = index of training level at the ith laboratory
$T_i$ = number of personnel in training at the ith laboratory
$P_i$ = number of research personnel
$i \in \{1, 2, \dots, n\}$

$\Delta$ = Index of teaching activity

The present index measures the teaching activities of a research organisation. Like the previous index, it is an indicator of the capacity for informal transfer of knowledge, here through the researchers' teaching activities at outside institutes (universities, post-graduate schools, etc.). The index ($\delta_i$) is built by dividing the number of courses by the research personnel. The formula of the index is:

$$\delta_i = \frac{C_i}{P_i} \cdot 100$$

where:
$\delta_i$ = index of teaching activities in the laboratory
$C_i$ = number of courses held by researchers in outside institutes
$P_i$ = number of research personnel
$i \in \{1, 2, \dots, n\}$

3.3. Bibliometric indices

E = Index of national publications

The present index is calculated by adding the number of articles published in national journals, books published, publications of the proceedings of national congresses and internal reports published by the body. The total number is divided by the total of the research personnel.

$$\varepsilon_i = \frac{PN_i}{P_i} \cdot 100$$

where:
$\varepsilon_i$ = index of the body's national publications
$PN_i$ = $\sum_j$ (sum), in the ith laboratory, of the national publications
$P_i$ = number of research personnel of the ith body
$i \in \{1, 2, \dots, n\}$, $j = 1, 2, \dots, m$

$\Phi$ = Index of the international publications

The present index is calculated by adding the number of articles published in international journals, books published by foreign publishers and publications of the proceedings of international congresses. The total number is divided by the total of the research personnel.

$$\varphi_i = \frac{PIT_i}{P_i} \cdot 100$$

where:
$\varphi_i$ = index of the international publications by the ith laboratory
$PIT_i$ = $\sum_j$ (sum), in the ith laboratory, of the j international publications
$P_i$ = number of research personnel in the laboratory
$i \in \{1, 2, \dots, n\}$, $j = 1, 2, \dots, m$

3.4. Technological index

$\Gamma$ = Technometric index

The index is the sum of the number of patents for inventions or discoveries (homologated and extended to different countries) by laboratory personnel.

$$\gamma_i = BR_i$$

where:
$\gamma_i$ = technometric index of the ith laboratory
$BR_i$ = $\sum_j$ (sum), in the ith laboratory, of the j patents
$i \in \{1, 2, \dots, n\}$, $j = 1, 2, \dots, m$

4. The relev method

The performance of public research organisations can be measured with techniques used for private laboratories, but many studies have shown that the traditional financially based performance measurement systems have failed to measure and integrate all the critical factors (Kaplan, 1983, 1984; Eccles, 1991; Fisher, 1992).
To deal with the new digital economy, new performance measurement systems have been proposed, such as activity-based costing (Cooper, 1989) and the Balanced Scorecard (Kaplan and Norton, 1996). Some researchers, rather than providing general frameworks for performance measurement system design, have preferred to propose criteria for the design of performance measurement systems (Globerson, 1985). As in the case of financial indicators, the classic methods used for R&D evaluation, such as bibliometric analysis (Garfield, 1979; Moed et al., 1985; Narin, 1976), co-word analysis (Braam et al., 1991; Callon et al., 1983) and impact factor (Luwel et al., 1999), are not sufficient to measure all the important aspects of public research bodies. Implementing a performance measurement system compatible with the above-mentioned techniques usually produces unmanageable performance reports. As reported by Neely et al. (1995), one of the issues on the future research agenda for performance measurement is the development of a technique to reduce the number of measures to a manageable set. Moreover, performance measurement systems usually involve a number of multidimensional performance measures (Neely and Wilson, 1992). Rangone (1996) pointed out that a problem which arises from that situation is the integration of several measures, expressed in heterogeneous units, into a single unit. In response to the issues identified, this study presents the relev method for quantifying the effect of the factors on performance.

The relev methodology evaluates the R&D performance of public research bodies (the central topic of the inquiry) on the basis of the measurement of k key indices representing the principal activities carried out. The seven indices previously described are to be considered elements of a set T associated with a research body. The basic model is kept fairly simple in order to contain the subjectivity within certain limits.
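The seven indices of Section 3 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class `LabData`, its field names, the function `compute_indices` and the dictionary keys are all hypothetical labels chosen here, listed in the order the indices are introduced (centralised funding, self-financing, training, teaching, national publications, international publications, patents). The default `scale` of 100 follows the factor printed in the index formulas; passing `scale=1.0` gives plain per-researcher ratios.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class LabData:
    """Raw figures for one laboratory; every field name here is hypothetical."""
    central_funds: Sequence[float]    # the j funds received from the central body
    transfer_income: Sequence[float]  # the j incomes from technology transfer
    trainees: int                     # personnel in training
    courses: int                      # courses taught at outside institutes
    national_pubs: int
    international_pubs: int
    patents: int
    research_personnel: int


def compute_indices(lab: LabData, scale: float = 100.0) -> Dict[str, float]:
    """Return the seven indices; the six ratios are per researcher, times `scale`."""
    if lab.research_personnel <= 0:
        raise ValueError("research_personnel must be positive")

    def per_researcher(total: float) -> float:
        return total / lab.research_personnel * scale

    return {
        "alpha": per_researcher(sum(lab.central_funds)),     # centralised funding
        "beta": per_researcher(sum(lab.transfer_income)),    # self-financing
        "chi": per_researcher(lab.trainees),                 # personnel in training
        "delta": per_researcher(lab.courses),                # teaching activity
        "epsilon": per_researcher(lab.national_pubs),        # national publications
        "phi": per_researcher(lab.international_pubs),       # international publications
        "gamma": float(lab.patents),  # technometric index: a count, not a ratio
    }
```

Dividing every activity by the same research personnel figure is what makes laboratories of different sizes comparable, which is the reason the text gives for measuring productivity rather than production.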
Before moving on to an explanation of the methodology we will give some definitions and the theoretical basis of the model.

Definitions

Evaluation is the most objective judgement possible, based on qualitative and quantitative measurements, assigned to an organisation on its efficiency and efficacy in pursuing its mission over a fixed period of time. The subjects to be evaluated may be individuals, groups, laboratories, projects or programmes, and it is possible to use various methodologies: publications, citation index, interviews, impact factor, econometric estimates, etc.

Performance is the result of the organisation in carrying out activities over an interval of time.

Score is the sum of the points assigned to a subject or a team. It gives the value achieved in pursuing the goals.

Knowledge scoring: a score which expresses the capacity of a research body for producing and transferring knowledge.

Presuppositions

The following hypotheses are the theoretical basis for the model:

* The research body is a system of interacting and coordinated material and non-material elements for the production of knowledge (purpose), the raw material for increasing the social well-being of nations;
* The weight of each index is the maximum value of that index in the vector, which is calculated on a series of organisations operating in the same research area, for instance chemistry. Therefore the weights vary among research areas; a similar characteristic has already been used in the design of performance measurement systems in companies by Maskell (1992) and Suwignjo et al. (2000);
* The maximum value of the index shows the best performance;
* The index of financial dependence on the central body has a non-positive effect on the performance, since these are resources not generated within the organisation, although it is an input;
* The capacity for self-financing, publications and patents, training personnel and teaching outside the Institute has a positive effect on the performance of the organisation. These operators are marked by the plus sign (+);
* Since international publications generate greater diffusion of knowledge, they are given double weighting with respect to those published nationally.

These hypotheses are based on the theoretical background (see Section 2) and on the usual practice in R&D organisations: for instance, international publications are generally considered more important than national publications.

The research laboratories evaluation (relev) methodology is built on the basis of the following steps. The first step is to calculate the seven indices for each research body and construct seven tables by ranks, one for each index, with values in decreasing order from the top to the bottom. Let $T_A$ be the table relative to index A $(\alpha_1, \alpha_2, \dots, \alpha_n)$, where $\alpha_i$ are the values obtained for each body, for $i = 1, \dots, n$ (e.g. nine). Each classification contains n values, one for each laboratory. The same table is also built for the other six indices: B $(\beta_1, \beta_2, \dots, \beta_n)$; X $(\chi_1, \chi_2, \dots, \chi_n)$; $\Delta$ $(\delta_1, \delta_2, \dots, \delta_n)$; E $(\varepsilon_1, \varepsilon_2, \dots, \varepsilon_n)$; $\Phi$ $(\varphi_1, \varphi_2, \dots, \varphi_n)$; $\Gamma$ $(\gamma_1, \gamma_2, \dots, \gamma_n)$.

Table $T_A$: laboratory i ($i = 1, \dots, n$) and absolute value $\alpha_i$.

The values of these indices are used in the construction of the linear function relev, which summarises quantitative, qualitative and cost aspects (second step).
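The first step, one table per index with values in decreasing order, can be sketched as follows. The function names `rank_table` and `rank_tables` and the laboratory labels in the usage note are hypothetical; the input is assumed to be a mapping from laboratory name to its index values.

```python
from typing import Dict, List, Tuple


def rank_table(values: Dict[str, float]) -> List[Tuple[str, float]]:
    """Sort (laboratory, index value) pairs in decreasing order of value."""
    return sorted(values.items(), key=lambda item: item[1], reverse=True)


def rank_tables(
    indices_by_lab: Dict[str, Dict[str, float]]
) -> Dict[str, List[Tuple[str, float]]]:
    """Build one decreasing-order table per index from {lab: {index: value}}."""
    index_names = list(next(iter(indices_by_lab.values())).keys())
    return {
        name: rank_table({lab: vals[name] for lab, vals in indices_by_lab.items()})
        for name in index_names
    }
```

For example, `rank_tables({"lab1": {"chi": 0.2}, "lab2": {"chi": 0.9}})["chi"][0]` is the top row of the table for that index; its value is the maximum that the hypotheses above use as the weight of the index.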
Model

We consider the seven indices, with the respective values of the n R&D bodies:

$A = (\alpha_1, \alpha_2, \dots, \alpha_n)$
$B = (\beta_1, \beta_2, \dots, \beta_n)$
$X = (\chi_1, \chi_2, \dots, \chi_n)$
$\Delta = (\delta_1, \delta_2, \dots, \delta_n)$
$E = (\varepsilon_1, \varepsilon_2, \dots, \varepsilon_n)$
$\Phi = (\varphi_1, \varphi_2, \dots, \varphi_n)$
$\Gamma = (\gamma_1, \gamma_2, \dots, \gamma_n)$

Definition

Let $i \in \{1, 2, \dots, n\}$, where n equals the number of R&D bodies, and let $A, B, X, \Delta, E, \Phi, \Gamma$ be the evaluation indices with the respective elements $(\alpha_i, \beta_i, \chi_i, \delta_i, \varepsilon_i, \varphi_i, \gamma_i)$. The research laboratories evaluation function relev is the following linear function:

$$\mathrm{relev}(i) = 3 - \frac{1}{\max A}\,\alpha_i + \frac{1}{\max B}\,\beta_i + \frac{1}{\max X}\,\chi_i + \frac{1}{\max \Delta}\,\delta_i + \frac{1}{\max E}\,\varepsilon_i + 2\,\frac{1}{\max \Phi}\,\varphi_i + \begin{cases} 1 & \text{if } \gamma_i > 0 \\ 0 & \text{if } \gamma_i = 0 \end{cases}$$

If

$X_1 = (1/\max A)\,\alpha_i$
$X_2 = (1/\max B)\,\beta_i$
...
$X_7 = (1 \text{ if } \gamma_i \geq 1;\ 0 \text{ if } \gamma_i = 0)$

then

$$\mathrm{relev}(i) = 3 - X_1 + X_2 + X_3 + X_4 + X_5 + 2X_6 + X_7$$

Property 1

If $i \in \{1, 2, \dots, n\}$ and $j \in \{1, 2, \dots, 7\}$, $\forall X_j \in \mathrm{relev}(i)$, then $X_j \in [0, 1] \subset \mathbb{R}$.

Property 2

If $i \in \{1, 2, \dots, n\}$ and $j \in \{1, 2, \dots, 7\}$, $\forall X_j \in \mathrm{relev}(i)$, then $\mathrm{relev}(i)_{\max} = 10$ ($\mathrm{relev}(i)_{\min} = 2$).

Property 3

If $i \in \{1, 2, \dots, n\}$ and $j \in \{1, 2, \dots, 7\}$, $\forall X_j \in \mathrm{relev}(i)$, then the average value of $\mathrm{relev}(i)$ is $(\max + \min)/2$.

A sub-model (0, 1) is applied to the vector $\Gamma$: it has the value 1 if the number of patents is at least 1, and the value 0 if there are no patents. The reason for this is to avoid penalising research bodies operating in the social or mathematical sciences, which do not produce patents as the other sciences (physics, chemistry, ...) do. Moreover, the operators $\{X_1, X_2, X_3, \dots, X_7\}$ have equal weight (apart from the doubling applied to international publications) in order to keep the model simple.

Finally, on the basis of the values deriving from the evaluation function relev $= \{\omega_1, \omega_2, \dots, \omega_n\}$, a classification table is drawn up in decreasing order from the top to the bottom, where each position represents the performance of the scientific activities of the research bodies. In the final table, neither the knowledge score itself nor the difference in value between, say, the first and the second position is important.
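A minimal sketch of the relev function, assuming the reading in which the financial-dependence operator is subtracted (the hypotheses give it a non-positive effect, and the stated bounds of 10 and 2 only hold with that sign). The names `relev_scores`, `final_ranking` and the dictionary keys are hypothetical. Each ratio index is divided by its maximum over the laboratories passed in, which the model intends to be laboratories of the same research area.

```python
from typing import Dict, List

# Hypothetical labels for the six ratio indices, in the order used by the model.
RATIO_INDICES = ("alpha", "beta", "chi", "delta", "epsilon", "phi")


def relev_scores(labs: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Knowledge score of each laboratory from its seven index values."""
    maxima = {k: max(v[k] for v in labs.values()) for k in RATIO_INDICES}

    def operator(values: Dict[str, float], key: str) -> float:
        # Index divided by its maximum, so every operator lies in [0, 1].
        return values[key] / maxima[key] if maxima[key] > 0 else 0.0

    scores = {}
    for name, v in labs.items():
        x1 = operator(v, "alpha")      # dependence on central funding (subtracted)
        x2 = operator(v, "beta")       # self-financing
        x3 = operator(v, "chi")        # personnel in training
        x4 = operator(v, "delta")      # teaching
        x5 = operator(v, "epsilon")    # national publications
        x6 = operator(v, "phi")        # international publications (double weight)
        x7 = 1.0 if v["gamma"] > 0 else 0.0  # (0, 1) sub-model for patents
        scores[name] = 3.0 - x1 + x2 + x3 + x4 + x5 + 2.0 * x6 + x7
    return scores


def final_ranking(scores: Dict[str, float]) -> List[str]:
    """Laboratories in decreasing order of knowledge score (ordinal output)."""
    return sorted(scores, key=lambda name: scores[name], reverse=True)
```

A laboratory with no central funding, the maximum on every other ratio index and at least one patent scores 10; one with the maximum central funding and zeros elsewhere scores 2, which matches the bounds stated in the properties.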
The knowledge score is used only for classifying the labs in ranks. Therefore, the final output of the relev method is a classification according to ordinal criteria.

5. An empirical application of the relev method on the CNR Institutes present in the Piemonte region

The relev methodology was tested on the institutes of the Italian National Research Council (CNR) located in Piemonte,¹ bodies recognised at an international level for their research activities in three major areas: technology and its industrial uses, economics and environment. The data have been taken from the balance sheets of the institutes for the 1995-99 period. Before analysing the results that emerged from the methodology, it is necessary, for greater clarity, to describe briefly the activities of the CNR and of the individual bodies in Piemonte.

The Consiglio Nazionale delle Ricerche Italiano (CNR) is the largest Italian public research institute, with the institutional objective of promoting, coordinating and organising research in order to encourage scientific and technological progress. The institutional scientific activity is mainly carried out through the institutes, research bodies that are totally dependent on the CNR. Nine institutes operate in Piemonte, a highly industrialised region in north-western Italy. The results described below refer to these institutes, which in the interests of brevity will be identified by their initials: IMGC (metrology), IMA (agricultural mechanisation and automation), ILM (metal machinability), IRSL (wool research), IFA (applied plant virology), IRPI (hydrogeological protection of the Po basin), III (hydrology), Ceris (industrial economics), ICGF (cosmogeophysics).

The relev method, as described, first calculates the tables of the indices. Since seven indices are considered here, there will be seven Tables (1a-7a), each showing the values ($\alpha$, $\beta$, $\chi$, $\delta$, $\varepsilon$, $\varphi$ and $\gamma$) of the various institutes (Appendix 1).
The performance evaluation function for the institutes (relev) is formed of eight operators. At the start of the function the value 3 is used to obtain maximum values of 10. In this specific case we have preferred to use as the denominator of each operator the maximum value of each index over all the research organisations, and not only over those operating in specific areas; the reason for this is the limited number of Institutes analysed (four in the technological field and five operating in five different areas). Checks have shown that this slight departure from the hypothesis of the model has little effect on the results of the application. The operators, from the second to the eighth, have a range between 0 (minimum) and 1 (maximum). The relev function calculated is:

$$\mathrm{relev}(i) = 3 - \frac{1}{34.9}\,\alpha_i + \frac{1}{32{,}386}\,\beta_i + \frac{1}{0.97}\,\chi_i + \frac{1}{1.09}\,\delta_i + \frac{1}{3.31}\,\varepsilon_i + 2\,\frac{1}{2.24}\,\varphi_i + \begin{cases} 1 & \text{if } \gamma_i > 0 \\ 0 & \text{if } \gamma_i = 0 \end{cases}$$

The knowledge score given by the function for each Institute is shown in Table 1 (final classification), where the institutes with a high performance are at the top and the research organisations with a low performance are at the bottom.

Table 2. Econometric analysis: variables.

| Institutes | Y: Score | X: Average number of employees¹ |
|---|---|---|
| IMGC | 6.25 | 137 |
| III | 6.4 | 62 |
| CERIS | 5.3 | 31 |
| IRPI | 5.2 | 31 |
| IMA | 5.2 | 36 |
| ICGF | 5.65 | 66 |
| IRSL | 4.3 | 20 |
| IFA | 4.6 | 42 |
| ILM | 4.2 | 21 |

¹ The average value is obtained by considering the total number of employees at the institute in the three year period (1995-99) including personnel in training.

The statistical analysis which follows aims to investigate whether the research performance (variable Y) depends on human resources (explanatory variable X). Table 2 shows the knowledge score in the second column and the average number of employees in the three-year period (1995-99) in the third column. The coefficient of correlation r between the two variables X and Y is equal to 0.75.
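The relationship between score and staff size can be recomputed from the Table 2 figures with a short least-squares sketch. It uses only the standard library; the function name `simple_regression` and the variable names are hypothetical, and the data are copied from Table 2 as printed.

```python
from math import sqrt
from typing import Dict, Sequence

# Table 2 as printed: average number of employees (X) and knowledge score (Y),
# in the order IMGC, III, CERIS, IRPI, IMA, ICGF, IRSL, IFA, ILM.
employees = [137, 62, 31, 31, 36, 66, 20, 42, 21]
scores = [6.25, 6.4, 5.3, 5.2, 5.2, 5.65, 4.3, 4.6, 4.2]


def simple_regression(x: Sequence[float], y: Sequence[float]) -> Dict[str, float]:
    """Ordinary least squares of y on x with a single explanatory variable."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    r = sxy / sqrt(sxx * syy)          # Pearson correlation coefficient
    r_squared = r * r
    return {
        "r": r,
        "r_squared": r_squared,
        "intercept": mean_y - slope * mean_x,
        "slope": slope,
        # F statistic with (1, n - 2) degrees of freedom
        "f_stat": r_squared / (1.0 - r_squared) * (n - 2),
    }


fit = simple_regression(employees, scores)
print({name: round(value, 3) for name, value in fit.items()})
```

With nine observations the fit has (1, 7) degrees of freedom, so a single large institute such as IMGC can weigh heavily on the slope; the sketch makes it easy to rerun the fit with an institute left out.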
The coefficient shows a strong positive correlation: as the variable X grows, the variable Y grows linearly. The regression analysis gave the following results: R² = 0.563, intercept = 4.436, slope = 0.016, F(1, 7) = 9.016, Sig. = 0.020. About 56% of the total variance is explained.

Table 1. Computation of the knowledge score of the relev function in CNR institutes.

| Institutes | Constant | Funding (A) | Self-financing (B) | Trainees (X) | Courses | National publications (E) | International publications (weight 2) | Patents | Knowledge score |
|---|---|---|---|---|---|---|---|---|---|
| III | 3 | 0.61 | 0.46 | 0.62 | 0.78 | 0.23 | 0.9 | 1 | 6.38 |
| IMGC | 3 | 0.49 | 0.58 | 0.57 | 1 | 0.25 | 0.34 | 1 | 6.25 |
| ICGF | 3 | 1 | 0.13 | 0.57 | 0.47 | 0.48 | 2 | 0 | 5.65 |
| CERIS | 3 | 0.99 | 0.15 | 0.92 | 0.96 | 1 | 0.24 | 0 | 5.28 |
| IRPI | 3 | 0.48 | 0.21 | 1 | 0.52 | 0.64 | 0.32 | 0 | 5.21 |
| IMA | 3 | 0.54 | 0.44 | 0.44 | 0.27 | 0.35 | 0.16 | 1 | 5.12 |
| IFA | 3 | 0.64 | 0.11 | 0.59 | 0.53 | 0.19 | 0.86 | 0 | 4.64 |
| IRSL | 3 | 0.8 | 1 | 0.70 | 0.03 | 0.27 | 0.08 | 0 | 4.28 |
| ILM | 3 | 0.49 | 0.35 | 0.44 | 0 | 0.24 | 0.66 | 0 | 4.20 |

6. The countercheck of the relev model

We now consider the final ranking of the mathematical model relev according to ordinal criteria, in the Pareto sense of the term, where the institutes with a higher level of performance are at the top and those with a lower level of performance are at the bottom (Table 3). The R&D performance of the nine CNR research organisations has also been measured with a qualitative approach: the Delphi method (Coccia, 2000). This method put clearly and accurately formulated questions to a group of researchers, so that they could offer qualitative estimates of the main research activities carried out in the institutes (the same seven activities measured with the indices in the relev method: financial, tacit transfer, bibliometric and technological). The main features of general performance are captured with average values and a learning process in rounds.

Table 3. Final ranking according to the two methods.

| Institutes | Rank, relev model | Rank, Delphi method |
|---|---|---|
| III | 1 | 1 |
| IMGC | 2 | 7 |
| ICGF | 3 | 4 |
| CERIS | 4 | 2 |
| IRPI | 5 | 6 |
| IMA | 6 | 9 |
| IFA | 7 | 8 |
| IRSL | 8 | 3 |
| ILM | 9 | 5 |
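The agreement between the two rankings of Table 3 can be quantified with Spearman's coefficient of rank correlation, which this section reports as about 0.32. The Python sketch below is a minimal check that uses the textbook formula for rankings without ties, ρ = 1 − 6Σd² / (n(n² − 1)); the dictionary and function names are illustrative, and the assignment of the two rank columns to the institutes follows the order in which Table 3 lists them.

```python
# Ranks from Table 3 (1 = best performance).
RELEV_RANK = {"III": 1, "IMGC": 2, "ICGF": 3, "CERIS": 4, "IRPI": 5,
              "IMA": 6, "IFA": 7, "IRSL": 8, "ILM": 9}
DELPHI_RANK = {"III": 1, "IMGC": 7, "ICGF": 4, "CERIS": 2, "IRPI": 6,
               "IMA": 9, "IFA": 8, "IRSL": 3, "ILM": 5}


def spearman_rho(rank_a, rank_b):
    """Spearman's rho for two complete rankings without ties."""
    n = len(rank_a)
    sum_d2 = sum((rank_a[name] - rank_b[name]) ** 2 for name in rank_a)
    return 1 - 6 * sum_d2 / (n * (n ** 2 - 1))


rho = spearman_rho(RELEV_RANK, DELPHI_RANK)
print(f"Spearman rho = {rho:.2f}")
```

The largest rank differences come from IMGC and IRSL, which the two methods place five positions apart.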
The Delphi score is used to classify the organisations in ranks, according to ordinal criteria as in the previous method (Table 3). The comparison between the two methods gives a Spearman's coefficient of rank correlation of about 0.32. Although the mathematical model is simple to compute, the ranks are approximately similar to those obtained by the Delphi method. The result of the relev function is a first robust indicator of general R&D performance. If an organisation, analysed as a system, has a low knowledge score compared with other R&D organisations, or if its score decreases over a time series (its position moves towards the lower part of the classification), the causes can be identified in the individual operators. The analysis of each operator, in turn, shows the real cause: for example, if the technological index (patents) decreases, this signals an organisational problem due to poor work satisfaction/involvement or to a fall in the number of good researchers.

7. Conclusions

The aim of the present study was to create a methodology for measuring the performance of public research organisations. The relev method has been built considering the whole of the activities (financial, scientific and technological) carried out in the research organisations. The method has a simple formulation, and the knowledge score indicates the performance of the research bodies in terms of quantitative, qualitative and cost aspects. Results are presented in a final ranking, where the institutes with a higher level of R&D performance are at the top and those with a lower level of performance are at the bottom. From the statistical analysis we note that the personnel component is very important, inasmuch as it contributes to increasing the performance of the research institutes. The human resources and their organisation create an environment which positively influences the spiral of knowledge creation (cognitive processes).
Moreover, the network relations between the researchers encourage the circulation of information and generate the phenomenon of cross-fertilisation, with benefits for the outside environment. A complete study of the importance of human resources would require the involvement of many disciplines, among them economics, psychology, law and sociology, and would be worth pursuing in the future.

The relev function is a basic model for measuring R&D performance, and the methodology needs to be improved. Its construction has emphasised the simplicity of the model, based on seven key indicators, and the minimising of subjectivity. In support of this formulation it must be noted that greater weight is given to quantity and to costs because the aim was to measure the performance of research bodies, whereas the qualitative aspect is more important when evaluating individual researchers. Future developments of the research could be: firstly, to find other indicators which would not complicate the model excessively and would keep the area of subjectivity within acceptable limits; secondly, to test the model on a wide sample of public and private organisations and to make comparisons among research bodies of the same area (physics, economics, chemistry, biology, etc.); finally, to calculate the weights that fit public and private research organisations with statistical techniques, for instance discriminant analysis.

Evaluation is the most objective judgement possible, based on qualitative and quantitative measurements, assigned to an organisation on its efficiency and efficacy in pursuing its mission over a fixed period of time.

References

Agrell, Per J. and West, Martin B. (2001) A caveat on the measurement of productive efficiency. International Journal of Production Economics, 69, 1-14.
Altman, E.I. (1983) Corporate Financial Distress: A Complete Guide to Predicting, Avoiding and Dealing with Bankruptcy. John Wiley & Sons.
Altman, E.I.
(1968) Financial ratios, discriminant analysis and the prediction of corporate bankruptcy. Journal of Finance, 9.
Altman, E.I., Aldeman, R. and Narayanan, P. (1977) Zeta analysis: a new model to identify bankruptcy risk of corporation. Journal of Banking and Finance, 6.
Autio, E. and Laamanen, T. (1995) Measurement and evaluation of technology transfer: review of technology transfer mechanisms and indicators. International Journal of Technology Management, 10, 7/8, 643-664.
Azzone, G. and Maccarone, P. (1997) The emerging role to lean infrastructures technology transfer: the case of innovation Plaza project. Technovation, 17, 7, 391-402.
Boulding, K.M. (1985) The World as a Total System. Beverly Hills: Sage.
Bozeman, B. (2001) Scientific and technical human capital: a capacity-based approach to research evaluation. Leveraging Research and Technology, R&D Management Conference Proceedings 2001, 7-9 February, Wellington (NZ).
Bozeman, B. and Melkers, J. (1993) Evaluating R&D impacts: methods and practice. Kluwer Academic.
Braam, R.R., Moed, H.F. and van Raan, A.F.J. (1991) Comparing and combination of co-citation and co-word clustering. Part I: structural aspects. Journal of the American Society for Information Science, 42, 233-251.
Broadus, R.N. (1987) Toward a definition of bibliometrics. Scientometrics, 12, 373-377.
Brown, M.G. and Svenson, R.A. (1998) Measuring R&D productivity. Research Technology Management, 41, 6, 30-35.
Callon, M., Courtial, J.P., Turner, W.A. and Bauin, S. (1983) From translations to problematic networks: an introduction to co-word analysis. Social Science Information, 22, 191-235.
Coccia, M. and Rolfo, S.
(1999) The technology transfer in the Italian national research council: the case of the institutes in the Piemonte region. 3rd International Conference on Technology Policy and Innovation, Session New Tools and Findings in Knowledge Management, University of Texas at Austin (USA), 31 August-2 September.
Coccia, M. (2000) The measure of R&D performance with a qualitative approach. Working Paper Ceris-Cnr, 5, 2, 1-51.
Cooper, R. (1989) The rise of activity based cost system: part IV - what do activity based cost system look like? Journal of Cost Management, Spring, 34-46.
Coyle, R.G. (1977) Management System Dynamics. John Wiley & Sons.
Eccles, R.G. (1991) Performance measurement manifesto. Harvard Business Review, 69, 131-137.
Fisher, J. (1992) Use of non-financial performance measures. Journal of Cost Management, 6, 31-38.
Friedman, J.H., Altman, E.I. and Duen-Li Kao (1985) Introducing recursive partitioning algorithm for financial classification: the case of financial distress. Journal of Finance, 1.
Forrester, J.W. (1977) Industrial Dynamics. Cambridge MA: MIT Press.
Garfield, E. (1979) Citation Indexing - its Theory and Applications in Science, Technology and Humanities. New York: Wiley.
Geisler, E. and Rubenstein, A.H. (1989) University-industry relations: a review of major issues. In: Link, A. and Tassey, G. (eds), Cooperative Research and Development: the Industry-university-government Relationship. Kluwer Academic Publishers, 43-62.
Geisler, E. (1994) Key output indicators in performance evaluation of research and development organisations. Technological Forecasting and Social Change, 47, 2, 12-20.
Georghiou, L. (1998) Issue in the evaluation of innovation and technology policy. Evaluation, 4, 1, 37-51.
Globerson, S. (1985) Issue in developing a performance criteria system for an organisation. International Journal of Production Research, 24, 4, 639-646.
Griliches, Z. (1990) Patent statistics as economic indicator.
A survey. Journal of Economic Literature, 28, 1661-1707.
Kaplan, R.S. (1983) Measuring manufacturing performance: a new challenge for managerial accounting research. The Accounting Review, 16, 4, 686-705.
Kaplan, R.S. (1984) Yesterday's accounting undermines production. Harvard Business Review, 62, 95-101.
Kaplan, R.S. and Norton, D.P. (1996) Translating Strategic into Action - the Balanced Scorecard. Boston: Harvard Business Review.
Kostoff, R.N. (1993) The Handbook of Research Impact Assessment. Arlington VA: Office of Naval Research.
Littleton, A.C. and Zimmerman, V.K. (1962) Accounting Theory: Continuity and Change. NJ: Englewood Cliffs.
Luwel, M., Noyons, C.M. and Moed, F. (1999) Bibliometric assessment of research performance in Flanders: policy background and implications. R&D Management, 29, 2, 133-141.
Maskell, B.H. (1992) Performance Measurement for World Class Manufacturing: a Model for American Companies. Cambridge: Productivity Press.
Mechlin, G.F. and Berg, D. (1980) Evaluating research - ROI is not enough. Harvard Business Review, September-October, 93-99.
Meigs, W.B. and Larsen, E.J. (1976) Principles of Auditing. Homewood.
Moed, H.F., Burger, W.J.M., Frankfort, J.G. and van Raan, A.F.J. (1985) The use of bibliometric data as tools for university research policy. Research Policy, 14, 131-149.
Narin, F. (1976) Evaluative Bibliometrics. The Use of Publication and Citation Data in the Evaluation of Scientific Activity. Washington DC: National Science Foundation.
Neely, A. and Wilson, J.R. (1992) Measuring product goal congruence: an exploratory study. International Journal of Operations and Production Management, 12, 4, 45-52.
Neely, A., Gregory, M. and Platts, K. (1995) A performance measurement system design: a literature review and research agenda. International Journal of Operations and Production Management, 15, 4, 80-116.
Nonaka, I. (1994) A dynamic theory of organisational knowledge creation. Organisation Science, 5, 1, 14-36.
Noyons, E.C.M., Luwel, M. and Moed, H.F. (1999) Combining mapping and citation analysis for evaluative bibliometric purpose. A bibliometric study on recent developments in microelectronics, and on the performance of interuniversity microelectronics centre in Leuven from an international perspective. Journal of the American Society for Information Science, 50, 115-131.
Pappas, R.A. and Remer, D.S. (1985) Measuring R&D productivity. Research Management, 28, 3, 15-22.
Patterson, W.C. (1983) Evaluating R&D performance at Alcoa labs. Research Management, 3.
Polanyi, M. (1966) The Tacit Dimension. New York: Doubleday.
Price, D.J. de Solla (1963) Little Science, Big Science. New York: Columbia University Press.
Rangone, A. (1996) An analytical hierarchy process framework for comparing the overall performance of manufacturing departments. International Journal of Operation Production Management, 16, 8, 104-119.
Rantanen, H. (2001) Internal obstacles restraining productivity improvement in small Finnish industrial enterprises. International Journal of Production Economics, 69, 85-91.
Rubenstein, A.H. and Geisler, E. (1991) Evaluating the outputs and impact of R&D/innovation. International Journal of Technology Management, 181-204.
Suwignjio, P., Bititci, U.S. and Carrie, A.S. (2000) Quantitative models for performance measurement system. International Journal of Production Economics, 69, 231-241.
Van Raan, A.F.J. (1993) Advanced bibliometric methods to assess research performance and scientific development: basic principles and recent practical applications. Research Evaluation, 3, 151-166.
Werner, B.M. and Souder, W.E. (1997) Measuring R&D performance-state of the art. Research and Technology Management, 40, 2, 34-42.
Whitley, R., Parish, T., Dressler, R. and Nicholson, G. (1998) Evaluating R&D performance using the new sales ratio. Research Technology Management, 41, 6, 20-22.
Whitley, R.
and Frost, P.A. (1971) The measurement of performance in research. Human Relations, 24, 2, 161-178.

Appendix 1

The first index, Index A, represents the funding supplied to each institute by the headquarters in Rome (Table 1a). Index B shows the capacity for self-financing (Table 2a). Following the financial indices, we move on to the indices which express the institutes' capacity for tacit transfer of knowledge. Index X is an indicator which expresses the personnel in training in each institute (Table 3a). Index Δ is another indicator of tacit transfer and indicates the number of courses held by the researchers at outside institutes (Table 4a). Index E is a bibliometric index, which represents the number of publications issued nationally (in Italy) by the personnel of each institute (Table 5a). Index Φ is a bibliometric index, which represents the number of publications issued internationally by the research personnel of each institute (Table 6a). Index Γ is a technometric index, representing the capacity of the various institutes to realise innovations of products and processes, measured by the number of patents taken out during the three-year period (Table 7a).

Table 2a. Computation of Index B (self-financing) in CNR institutes.

| Institutes | Self-financing* | Personnel¹ | Index B |
|---|---|---|---|
| IRSL | 1,198,289 | 37 | 32,386 |
| IMGC | 5,586,599 | 297 | 18,810 |
| III | 1,806,008 | 121 | 14,950 |
| IMA | 1,212,354 | 85 | 14,263 |
| ILM | 580,937 | 50 | 11,618 |
| IRPI | 412,490 | 59 | 6,991 |
| CERIS | 259,125 | 51 | 5,080 |
| ICGF | 390,893 | 92 | 4,249 |
| IFA | 337,355 | 89 | 3,790 |

¹ Research personnel at the institute, except for outside personnel and trainees.
* Values × 1,000,000 in Italian lire; 1,936.27 Italian lire = 1 € (euro).

Table 1a. Computation of Index A (centralised funding) in CNR institutes.
| Institutes | Funding* | Personnel¹ | Index A |
|---|---|---|---|
| IRPI | 1,001.8 | 59 | 17 |
| IMGC | 5,119 | 297 | 17.2 |
| ILM | 864.5 | 50 | 17.3 |
| IMA | 1,610.2 | 85 | 18.9 |
| III | 2,640.5 | 121 | 21.5 |
| IFA | 2,004 | 89 | 22.5 |
| IRSL | 1,035 | 37 | 28 |
| CERIS | 1,763.4 | 51 | 34.6 |
| ICGF | 3,210 | 93 | 34.9 |

¹ Research personnel at the institute, except for outside personnel and trainees.
* Values × 1,000,000 in Italian lire; 1,936.27 Italian lire = 1 € (euro).

Table 3a. Computation of Index X (trainees) in CNR institutes.

| Institutes | Trainees | Personnel¹ | Index X (× 100) |
|---|---|---|---|
| IRPI | 34 | 35 | 97 |
| CERIS | 38 | 42 | 90 |
| IRSL | 15 | 22 | 68 |
| III | 39 | 64 | 61 |
| IFA | 22 | 38 | 58 |
| ICGF | 60 | 106 | 56 |
| IMGC | 63 | 113 | 56 |
| IMA | 10 | 23 | 43 |
| ILM | 6 | 14 | 43 |

¹ Research personnel employed at levels I, II, III, personnel on contract and outside personnel.

Table 4a. Computation of Index Δ (courses held by researchers) in CNR institutes.

| Institutes | Courses held by researchers | Personnel¹ | Index Δ (× 100) |
|---|---|---|---|
| IMGC | 123 | 113 | 109 |
| CERIS | 44 | 42 | 105 |
| III | 55 | 64 | 86 |
| IFA | 22 | 38 | 58 |
| IRPI | 20 | 35 | 57 |
| ICGF | 55 | 106 | 52 |
| IMA | 7 | 23 | 30 |
| IRSL | 1 | 22 | 4 |
| ILM | 0 | 0 | 0 |

¹ Research personnel employed at levels I, II, III, personnel on contract and outside personnel.

Table 5a. Computation of Index E (number of national publications) in CNR institutes.

| Institutes | Number of national publications | Personnel¹ | Index E |
|---|---|---|---|
| CERIS | 169 | 51 | 3.31 |
| IRPI | 159 | 75 | 2.12 |
| ICGF | 151 | 93 | 1.62 |
| IMA | 105 | 90 | 1.16 |
| IRSL | 33 | 37 | 0.89 |
| IMGC | 258 | 297 | 0.86 |
| ILM | 40 | 50 | 0.80 |
| III | 97 | 123 | 0.78 |
| IFA | 57 | 89 | 0.64 |

¹ Research personnel at the institute, except for personnel belonging to other organisations (e.g. universities).

Table 6a. Computation of Index Φ (number of international publications) in CNR institutes.

| Institutes | Number of international publications | Personnel¹ | Index Φ |
|---|---|---|---|
| ICGF | 209 | 93 | 2.24 |
| III | 125 | 123 | 1.01 |
| IFA | 57 | 89 | 0.64 |
| ILM | 37 | 50 | 0.74 |
| IMGC | 117 | 297 | 0.39 |
| IRPI | 29 | 75 | 0.38 |
| CERIS | 14 | 51 | 0.27 |
| IMA | 18 | 90 | 0.20 |
| IRSL | 3 | 37 | 0.08 |

Table 7a. Number of patents (Index Γ).

| Institutes | Number of patents |
|---|---|
| IMGC | 1 |
| IMA | 1 |
| III | 1 |
| CERIS | 0 |
| IFA | 0 |
| IRSL | 0 |
| ICGF | 0 |
| ILM | 0 |
| IRPI | 0 |

Note 1.
The model can be used in private R&D labs, but it is necessary to change the indices and the weights of each operator because, for instance, publications or teaching courses are not good performance indicators for companies.

¹ (Table 6a) Research personnel at the institute, except for personnel belonging to other organisations (e.g. universities).
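The index tables of this appendix can be combined into a knowledge score. The Python sketch below does so for four of the institutes, under stated assumptions: the relev function is taken to be the constant 3, minus the funding operator, plus the other five per-capita operators (international publications counted twice), plus 1 if the institute holds at least one patent. The negative sign on the funding operator is an inference from the operator values and scores printed in Table 1, not something stated explicitly in the text. The key names (`A`, `B`, `X`, `D`, `E`, `F`) are illustrative labels for the indices, and the trainee and course indices are used in the × 100 form of Tables 3a and 4a.

```python
# Largest value of each index across the nine institutes (operator denominators).
MAXIMA = {"A": 34.9, "B": 32_386, "X": 97, "D": 109, "E": 3.31, "F": 2.24}

# Index values copied from Tables 1a-7a for four institutes.
INDICES = {
    "III":  {"A": 21.5, "B": 14_950, "X": 61, "D": 86,
             "E": 0.78, "F": 1.01, "patents": 1},
    "IMGC": {"A": 17.2, "B": 18_810, "X": 56, "D": 109,
             "E": 0.86, "F": 0.39, "patents": 1},
    "ICGF": {"A": 34.9, "B": 4_249, "X": 56, "D": 52,
             "E": 1.62, "F": 2.24, "patents": 0},
    "ILM":  {"A": 17.3, "B": 11_618, "X": 43, "D": 0,
             "E": 0.80, "F": 0.74, "patents": 0},
}


def relev(ix):
    """Knowledge score under the assumed form of the relev function."""
    score = 3.0
    score -= ix["A"] / MAXIMA["A"]       # centralised funding (assumed negative)
    score += ix["B"] / MAXIMA["B"]       # self-financing
    score += ix["X"] / MAXIMA["X"]       # trainees
    score += ix["D"] / MAXIMA["D"]       # courses held by researchers
    score += ix["E"] / MAXIMA["E"]       # national publications
    score += 2 * ix["F"] / MAXIMA["F"]   # international publications, weight 2
    score += 1 if ix["patents"] > 0 else 0
    return score


scores = {name: relev(ix) for name, ix in INDICES.items()}
for name, value in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:5s} {value:.2f}")
```

Because the operator values in Table 1 are printed to two decimals, scores computed from the unrounded indices differ from the tabulated knowledge scores by a few hundredths, while the resulting order of the four institutes is unchanged.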