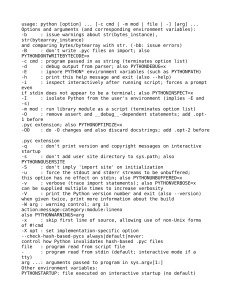

Pro Python System Administration - Learn to manage and monitor your network, web servers, and databases with Python
