AN INTRODUCTION TO

OPTIMIZATION

SOLUTIONS MANUAL

Fourth Edition

Edwin K. P. Chong and Stanislaw H. Żak

A JOHN WILEY & SONS, INC., PUBLICATION

1. Methods of Proof and Some Notation

1.1

A

F

F

T

T

B

F

T

F

T

not A

T

T

F

F

not B

T

F

T

F

A⇒B

T

T

F

T

(not B)⇒(not A)

T

T

F

T

1.2

A

F

F

T

T

B

F

T

F

T

not A

T

T

F

F

not B

T

F

T

F

A⇒B

T

T

F

T

not (A and (not B))

T

T

F

T

1.3

A

F

F

T

T

B

F

T

F

T

not (A and B)

T

T

T

F

not A

T

T

F

F

not B

T

F

T

F

(not A) or (not B))

T

T

T

F

1.4

A

F

F

T

T

B

F

T

F

T

A and B

F

F

F

T

A and (not B)

F

F

T

F

(A and B) or (A and (not B))

F

F

T

T

1.5

The cards that you should turn over are 3 and A. The remaining cards are irrelevant to ascertaining the

truth or falsity of the rule. The card with S is irrelevant because S is not a vowel. The card with 8 is not

relevant because the rule does not say that if a card has an even number on one side, then it has a vowel on

the other side.

Turning over the A card directly verifies the rule, while turning over the 3 card verifies the contraposition.

2. Vector Spaces and Matrices

2.1

We show this by contradiction. Suppose n < m. Then, the number of columns of A is n. Since rank A is

the maximum number of linearly independent columns of A, then rank A cannot be greater than n < m,

which contradicts the assumption that rank A = m.

2.2

.

⇒: Since there exists a solution, then by Theorem 2.1, rank A = rank[A..b]. So, it remains to prove that

rank A = n. For this, suppose that rank A < n (note that it is impossible for rank A > n since A has

only n columns). Hence, there exists y ∈ Rn , y 6= 0, such that Ay = 0 (this is because the columns of

1

A are linearly dependent, and Ay is a linear combination of the columns of A). Let x be a solution to

Ax = b. Then clearly x + y 6= x is also a solution. This contradicts the uniqueness of the solution. Hence,

rank A = n.

⇐: By Theorem 2.1, a solution exists. It remains to prove that it is unique. For this, let x and y be

solutions, i.e., Ax = b and Ay = b. Subtracting, we get A(x − y) = 0. Since rank A = n and A has n

columns, then x − y = 0 and hence x = y, which shows that the solution is unique.

2.3

>

n+1

, i = 1, . . . , k. Since k ≥ n + 2, then the vectors ā1 , . . . , āk must

Consider the vectors āi = [1, a>

i ] ∈ R

n+1

be linearly independent in R

. Hence, there exist α1 , . . . αk , not all zero, such that

k

X

αi ai = 0.

i=1

The first component of the above vector equation is

Pk

i=1 αi ai = 0, completing the proof.

Pk

i=1

αi = 0, while the last n components have the form

2.4

a. We first postmultiply M by the matrix

"

to obtain

"

M m−k,k

M k,k

I m−k

O

#"

Ik

−M m−k,k

Ik

−M m−k,k

#

O

I m−k

#

O

I m−k

"

O

=

M k,k

#

I m−k

.

O

Note that the determinant of the postmultiplying matrix is 1. Next we postmultiply the resulting product

by

"

#

O

Ik

I m−k O

to obtain

"

O

M k,k

I m−k

O

Notice that

"

det M = det

where

#"

I m−k

Ik

O

"

det

O

# "

Ik

Ik

=

O

O

O

M k,k

#!

O

Ik

O

I m−k

"

det

#

O

.

M k,k

O

I m−k

Ik

O

#!

,

#!

= ±1.

The above easily follows from the fact that the determinant changes its sign if we interchange columns, as

discussed in Section 2.2. Moreover,

"

#!

Ik

O

det

= det(I k ) det(M k,k ) = det(M k,k ).

O M k,k

Hence,

det M = ± det M k,k .

b. We can see this on the following examples. We assume, without loss of generality that M m−k,k = O and

let M k,k = 2. Thus k = 1. First consider the case when m = 2. Then we have

"

# "

#

O

I m−k

0 1

M=

=

.

M k,k

O

2 0

2

Thus,

det M = −2 = det (−M k,k ) .

Next consider the case when m = 3. Then

"

det

O

M k,k

0

#

0

I m−k

= det

O

· · ·

2

..

.

..

.

···

..

.

1

0

···

0

0

1

= 2 6= det (−M k,k ) .

· · ·

0

Therefore, in general,

det M 6= det (−M k,k )

However, when k = m/2, that is, when all sub-matrices are square and of the same dimension, then it is

true that

det M = det (−M k,k ) .

See [121].

2.5

Let

"

A

M=

C

B

D

#

and suppose that each block is k × k. John R. Silvester [121] showed that if at least one of the blocks is

equal to O (zero matrix), then the desired formula holds. Indeed, if a row or column block is zero, then the

determinant is equal to zero as follows from the determinant’s properties discussed Section 2.2. That is, if

A = B = O, or A = C = O, and so on, then obviously det M = 0. This includes the case when any three

or all four block matrices are zero matrices.

If B = O or C = O then

"

#

A B

det M = det

= det (AD) .

C D

The only case left to analyze is when A = O or D = O. We will show that in either case,

det M = det (−BC) .

Without loss of generality suppose that D = O. Following arguments of John R. Silvester [121], we premultiply M by the product of three matrices whose determinants are unity:

"

#"

#"

#"

# "

#

I k −I k I k O

I k −I k A B

−C O

=

.

O

Ik

Ik Ik

O

Ik

C O

A B

Hence,

"

A

det

C

B

O

#

"

=

−C

A

O

B

#

= det (−C) det B

= det (−I k ) det C det B.

Thus we have

"

A

det

C

#

B

= det (−BC) = det (−CB) .

O

3

2.6

We represent the given system of equations in the form Ax = b, where

x1

"

#

1 1 2 1

x2

A=

,

x = ,

and

x3

1 −2 0 −1

x4

Using elementary row operations yields

"

#

"

1 1 2 1

1

A=

→

1 −2 0 −1

0

"

1 1

[A, b] =

1 −2

2 1

0 −1

1

−3

#

"

1

1

→

−2

0

2

−2

1

−3

"

#

1

b=

.

−2

#

1

,

−2

2

−2

and

1

−2

#

1

,

−3

from which rank A = 2 and rank[A, b] = 2. Therefore, by Theorem 2.1, the system has a solution.

We next represent the system of equations as

"

#" # "

#

1 1

x1

1 − 2x3 − x4

=

1 −2 x2

−2 + x4

Assigning arbitrary values to x3 and x4 (x3 = d3 , x4 = d4 ), we get

" #

"

#−1 "

#

x1

1 1

1 − 2x3 − x4

=

x2

1 −2

−2 + x4

"

#"

#

1 −2 −1 1 − 2x3 − x4

= −

3 −1 1

−2 + x4

"

#

− 43 d3 − 31 d4

=

.

1 − 32 d3 − 32 d4

Therefore, a general solution is

1

4

4

− 3 d3 − 13 d4

−3

−3

0

x1

x 1 − 2 d − 2 d − 2

− 2

1

2

3 3

3 4 =

=

3 d3 + 3 d4 + ,

x3

1

0

0

d3

x4

d4

0

1

0

where d3 and d4 are arbitrary values.

2.7

1. Apply the definition of | − a|:

−a

| − a| =

0

−(−a)

−a if

=

0

if

a

if

if −a > 0

if −a = 0

if −a < 0

a<0

a=0

a>0

= |a|.

2. If a ≥ 0, then |a| = a. If a < 0, then |a| = −a > 0 > a. Hence |a| ≥ a. On the other hand, | − a| ≥ −a

(by the above). Hence, a ≥ −| − a| = −|a| (by property 1).

4

3. We have four cases to consider. First, if a, b ≥ 0, then a + b ≥ 0. Hence, |a + b| = a + b = |a| + |b|.

Second, if a, b ≥ 0, then a + b ≤ 0. Hence |a + b| = −(a + b) = −a − b = |a| + |b|.

Third, if a ≥ 0 and b ≤ 0, then we have two further subcases:

1. If a + b ≥ 0, then |a + b| = a + b ≤ |a| + |b|.

2. If a + b ≤ 0, then |a + b| = −a − b ≤ |a| + |b|.

The fourth case, a ≤ 0 and b ≥ 0, is identical to the third case, with a and b interchanged.

4. We first show |a − b| ≤ |a| + |b|. We have

|a − b| = |a + (−b)|

≤

|a| + | − b|

= |a| + |b|

by property 3

by property 1.

To show ||a| − |b|| ≤ |a − b|, we note that |a| = |a − b + b| ≤ |a − b| + |b|, which implies |a| − |b| ≤ |a − b|. On the

other hand, from the above we have |b| − |a| ≤ |b − a| = |a − b| by property 1. Therefore, ||a| − |b|| ≤ |a − b|.

5. We have four cases. First, if a, b ≥ 0, we have ab ≥ 0 and hence |ab| = ab = |a||b|. Second, if a, b ≤ 0,

we have ab ≥ 0 and hence |ab| = ab = (−a)(−b) = |a||b|. Third, if a ≤ 0, b ≤ 0, we have ab ≤ 0 and hence

|ab| = −ab = a(−b) = |a||b|. The fourth case, a ≤ 0 and b ≥ 0, is identical to the third case, with a and b

interchanged.

6. We have

|a + b|

≤

|a| + |b|

by property 3

≤ c + d.

7. ⇒: By property 2, −a ≤ |a| and a ≤ |a. Therefore, |a| < b implies −a ≤ |a| < b and a ≤ |a| < b.

⇐: If a ≥ 0, then |a| = a < b. If a < 0, then |a| = −a < b.

For the case when “<” is replaced by “≤”, we simply repeat the above proof with “<” replaced by “≤”.

8. This is simply the negation of property 7 (apply DeMorgan’s Law).

2.8

Observe that we can represent hx, yi2 as

"

hx, yi2 = x

>

#

3

y = (Qx)> (Qy) = x> Q2 y,

5

2

3

where

"

1

Q=

1

#

1

.

2

Note that the matrix Q = Q> is nonsingular.

1. Now, hx, xi2 = (Qx)> (Qx) = kQxk2 ≥ 0, and

hx, xi2 = 0 ⇔

⇔

⇔

kQxk2 = 0

Qx = 0

x=0

since Q is nonsingular.

2. hx, yi2 = (Qx)> (Qy) = (Qy)> (Qx) = hy, xi2 .

3. We have

hx + y, zi2

=

(x + y)> Q2 z

= x> Q2 z + y > Q2 z

= hx, zi2 + hy, zi2 .

5

4. hrx, yi2 = (rx)> Q2 y = rx> Q2 y = rhx, yi2 .

2.9

We have kxk = k(x − y) + yk ≤ kx − yk + kyk by the Triangle Inequality. Hence, kxk − kyk ≤ kx − yk. On

the other hand, from the above we have kyk − kxk ≤ ky − xk = kx − yk. Combining the two inequalities,

we obtain |kxk − kyk| ≤ kx − yk.

2.10

Let > 0 be given. Set δ = . Hence, if kx − yk < δ, then by Exercise 2.9, |kxk − kyk| ≤ kx − yk < δ = .

3. Transformations

3.1

Let v be the vector such that x are the coordinates of v with respect to {e1 , e2 , . . . , en }, and x0 are the

coordinates of v with respect to {e01 , e02 , . . . , e0n }. Then,

v = x1 e1 + · · · + xn en = [e1 , . . . , en ]x,

and

v = x01 e01 + · · · + x0n e0n = [e01 , . . . , e0n ]x0 .

Hence,

[e1 , . . . , en ]x = [e01 , . . . , e0n ]x0

which implies

x0 = [e01 , . . . , e0n ]−1 [e1 , . . . , en ]x = T x.

3.2

a. We have

1

0

0

0

[e1 , e2 , e3 ] = [e1 , e2 , e3 ] 3

−4

2

−1

5

4

5 .

3

Therefore,

1

0

0

0 −1

T = [e1 , e2 , e3 ] [e1 , e2 , e3 ] = 3

−4

2

−1

5

b. We have

−1

4

28

1

5 =

29

42

3

−11

1

[e1 , e2 , e3 ] = [e01 , e02 , e03 ] 1

3

2

−1

4

3

0 .

5

Therefore,

1

T = 1

3

3

0 .

5

2

−1

4

3.3

We have

2

[e1 , e2 , e3 ] = [e01 , e02 , e03 ] 1

−1

6

2

−1

2

3

0 .

1

−14

−19

13

−14

−7 .

7

Therefore, the transformation matrix from {e01 , e02 , e03 } to {e1 , e2 , e3 } is

2

2 3

T = 1 −1 0 ,

−1 2 1

Now, consider a linear transformation L : R3 → R3 , and let A be its representation with respect to

{e1 , e2 , e3 }, and B its representation with respect to {e01 , e02 , e03 }. Let y = Ax and y 0 = Bx0 . Then,

y 0 = T y = T (Ax) = T A(T −1 x0 ) = (T AT −1 )x0 .

Hence, the representation of the linear transformation with respect to {e01 , e02 , e03 } is

3 −10 −8

B = T AT −1 = −1

8

4 .

2 −13 −7

3.4

We have

1

0

[e01 , e02 , e03 , e04 ] = [e1 , e2 , e3 , e4 ]

0

0

Therefore, the transformation matrix from

1 1

0 1

T =

0 0

0 0

1

1

0

0

1

1

1

0

1

1

.

1

1

{e1 , e2 , e3 , e4 } to {e01 , e02 , e03 , e04 } is

−1

1 −1 0

0

1 1

0 1 −1 0

1 1

.

=

0 0

1 −1

1 1

0 0

0

1

0 1

Now, consider a linear transformation L : R4 → R4 , and let A be its representation with respect to

{e1 , e2 , e3 , e4 }, and B its representation with respect to {e01 , e02 , e03 , e04 }. Let y = Ax and y 0 = Bx0 .

Then,

y 0 = T y = T (Ax) = T A(T −1 x0 ) = (T AT −1 )x0 .

Therefore,

B = T AT −1

5

−3

=

−1

1

3

−2

0

1

4

−1

−1

1

3

−2

.

−2

4

3.5

Let {v 1 , v 2 , v 3 , v 4 } be a set of linearly independent eigenvectors of A corresponding to the eigenvalues λ1 ,

λ2 , λ3 , and λ4 . Let T = [v 1 , v 2 , v 3 , v 4 ]. Then,

AT

= A[v 1 , v 2 , v 3 , v 4 ] = [Av 1 , Av 2 , Av 3 , Av 4 ]

=

λ1

0

[λ1 v 1 , λ2 v 2 , λ3 v 3 , λ4 v 4 ] = [v 1 , v 2 , v 3 , v 4 ]

0

0

Hence,

λ1

AT = T 0

0

7

0

λ2

0

0

0 ,

λ3

0

λ2

0

0

0

0

λ3

0

0

0

.

0

λ4

or

λ1

T −1 AT = 0

0

0

λ2

0

0

0 .

λ3

Therefore, the linear transformation has a diagonal matrix form with respect to the basis formed by a linearly

independent set of eigenvectors.

Because

det(A) = (λ − 2)(λ − 3)(λ − 1)(λ + 1),

the eigenvalues are λ1 = 2, λ2 = 3, λ3 = 1, and λ4 = −1.

From Av i = λi v i , where v i 6= 0 (i = 1, 2, 3), the corresponding eigenvectors are

0

0

0

24

0

0

2

−12

v1 = ,

v2 = ,

v 3 = , and

v4 =

.

1

1

−9

1

0

1

1

9

Therefore, the basis we are interested in is

0

0

{v 1 , v 2 , v 3 } = ,

1

1

0

0

,

1

1

0

2

,

−9

1

24

−12

.

1

9

3.6

Suppose v 1 , . . . , v n are eigenvectors of A corresponding to λ1 , . . . , λn , respectively. Then, for each i =

1, . . . , n, we have

(I n − A)v i = v i − Av i = v i − λi v i = (1 − λi )v i

which shows that 1 − λ1 , . . . , 1 − λn are the eigenvalues of I n − A.

Alternatively, we may write the characteristic polynomial of I n − A as

πI n −A (1 − λ) = det((1 − λ)I n − (I n − A)) = det(−[λI n − A]) = (−1)n πA (λ),

which shows the desired result.

3.7

Let x, y ∈ V ⊥ , and α, β ∈ R. To show that V ⊥ is a subspace, we need to show that αx + βy ∈ V ⊥ . For this,

let v be any vector in V. Then,

v > (αx + βy) = αv > x + βv > y = 0,

since v > x = v > y = 0 by definition.

3.8

The null space of A is N (A) = x ∈ R3 : Ax = 0 . Using elementary row operations and back-substitution,

we can solve the system of equations:

4 −2 0

4 −2 0

4 −2 0

4x1 − 2x2 = 0

⇒

2 1 −1 → 0 2 −1 → 0 2 −1

2x2 − x3 = 0

2 −3 1

0 −2 1

0 0

0

1

x1

41

x = x2 = 2 x3 .

x3

1

⇒

x2 =

1

x3 ,

2

x1 =

1

1

x2 = x3

2

4

8

⇒

Therefore,

1

N (A) = 2 c : c ∈ R .

4

3.9

Let x, y ∈ R(A), and α, β ∈ R. Then, there exists v, u such that x = Av and y = Au. Thus,

αx + βy = αAv + βAu = A(αv + βu).

Hence, αx + βy ∈ R(A), which shows that R(A) is a subspace.

Let x, y ∈ N (A), and α, β ∈ R. Then, Ax = 0 and Ay = 0. Thus,

A(αx + βy) = αAx + βAy = 0.

Hence, αx + βy ∈ N (A), which shows that N (A) is a subspace.

3.10

Let v ∈ R(B), i.e., v = Bx for some x. Consider the matrix [A v]. Then, N (A> ) = N ([A v]> ), since if

u ∈ N (A> ), then u ∈ N (B > ) by assumption, and hence u> v = u> Bx = x> B > u = 0. Now,

dim R(A) + dim N (A> ) = m

and

dim R([A v]) + dim N ([A v]> ) = m.

Since dim N (A> ) = dim N ([A v]> ), then we have dim R(A) = dim R([A v]). Hence, v is a linear combination of the columns of A, i.e., v ∈ R(A), which completes the proof.

3.11

We first show V ⊂ (V ⊥ )⊥ . Let v ∈ V , and u any element of V ⊥ . Then u> v = v > u = 0. Therefore,

v ∈ (V ⊥ )⊥ .

We now show (V ⊥ )⊥ ⊂ V . Let {a1 , . . . , ak } be a basis for V , and {b1 , . . . , bl } a basis for (V ⊥ )⊥ . Define

A = [a1 · · · ak ] and B = [b1 · · · bl ], so that V = R(A) and (V ⊥ )⊥ = R(B). Hence, it remains to show

that R(B) ⊂ R(A). Using the result of Exercise 3.10, it suffices to show that N (A> ) ⊂ N (B > ). So let

x ∈ N (A> ), which implies that x ∈ R(A)⊥ = V ⊥ , since R(A)⊥ = N (A> ). Hence, for all y, we have

(By)> x = 0 = y > B > x, which implies that B > x = 0. Therefore, x ∈ N (B > ), which completes the proof.

3.12

Let w ∈ W ⊥ , and y be any element of V. Since V ⊂ W, then y ∈ W. Therefore, by definition of w, we have

w> y = 0. Therefore, w ∈ V ⊥ .

3.13

Let r = dim V. Let v 1 , . . . , v r be a basis for V, and V the matrix whose ith column is v i . Then, clearly

V = R(V ).

⊥

Let u1 , . . . , un−r be a basis for V ⊥ , and U the matrix whose ith row is u>

= R(U > ), and

i . Then, V

> ⊥

⊥ ⊥

V = (V ) = R(U ) = N (U ) (by Exercise 3.11 and Theorem 3.4).

3.14

a. Let x ∈ V. Then, x = P x + (I − P )x. Note that P x ∈ V, and (I − P )x ∈ V ⊥ . Therefore,

x = P x + (I − P )x is an orthogonal decomposition of x with respect to V. However, x = x + 0 is also an

orthogonal decomposition of x with respect to V. Since the orthogonal decomposition is unique, we must

have x = P x.

b. Suppose P is an orthogonal projector onto V. Clearly, R(P ) ⊂ V by definition. However, from part a,

x = P x for all x ∈ V, and hence V ⊂ R(P ). Therefore, R(P ) = V.

3.15

To answer the question, we have to represent the quadratic form with a symmetric matrix as

"

#

"

#!

"

#

1

1

1

−8

1

1

1

−7/2

x>

+

x = x>

x.

2 1 1

2 −8 1

−7/2

1

9

The leading principal minors are ∆1 = 1 and ∆2 = −45/4. Therefore, the quadratic form is indefinite.

3.16

The leading principal minors are ∆1 = 2, ∆2 = 0, ∆3 = 0, which are all nonnegative. However, the

eigenvalues of A are 0, −1.4641, 5.4641 (for example, use Matlab to quickly check this). This implies that

the matrix A is indefinite (by Theorem 3.7). An alternative way to show that A is not positive semidefinite

is to find a vector x such that x> Ax < 0. So, let x be an eigenvector of A corresponding to its negative

eigenvalue λ = −1.4641. Then, x> Ax = x> (λx) = λx> x = λkxk2 < 0. For this example, we can take

x = [0.3251, 0.3251, −0.8881]> , for which we can verify that x> Ax = −1.4643.

3.17

a. The matrix Q is indefinite, since ∆2 = −1 and ∆3 = 2.

b. Let x ∈ M. Then, x2 + x3 = −x1 , x1 + x3 = −x2 , and x1 + x2 = −x3 . Therefore,

x> Qx = x1 (x2 + x3 ) + x2 (x1 + x3 ) + x3 (x1 + x2 ) = −(x21 + x22 + x23 ).

This implies that the matrix Q is negative definite on the subspace M.

3.18

a. We have

0

f (x1 , x2 , x3 ) = x22 = [x1 , x2 , x3 ] 0

0

0

1

0

x1

0

0 x2 .

x3

0

Then,

0

Q = 0

0

0

1

0

0

0

0

and the eigenvalues of Q are λ1 = 0, λ2 = 1, and λ3 = 0. Therefore, the quadratic form is positive

semidefinite.

b. We have

1

f (x1 , x2 , x3 ) = x21 + 2x22 − x1 x3 = [x1 , x2 , x3 ] 0

− 21

0

2

0

− 12

x1

0 x2 .

x3

0

Then,

1

Q= 0

− 21

√

and the eigenvalues of Q are λ1 = 2, λ2 = (1 − 2)/2,

is indefinite.

0

2

0

− 21

0

0

and λ3 = (1 +

√

2)/2. Therefore, the quadratic form

c. We have

1

2

2

f (x1 , x2 , x3 ) = x1 + x3 + 2x1 x2 + 2x1 x3 + 2x2 x3 = [x1 , x2 , x3 ] 1

1

1

0

1

1

x1

1 x2 .

1

x3

Then,

1 1

Q = 1 0

1 1

√

and the eigenvalues of Q are λ1 = 0, λ2 = 1 − 3, and

indefinite.

10

1

1

1

λ3 = 1 +

√

3. Therefore, the quadratic form is

3.19

We have

f (x1 , x2 , x3 )

=

=

Let

4

Q = −2

6

−2

1

−3

4x21 + x22 + 9x23 − 4x1 x2 − 6x2 x3 + 12x1 x3

x1

4 −2 6

[x1 , x2 , x3 ] −2 1 −3 x2 .

6 −3 9

x3

6

−3 ,

9

x1

x = x2 = x1 e1 + x2 e2 + x3 e3 ,

x3

where e1 , e2 , and e3 form the natural basis for R3 .

Let v 1 , v 2 , and v 3 be another basis for R3 . Then, the vector x is represented in the new basis as x̃, where

x = [v 1 , v 2 , v 3 ]x̃ = V x̃.

Now, f (x) = x> Qx = (V x̃)> Q(V x̃) = x̃> (V > QV )x̃ = x̃> Q̃x̃, where

q̃11 q̃12 q̃13

Q̃ = q̃21 q̃22 q̃23

q̃31 q̃32 q̃33

and q̃ij = v i Qv j for i, j = 1, 2, 3.

We will find a basis {v 1 , v 2 , v 3 } such that q̃ij = 0 for i 6= j, and is of the form

v1

= α11 e1

v2

= α21 e1 + α22 e2

v3

= α31 e1 + α32 e2 + α33 e3

Because

q̃ij = v i Qv j = v i Q(αj1 e1 + . . . + αjj ej ) = αj1 (v i Qe1 ) + . . . + αjj (v i Qej ),

we deduce that if v i Qej = 0 for j < i, then v i Qv j = 0. In this case,

q̃ii = v i Qv i = v i Q(αi1 e1 + . . . + αii ei ) = αi1 (v i Qe1 ) + . . . + αii (v i Qei ) = αii (v i Qei ).

Our task therefore is to find v i (i = 1, 2, 3) such that

v i Qej

=

0,

v i Qei

=

1,

j<i

and, in this case, we get

α11

Q̃ = 0

0

0

α22

0

0

0 .

α33

Case of i = 1.

From v >

1 Qe1 = 1,

(α11 e1 )> Qe1 = α11 (e>

1 Qe1 ) = α11 q11 = 1.

Therefore,

1

4

α11

1

1

1

=

=

=

q11

∆1

4

⇒

11

0

v 1 = α11 e1 = .

0

0

Case of i = 2.

From v >

2 Qe1 = 0,

>

(α21 e1 + α22 e2 )> Qe1 = α21 (e>

1 Qe1 ) + α22 (e2 Qe1 ) = α21 q11 + α22 q21 = 0.

From v >

2 Qe2 = 1,

>

(α21 e1 + α22 e2 )> Qe2 = α21 (e>

1 Qe2 ) + α22 (e2 Qe2 ) = α21 q12 + α22 q22 = 1.

Therefore,

"

q11

q12

q21

q22

#"

# " #

α21

0

=

.

α22

1

But, since ∆2 = 0, this system of equations is inconsistent. Hence, in this problem v >

2 Qe2 = 0 should

be satisfied instead of v >

Qe

=

1

so

that

the

system

can

have

a

solution.

In

this

case,

the diagonal

2

2

matrix becomes

α11 0 0

Q̃ = 0 0 0 ,

0 0 α33

and the system of equations become

"

#"

# " #

"

# " #

1

q11 q21 α21

0

α21

=

⇒

= 2 α22 ,

1

q12 q22 α22

0

α22

where α22 is an arbitrary real number. Thus,

1

2

v 2 = α21 e1 + α22 e2 = 1 a,

0

where a is an arbitrary real number.

Case of i = 3.

Since in this case ∆3 = det(Q) = 0, we will have to apply the same reasoning of the previous case and

>

use the condition v >

3 Qe3 = 0 instead of v 3 Qe3 = 1. In this way the diagonal matrix becomes

α11 0 0

Q̃ = 0 0 0 .

0 0 0

>

Thus, from v >

3 Qe1 = 0, v 3 Qe2

q11 q21

q12 q22

q13 q23

= 0 and v >

3 Qe3 = 0,

q31

α31

α31

α31

q32 α32 = Q> α32 = Q α32

q33

α33

α33

α33

4 −2 6

α31

0

= −2 1 −3 α32 = 0 .

6 −3 9

α33

0

Therefore,

α31

α31

α32 = 2α31 + 3α33 ,

α33

α33

12

where α31 and α33 are arbitrary real numbers. Thus,

b

v 3 = α31 e1 + α32 e2 + α33 e3 = 2b + 3c ,

c

where b and c are arbitrary real numbers.

Finally,

1

4

a

2

V = [x1 , x2 , x3 ] = 0

0

a

0

b

2b + 3c ,

c

where a, b, and c are arbitrary real numbers.

3.20

We represent this quadratic form as f (x) = x> Qx, where

1 ξ −1

Q = ξ 1 2 .

−1 2 5

The leading principal minors of Q are ∆1 = 1, ∆2 = 1 − ξ 2 , ∆3 = −5ξ 2 − 4ξ. For the quadratic form to

be positive definite, all the leading principal minors of Q must be positive. This is the case if and only if

ξ ∈ (−4/5, 0).

3.21

The matrix Q = Q> > 0 can be represented as Q = Q1/2 Q1/2 , where Q1/2 = (Q1/2 )> > 0.

1. Now, hx, xiQ = (Q1/2 x)> (Q1/2 x) = kQ1/2 xk2 ≥ 0, and

kQ1/2 xk2 = 0

hx, xiQ = 0 ⇔

Q1/2 x = 0

x=0

⇔

⇔

since Q1/2 is nonsingular.

2. hx, yiQ = x> Qy = y > Q> x = y > Qx = hy, xiQ .

3. We have

hx + y, ziQ

=

(x + y)> Qz

= x> Qz + y > Qz

= hx, ziQ + hy, ziQ .

4. hrx, yiQ = (rx)> Qy = rx> Qy = rhx, yiQ .

3.22

We have

We first show that kAk∞

kAk∞ = max{kAxk∞ : kxk∞ = 1}.

Pn

≤ maxi k=1 |aik |. For this, note that for each x such that kxk∞ = 1, we have

kAxk∞

=

max

i

≤ max

i

≤ max

i

13

n

X

aik xk

k=1

n

X

|aik ||xk |

k=1

n

X

k=1

|aik |,

since |xk | ≤ maxk |xk | = kxk∞ = 1. Therefore,

n

X

kAk∞ ≤ max

i

|aik |.

k=1

Pn

n

To show

Pn that kAk∞ = maxi k=1 |aik |, it remains to find a x̃ ∈ R , kx̃k∞ = 1, such that kAx̃k∞ =

maxi k=1 |aik |. So, let j be such that

n

X

|ajk | = max

i

k=1

n

X

|aik |.

k=1

Define x̃ by

(

|ajk |/ajk

x̃k =

1

if ajk 6= 0

.

otherwise

Clearly kx̃k∞ = 1. Furthermore, for i 6= j,

n

X

aik x̃k ≤

k=1

n

X

|aik | ≤ max

i

k=1

and

n

X

n

X

n

X

ajk x̃k =

k=1

|aik | =

k=1

n

X

|ajk |

k=1

|ajk |.

k=1

Therefore,

kAx̃k∞ = max

i

n

X

n

X

aik x̃k =

k=1

|ajk | = max

i

k=1

n

X

|aik |.

k=1

3.23

We have

kAk1 = max{kAxk1 : kxk1 = 1}.

Pm

We first show that kAk1 ≤ maxk i=1 |aik |. For this, note that for each x such that kxk1 = 1, we have

kAxk1

=

≤

≤

n

m X

X

|aik ||xk |

i=1 k=1

n

X

max

k

≤ max

k

since

Pn

k=1

m

X

|xk |

k=1

≤

aik xk

i=1 k=1

m X

n

X

|aik |

i=1

m

X

!

|aik |

i=1

m

X

k=1

|aik |,

i=1

|xk | = kxk1 = 1. Therefore,

kAk1 ≤ max

k

14

m

X

i=1

n

X

|aik |.

|xk |

Pm

m

To show

Pmthat kAk1 = maxk i=1 |aik |, it remains to find a x̃ ∈ R , kx̃k1 = 1, such that kAx̃k1 =

maxk i=1 |aik |. So, let j be such that

m

X

|aij | = max

k

i=1

m

X

|aik |.

i=1

Define x̃ by

(

1

x̃k =

0

if k = j

.

otherwise

Clearly kx̃k1 = 1. Furthermore,

kAx̃k1 =

m X

n

X

aik x̃k =

i=1 k=1

m

X

|aij | = max

i=1

k

m

X

|aik |.

i=1

4. Concepts from Geometry

4.1

⇒: Let S = {x : Ax = b} be a linear variety. Let x, y ∈ S and α ∈ R. Then,

A(αx + (1 − α)y) = αAx + (1 − α)Ay = αb + (1 − α)b = b.

Therefore, αx + (1 − α)y ∈ S.

⇐: If S is empty, we are done. So, suppose x0 ∈ S. Consider the set S0 = S − x0 = {x − x0 : x ∈ S}.

Clearly, for all x, y ∈ S0 and α ∈ R, we have αx + (1 − α)y ∈ S0 . Note that 0 ∈ S0 . We claim that S0

is a subspace. To see this, let x, y ∈ S0 , and α ∈ R. Then, αx = αx + (1 − α)0 ∈ S0 . Furthermore,

1

1

2 x + 2 y ∈ S0 , and therefore x + y ∈ S0 by the previous argument. Hence, S0 is a subspace. Therefore, by

Exercise 3.13, there exists A such that S0 = N (A) = {x : Ax = 0}. Define b = Ax0 . Then,

S

= S0 + x0 = {y + x0 : y ∈ N (A)}

= {y + x0 : Ay = 0}

= {y + x0 : A(y + x0 ) = b}

= {x : Ax = b}.

4.2

Let u, v ∈ Θ = {x ∈ Rn : kxk ≤ r}, and α ∈ [0, 1]. Suppose z = αu + (1 − α)v. To show that Θ is convex,

we need to show that z ∈ Θ, i.e., kzk ≤ r. To this end,

kzk2

=

(αu> + (1 − α)v > )(αu + (1 − α)v)

= α2 kuk2 + 2α(1 − α)u> v + (1 − α)2 kvk2 .

Since u, v ∈ Θ, then kuk2 ≤ r2 and kvk2 ≤ r2 . Furthermore, by the Cauchy-Schwarz Inequality, we have

u> v ≤ kukkvk ≤ r2 . Therefore,

kzk2 ≤ α2 r2 + 2α(1 − α)r2 + (1 − α)2 r2 = r2 .

Hence, z ∈ Θ, which implies that Θ is a convex set, i.e., the any point on the line segment joining u and v

is also in Θ.

4.3

Let u, v ∈ Θ = {x ∈ Rn : Ax = b}, and α ∈ [0, 1]. Suppose z = αu + (1 − α)v. To show that Θ is convex,

we need to show that z ∈ Θ, i.e., Az = b. To this end,

Az

= A(αu + (1 − α)v)

= αAu + (1 − α)Av.

15

Since u, v ∈ Θ, then Au = b and Av = b. Therefore,

Az = αb + (1 − α)b = b,

and hence z ∈ Θ.

4.4

Let u, v ∈ Θ = {x ∈ Rn : x ≥ 0}, and α ∈ [0, 1]. Suppose z = αu + (1 − α)v. To show that Θ is convex,

we need to show that z ∈ Θ, i.e., z ≥ 0. To this end, write x = [x1 , . . . , xn ]> , y = [y1 , . . . , yn ]> , and

z = [z1 , . . . , zn ]> . Then, zi = αxi + (1 − α)yi , i = 1, . . . , n. Since xi , yi ≥ 0, and α, 1 − α ≥ 0, we have zi ≥ 0.

Therefore, z ≥ 0, and hence z ∈ Θ.

5. Elements of Calculus

5.1

Observe that

kAk k ≤ kAk−1 kkAk ≤ kAk−2 kkAk2 ≤ · · · ≤ kAkk .

Therefore, if kAk < 1, then limk→∞ kAk k = O which implies that limk→∞ Ak = O.

5.2

For the case when A has all real eigenvalues, the proof is simple. Let λ be the eigenvalue of A with largest

absolute value, and x the corresponding (normalized) eigenvector, i.e., Ax = λx and kxk = 1. Then,

kAk ≥ kAxk = kλxk = |λ|kxk = |λ|,

which completes the proof for this case.

In general, the eigenvalues of A and the corresponding eigenvectors may be complex. In this case, we

proceed as follows (see [41]). Consider the matrix

B=

A

,

kAk + ε

where ε is a positive real number. We have

kBk =

kAk

< 1.

kAk + ε

By Exercise 5.1, B k → O as k → ∞, and thus by Lemma 5.1, |λi (B)| < 1, i = 1, . . . , n. On the other hand,

for each i = 1, . . . , n,

λi (A)

λi (B) =

,

kAk + ε

and thus

|λi (B)| =

|λi (A)|

< 1.

kAk + ε

which gives

|λi (A)| < kAk + ε.

Since the above arguments hold for any ε > 0, we have |λi (A)| ≤ kAk.

5.3

a. ∇f (x) = (ab> + ba> )x.

b. F (x) = ab> + ba> .

16

5.4

We have

Df (x) = [x1 /3, x2 /2],

and

" #

dg

3

(t) =

.

dt

2

By the chain rule,

d

F (t)

dt

= Df (g(t))

dg

(t)

dt

=

" #

3

[(3t + 5)/3, (2t − 6)/2]

2

=

5t − 1.

5.5

We have

Df (x) = [x2 /2, x1 /2],

and

" #

∂g

4

(s, t) =

,

∂s

2

" #

∂g

3

(s, t) =

.

∂t

1

By the chain rule,

∂

f (g(s, t))

∂s

∂g

(s, t)

∂s

" #

1

4

[2s + t, 4s + 3t]

2

2

= Df (g(t))

=

=

8s + 5t,

and

∂

f (g(s, t))

∂t

∂g

(s, t)

∂t

" #

1

3

[2s + t, 4s + 3t]

2

1

= Df (g(t))

=

=

5s + 3t.

5.6

We have

Df (x) = [3x21 x2 x23 + x2 , x31 x23 + x1 , 2x31 x2 x3 + 1]

and

et + 3t2

dx

(t) = 2t .

dt

1

17

By the chain rule,

d

f (x(t))

dt

= Df (x(t))

dx

(t)

dt

et + 3t2

= [3x1 (t)2 x2 (t)x3 (t)2 + x2 (t), x1 (t)3 x3 (t)2 + x1 (t), 2x1 (t)3 x2 (t)x3 (t) + 1] 2t

1

= 12t(et + 3t2 )3 + 2tet + 6t2 + 2t + 1.

5.7

Let ε > 0 be given. Since f (x) = o(g(x)), then

lim

x→0

kf (x)k

= 0.

g(x)

Hence, there exists δ > 0 such that if kxk < δ, then

kf (x)k

< ε,

g(x)

which can be rewritten as

kf (x)k ≤ εg(x).

5.8

By Exercise 5.7, there exists δ > 0 such that if kxk < δ, then |o(g(x))| < g(x)/2. Hence, if kxk < δ, x 6= 0,

then

1

f (x) ≤ −g(x) + |o(g(x))| < −g(x) + g(x)/2 = − g(x) < 0.

2

5.9

We have that

{x : f1 (x) = 12} = {x : x21 − x22 = 12},

and

{x : f2 (x) = 16} = {x : x2 = 8/x1 }.

To find the intersection points, we substitute x2 = 8/x1 into x21 − x22 = 12 to get x41 − 12x21 − 64 = 0.

Solving gives x21 = 16, −4. Clearly, the only two possibilities for x1 are x1 = +4, −4, from which we obtain

x2 = +2, −2. Hence, the intersection points are located at [4, 2]> and [−4, −2]> .

The level sets associated with f1 (x1 , x2 ) = 12 and f2 (x1 , x2 ) = 16 are shown as follows.

18

x2

f2(x1,x2) = 16

f1(x1,x2) = 12

f1(x1,x2) = 12

3

(4,2)

2

1

1

− 12

2 3

x1

12

(−4,−2)

f2(x1,x2) = 16

5.10

a. We have

1

f (x) = f (xo ) + Df (xo )(x − xo ) + (x − xo )> D2 f (xo )(x − xo ) + · · · .

2

We compute

Df (x)

D2 f (x)

[e−x2 , −x1 e−x2 + 1],

"

#

0

−e−x2

=

.

−e−x2 x1 e−x2

=

Hence,

=

"

#

1

0

x1 − 1

2 + [1, 0]

+ [x1 − 1, x2 ]

2

x2

−1

=

1

1 + x1 + x2 − x1 x2 + x22 + · · · .

2

"

f (x)

#"

−1

1

#

x1 − 1

+ ···

x2

b. We compute

Df (x)

D2 f (x)

[4x3 + 4x1 x22 , 4x21 x2 + 4x32 ],

" 1

#

12x21 + 4x22

8x1 x2

=

.

8x1 x2

4x21 + 12x22

=

Expanding f about the point xo yields

"

"

#

1

16

x1 − 1

f (x) = 4 + [8, 8]

+ [x1 − 1, x2 − 1]

2

8

x2 − 1

=

8

16

8x21 + 8x22 − 16x1 − 16x2 + 8x1 x2 + 12 + · · · .

19

#"

#

x1 − 1

+ ···

x2 − 1

c. We compute

Df (x)

D2 f (x)

[ex1 −x2 + ex1 +x2 + 1, −ex1 −x2 + ex1 +x2 + 1],

"

#

ex1 −x2 + ex1 +x2 −ex1 −x2 + ex1 +x2

=

.

−ex1 −x2 + ex1 +x2 ex1 −x2 + ex1 +x2

=

Expanding f about the point xo yields

=

"

#"

#

#

1

2e 0

x1 − 1

x1 − 1

+ ···

2 + 2e + [2e + 1, 1]

+ [x1 − 1, x2 ]

2

x2

0 2e

x2

=

1 + x1 + +x2 + e(1 + x21 + x22 ) + · · · .

"

f (x)

20

6. Basics of Unconstrained Optimization

6.1

a. In this case, x∗ is definitely not a local minimizer. To see this, note that d = [1, −2]> is a feasible

direction at x∗ . However, d> ∇f (x∗ ) = −1, which violates the FONC.

b. In this case, x∗ satisfies the FONC, and thus is possibly a local minimizer, but it is impossible to be

definite based on the given information.

c. In this case, x∗ satisfies the SOSC, and thus is definitely a (strict) local minimizer.

d. In this case, x∗ is definitely not a local minimizer. To see this, note that d = [0, 1]> is a feasible direction

at x∗ , and d> ∇f (x∗ ) = 0. However, d> F (x∗ )d = −1, which violates the SONC.

6.2

Because there are no constraints on x1 or x2 , we can utilize conditions for unconstrained optimization. To

proceed, we first compute the function gradient and find the critical points, that is, the points that satisfy

the FONC,

∇f (x1 , x2 ) = 0.

The components of the gradient ∇f (x1 , x2 ) are

∂f

= x21 − 4

∂x1

Thus there are four critical points:

" #

" #

2

2

(1)

(2)

x =

, x =

,

4

−4

∂f

= x22 − 16.

∂x2

and

"

x

(3)

#

−2

=

,

4

We next compute the Hessian matrix of the function f :

"

2x1

F (x) =

0

"

and x

(4)

#

−2

=

.

−4

#

0

.

2x2

Note that F (x(1) ) > 0 and therefore, x(1) is a strict local minimizer. Next, F (x(4) ) < 0 and therefore,

x(4) is a strict local maximizer. The Hessian is indefinite at x(2) and x(3) and so these points are neither

maximizer nor minimizers.

6.3

Suppose x∗ is a global minimizer of f over Ω, and x∗ ∈ Ω0 ⊂ Ω. Let x ∈ Ω0 . Then, x ∈ Ω and therefore

f (x∗ ) ≤ f (x). Hence, x∗ is a global minimizer of f over Ω0 .

6.4

Suppose x∗ is an interior point of Ω. Therefore, there exists ε > 0 such that {y : ky − x∗ k < ε} ⊂ Ω. Since

x∗ is a local minimizer of f over Ω, there exists ε0 > 0 such that f (x∗ ) ≤ f (x) for all x ∈ {y : ky −x∗ k < ε0 }.

Take ε00 = min(ε, ε0 ). Then, {y : ky − x∗ k < ε00 } ⊂ Ω0 , and f (x∗ ) ≤ f (x) for all x ∈ {y : ky − x∗ k < ε00 }.

Thus, x∗ is a local minimizer of f over Ω0 .

To show that we cannot make the same conclusion if x∗ is not an interior point, let Ω = {0}, Ω0 = [−1, 1],

and f (x) = x. Clearly, 0 ∈ Ω is a local minimizer of f over Ω. However, 0 ∈ Ω0 is not a local minimizer of f

over Ω0 .

6.5

a. The TONC is: if f 00 (0) = 0, then f 000 (0) = 0. To prove this, suppose f 00 (0) = 0. Now, by the FONC, we

also have f 0 (0) = 0. Hence, by Taylor’s theorem,

f (x) = f (0) +

f 000 (0) 3

x + o(x3 ).

3!

Since 0 is a local minimizer, f (x) ≥ f (0) for all x sufficiently close to 0. Hence, for all such x,

f 000 (0) 3

x ≥ o(x3 ).

3!

21

Now, if x > 0, then

o(x3 )

,

x3

which implies that f 000 (0) ≥ 0. On the other hand, if x < 0, then

f 000 (0) ≥ 3!

f 000 (0) ≤ 3!

o(x3 )

,

x3

which implies that f 000 (0) ≤ 0. This implies that f 000 (0) = 0, as required.

b. Let f (x) = −x4 . Then, f 0 (0) = 0, f 00 (0) = 0, and f 000 (0) = 0, which means that the FONC, SONC, and

TONC are all satisfied. However, 0 is not a local minimizer: f (x) < 0 for all x 6= 0.

c. The answer is yes. To see this, we first write

f (x) = f (0) + f 0 (0)x +

f 00 (0) 2 f 000 (0) 3

x +

x .

2

3!

Now, if the FONC is satisfied, then

f (x) = f (0) +

f 00 (0) 2 f 000 (0) 3

x +

x .

2

3!

Moreover, if the SONC is satisfied, then either (i) f 00 (0) > 0 or (ii) f 00 (0) = 0. In the case (i), it is clear from

the above equation that f (x) ≥ f (0) for all x sufficiently close to 0 (because the third term on the right-hand

side is o(x2 )). In the case (ii), the TONC implies that f (x) = f (0) for all x. In either case, f (x) ≥ f (0) for

all x sufficiently close to 0. This shows that 0 is a local minimizer.

6.6

a. The TONC is: if f 0 (0) = 0 and f 00 (0) = 0, then f 000 (0) ≥ 0. To prove this, suppose f 0 (0) = 0 and

f 00 (0) = 0. By Taylor’s theorem, for x ≥ 0,

f (x) = f (0) +

f 000 (0) 3

x + o(x3 ).

3!

Since 0 is a local minimizer, f (x) ≥ f (0) for sufficiently small x ≥ 0. Hence, for all x ≥ 0 sufficiently small,

f 000 (0) ≥ 3!

o(x3 )

.

x3

This implies that f 000 (0) ≥ 0, as required.

b. Let f (x) = −x4 . Then, f 0 (0) = 0, f 00 (0) = 0, and f 000 (0) = 0, which means that the FONC, SONC, and

TONC are all satisfied. However, 0 is not a local minimizer: f (x) < 0 for all x > 0.

6.7

For convenience, let z 0 = x0 + arg minx∈Ω f (x). Thus we want to show that z 0 = arg miny∈Ω0 f (y); i.e., for

all y ∈ Ω0 , f (y − x0 ) ≥ f (z 0 − x0 ). So fix y ∈ Ω0 . Then, y − x0 ∈ Ω. Hence,

f (y − x0 ) ≥ min f (x)

x∈Ω

= f arg min f (x)

x∈Ω

= f (z 0 − x0 ),

which completes the proof.

6.8

a. The gradient and Hessian of f are

"

∇f (x)

=

F (x)

=

1

2

3

"

1

2

3

22

#

" #

3

3

x+

7

5

#

3

.

7

Hence, ∇f ([1, 1]> ) = [11, 25]> , and F ([1, 1]> ) is as shown above.

b. The direction of maximal rate of increase is the direction of the gradient. Hence, the directional derivative

with respect to a unit vector in this direction is

∇f (x)

k∇f (x)k

>

∇f (x) =

At x = [1, 1]> , we have k∇f ([1, 1]> )k =

√

(∇f (x))> ∇f (x)

= k∇f (x)k.

k∇f (x)k

112 + 252 = 27.31.

c. The FONC in this case is ∇f (x) = 0. Solving, we get

"

#

3/2

x=

.

−1

The point above does not satisfy the SONC because the Hessian is not positive semidefinite (its determinant

is negative).

6.9

a. A differentiable function f decreases most rapidly in the direction of the negative gradient. In our problem,

∇f (x) =

h

∂f

∂x1

∂f

∂x2

i>

h

= 2x1 x2 + x32

x21 + 3x1 x22

i>

.

Hence, the direction of most rapid decrease is

h

−∇f x(0) = − 5

10

i>

.

b. The rate of increase of f at x(0) in the direction −∇f x(0) is

∇f x

(0)

> −∇f x(0) √

√

= −k∇f x(0) k = − 125 = −5 5.

k∇f x(0) k

c. The rate of increase of f at x(0) in the direction d is

∇f x

(0)

" #

i 3 1

= 11.

10

4 5

> d

h

= 5

kdk

6.10

a. We can rewrite f as

"

1 > 4

f (x) = x

2

4

#

" #

4

> 3

x+x

+ 7.

2

4

The gradient and Hessian of f are

∇f (x)

F (x)

"

4

4

"

4

4

=

=

#

" #

4

3

x+

,

2

4

#

4

.

2

Hence ∇f ([0, 1]> ) = [7, 6]> . The directional derivative is

[1, 0]> ∇f ([0, 1]> ) = 7.

23

b. The FONC in this case is ∇f (x) = 0. The only point satisfying the FONC is

" #

1 −5

∗

x =

.

4 2

The point above does not satisfy the SONC because the Hessian is not positive semidefinite (its determinant

is negative). Therefore, f does not have a minimizer.

6.11

a. Write the objective function as f (x) = −x22 . In this problem the only feasible directions at 0 are of the

form d = [d1 , 0]> . Hence, d> ∇f (0) = 0 for all feasible directions d at 0.

b. The point 0 is a local maximizer, because f (0) = 0, while any feasible point x satisfies f (x) ≤ 0.

The point 0 is not a strict local maximizer because for any x of the form x = [x1 , 0]> , we have f (x) =

0 = f (0), and there are such points in any neighborhood of 0.

The point 0 is not a local minimizer because for any point x of the form x = [x1 , x21 ] with x1 > 0, we

have f (x) = −x41 < 0, and there are such points in any neighborhood of 0. Since 0 is not a local minimizer,

it is also not a strict local minimizer.

6.12

a. We have ∇f (x∗ ) = [0, 5]> . The only feasible directions at x∗ are of the form d = [d1 , d2 ]> with d2 ≥ 0.

Therefore, for such feasible directions, d> ∇f (x∗ ) = 5d2 ≥ 0. Hence, x∗ = [0, 1]> satisfies the first order

necessary condition.

b. We have F (x∗ ) = O. Therefore, for any d, d> F (x∗ )d ≥ 0. Hence, x∗ = [0, 1]> satisfies the second order

necessary condition.

c. Consider points of the form x = [x1 , −x21 + 1]> , x1 ∈ R. Such points are in Ω, and are arbitrarily close to

x∗ . However, for such points x 6= x∗ ,

f (x) = 5(−x21 + 1) = 5 − 5x21 < 5 = f (x∗ ).

Hence, x∗ is not a local minimizer.

6.13

a. We have ∇f (x∗ ) = −[3, 0]> . The only feasible directions at x∗ are of the form d = [d1 , d2 ]> with d1 ≤ 0.

Therefore, for such feasible directions, d> ∇f (x∗ ) = 3d1 ≥ 0. Hence, x∗ = [2, 0]> satisfies the first order

necessary condition.

b. We have F (x∗ ) = O. Therefore, for any d, d> F (x∗ )d ≥ 0. Hence, x∗ = [2, 0]> satisfies the second order

necessary condition.

c. Yes, x∗ is a local minimizer. To see this, notice that any feasible point x = [x1 , x2 ]> 6= x∗ is such that

x1 < 2. Hence, for such points x 6= x∗ ,

f (x) = −3x1 > −6 = f (x∗ ).

In fact, x∗ is a strict local minimizer.

6.14

a. We have ∇f (x) = [0, 1], which is nonzero everywhere. Hence, no interior point satisfies the FONC.

Moreover, any boundary point with a feasible direction d such that d2 < 0 cannot be satisfy the FONC,

because for such a d, d> ∇f (x) = d2 < 0. By drawing a picture, it is easy to see that the only boundary

point remaining is x∗ = [0, 1]> . For this point, any feasible direction satisfies d2 ≥ 0. Hence, for any feasible

direction, d> ∇f (x∗ ) = d2 ≥ 0. Hence, x∗ = [0, 1]> satisfies the FONC, and is the only such point.

b. We have F (x) = O. So any point (and in particular x∗ = [0, 1]> ) satisfies the SONC.

p

c. The point x∗ = [0, 1]> is not a local minimizer. To see this, consider points of the form x = [ 1 − x22 , x2 ]>

where x2 ∈ [1/2, 1). It is clear that such points are feasible, and are arbitrarily close to x∗ = [0, 1]> . However,

for such points, f (x) = x2 < 1 = f (x∗ ).

24

6.15

a. We have ∇f (x∗ ) = [3, 0]> . The only feasible directions at x∗ are of the form d = [d1 , d2 ]> with d1 ≥ 0.

Therefore, for such feasible directions, d> ∇f (x∗ ) = 3d1 ≥ 0. Hence, x∗ = [2, 0]> satisfies the first order

necessary condition.

b. We have F (x∗ ) = O. Therefore, for any d, d> F (x∗ )d ≥ 0. Hence, x∗ = [2, 0]> satisfies the second order

necessary condition.

c. Consider points of the form x = [−x22 + 2, x2 ]> , x2 ∈ R. Such points are in Ω, and could be arbitrarily

close to x∗ . However, for such points x 6= x∗ ,

f (x) = 3(−x22 + 2) = 6 − 6x22 < 6 = f (x∗ ).

Hence, x∗ is not a local minimizer.

6.16

a. We have ∇f (x∗ ) = 0. Therefore, for any feasible direction d at x∗ , we have d> ∇f (x∗ ) = 0. Hence, x∗

satisfies the first-order necessary condition.

b. We have

"

8

F (x∗ ) =

0

#

0

.

−2

Any feasible direction d at x∗ has the form d = [d1 , d2 ]> where d2 ≤ 2d1 , d1 , d2 ≥ 0. Therefore, for any

feasible direction d at x∗ , we have

d> F (x∗ )d = 8d21 − 2d22 ≥ 8d21 − 2(2d1 )2 = 0.

Hence, x∗ satisfies the second-order necessary condition.

c. We have f (x∗ ) = 0. Any point of the form x = [x1 , x21 + 2x1 ], x1 > 0, is feasible and has objective

function value given by

f (x) = 4x21 − (x21 + 2x1 )2 = −(x41 + 4x31 ) < 0 = f (x∗ ),

Moreover, there are such points in any neighborhood of x∗ . Therefore, the point x∗ is not a local minimizer.

6.17

a. We have ∇f (x∗ ) = [1/x∗1 , 1/x∗2 ]> . If x∗ were an interior point, then ∇f (x∗ ) = 0. But this is clearly

impossible. Therefore, x∗ cannot possibly be an interior point.

b. We have F (x) = − diag[1/x21 , 1/x22 ], which is negative definite everywhere. Therefore, the second-order

necessary condition is satisfied everywhere. (Note that because we have a maximization problem, negative

definiteness is the relevant condition.)

6.18

Given x ∈ R, let

f (x) =

n

X

(x − xi )2 ,

i=1

so that x̄ is the minimizer of f . By the FONC,

f 0 (x̄) = 0,

and hence

n

X

2(x̄ − xi ) = 0,

i=1

which on solving gives

n

1X

x̄ =

xi .

n i=1

25

6.19

Let θ1 be the angle from the horizontal to the bottom of the picture, and θ2 the angle from the horizontal

to the top of the picture. Then, tan(θ) = (tan(θ2 ) − tan(θ1 ))/(1 + tan(θ2 ) tan(θ1 )). Now, tan(θ1 ) = b/x and

tan(θ2 ) = (a + b)/x. Hence, the objective function that we wish to maximize is

f (x) =

We have

(a + b)/x − b/x

a

=

.

2

1 + b(a + b)/x

x + b(a + b)/x

a2

f (x) = −

(x + b(a + b)/x)2

0

b(a + b)

1−

x2

.

Let x∗ be the optimal distance. Then, by the FONC, we have f 0 (x∗ ) = 0, which gives

1−

b(a + b)

(x∗ )2

=

x∗

=

⇒

0

p

b(a + b).

6.20

The squared distance from the sensor to the baby’s heart is 1 + x2 , while the squared distance from the

sensor to the mother’s heart is 1 + (2 − x)2 . Therefore, the signal to noise ratio is

f (x) =

1 + (2 − x)2

.

1 + x2

We have

f 0 (x)

−2(2 − x)(1 + x2 ) − 2x(1 + (2 − x)2 )

(1 + x2 )2

2

4(x − 2x − 1)

.

(1 + x2 )2

=

=

By the FONC, at the optimal position x∗ , we√have f 0 (x∗ ) = 0. Hence, either x∗ = 1 −

From the figure, it easy to see that x∗ = 1 − 2 is the optimal position.

√

2 or x∗ = 1 +

6.21

a. Let x be the decision variable. Write the total travel time as f (x), which is given by

p

√

1 + (d − x)2

1 + x2

f (x) =

+

.

v1

v2

Differentiating the above expression, we get

f 0 (x) =

v1

√

x

d−x

− p

.

2

1+x

v2 1 + (d − x)2

By the first order necessary condition, the optimal path satisfies f 0 (x∗ ) = 0, which corresponds to

x∗

d − x∗

p

= p

,

v1 1 + (x∗ )2

v2 1 + (d − x∗ )2

or sin θ1 /v1 = sin θ2 /v2 . Upon rearranging, we obtain the desired equation.

b. The second derivative of f is given by

f 00 (x) =

1

1

+

.

2

3/2

v1 (1 + x )

v2 (1 + (d − x)2 )3/2

Hence, f 00 (x∗ ) > 0, which shows that the second order sufficient condition holds.

26

√

2.

6.22

a. We have f (x) = U1 (x1 ) + U2 (x2 ) and Ω = {x : x1 , x2 ≥ 0, x1 + x2 ≤ 1}. A picture of Ω looks like:

x2

1 0

1

x1

b. We have ∇f (x) = [a1 , a2 ]> . Because ∇f (x) 6= 0, for all x, we conclude that no interior point satisfies

the FONC. Next, consider any feasible point x for which x2 > 0. At such a point, the vector d = [1, −1]>

is a feasible direction. But then d> ∇f (x) = a1 − a2 > 0 which means that FONC is violated (recall that

the problem is to maximize f ). So clearly the remaining candidates are those x for which x2 = 0. Among

these, if x1 < 1, then d = [0, 1]> is a feasible direction, in which case we have d> ∇f (x) = a2 > 0. This

leaves the point x = [1, 0]> . At this point, any feasible direction d satisfies d1 ≤ 0 and d2 ≤ −d1 . Hence,

for any feasible direction d, we have

d> ∇f (x) = d1 a1 + d2 a2 ≤ d1 a1 + (−d1 )a2 = d1 (a1 − a2 ) ≤ 0.

So, the only feasible point that satisfies the FONC is [1, 0]> .

c. We have F (x) = O ≤ 0. Hence, any point satisfies the SONC (again, recall that the problem is to

maximize f ).

6.23

We have

"

#

4(x1 − x2 )3 + 2x1 − 2

∇f (x) =

.

−4(x1 − x2 )3 − 2x2 + 2

Setting ∇f (x) = 0 we get

4(x1 − x2 )3 + 2x1 − 2

=

0

3

=

0.

−4(x1 − x2 ) − 2x2 + 2

Adding the two equations, we obtain x1 = x2 , and substituting back yields

x1 = x2 = 1.

Hence, the only point satisfying the FONC is [1, 1]> .

We have

"

12(x1 − x2 )2 + 2

F (x) =

−12(x1 − x2 )2

Hence

"

2

F ([1, 1] ) =

0

>

#

−12(x1 − x2 )2

.

12(x1 − x2 )2 − 2

0

−2

#

Since F ([1, 1]> ) is not positive semidefinite, the point [1, 1]> does not satisfy the SONC.

6.24

Suppose d is a feasible direction at x. Then, there exists α0 > 0 such that x + αd ∈ Ω for all α ∈ [0, α0 ].

Let β > 0 be given. Then, x + α(βd) ∈ Ω for all α ∈ [0, α0 /β]. Since α0 /β > 0, by definition βd is also a

feasible direction at x.

6.25

⇒: Suppose d is feasible at x ∈ Ω. Then, there exists α > 0 such that x + αd ∈ Ω, that is, A(x + αd) = b.

Since Ax = b and α 6= 0, we conclude that Ad = 0.

27

⇐: Suppose Ad = 0. Then, for any α ∈ [0, 1], we have αAd = 0. Adding this equation to Ax = b, we

obtain A(x + αd) = b, that is, x + αd ∈ Ω for all α ∈ [0, 1]. Therefore, d is a feasible direction at x.

6.26

The vector d = [1, 1]> is a feasible direction at 0. Now,

d> ∇f (0) =

∂f

∂f

(0) +

(0).

∂x1

∂x2

Since ∇f (0) ≤ 0 and ∇f (0) 6= 0, then

d> ∇f (0) < 0.

Hence, by the FONC, 0 is not a local minimizer.

6.27

◦

◦

We have ∇f (x) = c 6= 0. Therefore, for any x ∈Ω, we have ∇f (x) 6= 0. Hence, by Corollary 6.1, x ∈Ω

cannot be a local minimizer (and therefore it cannot be a solution).

6.28

The objective function is f (x) = −c1 x1 − c2 x2 . Therefore, ∇f (x) = [−c1 , −c2 ]> 6= 0 for all x. Thus,

S by

FONC, the optimal solution x∗ cannot lie in the interior of the feasible set. Next, for all x ∈ L1 L2 ,

d = [1, 1]> is a feasible direction.

Therefore, d> ∇f (x) = −c1 − c2 < 0. Hence, by FONC, the optimal

S

solution x∗ cannot lie in L1 L2 . Lastly, for all x ∈ L3 , d = [1, −1]> is a feasible direction. Therefore,

d> ∇f (x) = c2 − c1 < 0. Hence, by FONC, the optimal solution x∗ cannot lie in L3 . Therefore, by

elimination, the unique optimal feasible solution must be [1, 0]> .

6.29

a. We write

n

1X 2 2

a xi + b2 + yi2 + 2xi ab − 2xi yi a − 2yi b

f (a, b) =

n i=1

!

!

n

n

1X 2

1X

2

2

= a

x +b +2

xi ab

n i=1 i

n i=1

!

!

!

n

n

n

1X

1X 2

1X

xi yi a − 2

yi b +

y

−2

n i=1

n i=1

n i=1 i

#" #

" P

Pn

n

1

x2i n1 i=1 xi a

i=1

n

= [a b] 1 Pn

1

b

i=1 xi

n

" n

#

"

#

n

n

1X

1X

1X 2

a

−2

xi yi ,

yi

y

+

n i=1

n i=1

n i=1 i

b

= z > Qz − 2c> z + d,

where z, Q, c and d are defined in the obvious way.

b. If the point z ∗ = [a∗ , b∗ ]> is a solution, then byPthe FONC, we have ∇f (z ∗ ) = 2Qz ∗ − 2c = 0,

n

which means Qz ∗ = c. Now, since X 2 − (X)2 = n1 i=1 (xi − X)2 , and the xi are not all equal, then

det Q = X 2 − (X)2 6= 0. Hence, Q is nonsingular, and hence

"

#"

# XY −(X)(Y )

1

XY

1

−X

X 2 −(X)2

.

z ∗ = Q−1 c =

= (X 2 )(Y

)−(X)(XY )

2

2

2

−X

X

Y

X − (X)

2

2

X −(X)

Since Q > 0, then by the SOSC, the point z ∗ is a strict local minimizer. Since z ∗ is the only point satisfying

the FONC, then z ∗ is the only local minimizer.

c. We have

a∗ X + b∗ =

XY − (X)(Y )

X2

−

(X)2

X+

28

(X 2 )(Y ) − (X)(XY )

X 2 − (X)2

=Y.

6.30

Given x ∈ Rn , let

p

f (x) =

1X

kx − x(i) k2

p i=1

be the average squared error between x and x(1) , . . . , x(p) . We can rewrite f as

p

f (x)

1X

(x − x(i) )> (x − x(i) )

p i=1

!>

p

1

1 X (i)

>

x + kx(i) k2 .

x

= x x−2

p i=1

p

=

So f is a quadratic function. Since x̄ is the minimizer of f , then by the FONC, ∇f (x̄) = 0, i.e.,

p

2x̄ − 2

1 X (i)

x = 0.

p i=1

Hence, we get

p

1 X (i)

x̄ =

x ,

p i=1

i.e., x̄ is just the average, or centroid, or center of gravity, of x(1) , . . . , x(p) .

The Hessian of f at x̄ is

F (x̄) = 2I n ,

which is positive definite. Hence, by the SOSC, x̄ is a strict local minimizer of f (in fact, it is a strict global

minimizer because f is a convex quadratic function).

6.31

Fix any x ∈ Ω. The vector d = x − x∗ is feasible at x∗ (by convexity of Ω). By Taylor’s formula, we have

f (x) = f (x∗ ) + d> ∇f (x∗ ) + o(kdk) ≥ f (x∗ ) + ckdk + o(kdk).

Therefore, for all x sufficiently close to x∗ , we have f (x) > f (x∗ ). Hence, x∗ is a strict local minimizer.

6.32

Since f ∈ C 2 , F (x∗ ) = F > (x∗ ). Let d 6= 0 be a feasible directions at x∗ . By Taylor’s theorem,

f (x∗ + d) − f (x∗ ) =

1 >

d ∇f (x∗ ) + d> F (x∗ )d + o(kdk2 ).

2

Using conditions a and b, we get

f (x∗ + d) − f (x∗ ) ≥ ckdk2 + o(kdk2 ),

Therefore, for all d such that kdk is sufficiently small,

f (x∗ + d) > f (x∗ ),

and the proof is completed.

6.33

Necessity follows from the FONC. To prove sufficiency, we write f as

f (x) =

1

1

(x − x∗ )> Q(x − x∗ ) − x∗> Qx∗

2

2

where x∗ = Q−1 b is the unique vector satisfying the FONC. Clearly, since

Q > 0, then

1

f (x) ≥ f (x∗ ) = − x∗> Qx∗ ,

2

29

1 ∗>

Qx∗

2x

is a constant, and

and f (x) = f (x∗ ) if and only if x = x∗ .

6.34

Write u = [u1 , . . . , un ]. We have

xn

= axn−1 + bun

= a(axn−2 + bun−1 ) + bun

= a2 xn−2 + abun−1 + bun

..

.

= an x0 + an−1 bu1 + · · · + abun−1 + bun

= c> u,

where c = [an−1 b, . . . , ab, b]> . Therefore, the problem can be written as

minimize ru> u − qc> u,

which is a positive definite quadratic in u. The solution is therefore

u=

q

c,

2r

or, equivalently, ui = qan−i b/(2r), i = 1, . . . , n.

7. One Dimensional Search Methods

7.1

√

The range reduction factor for 3 iterations of the Golden Section method is (( 5−1/2)3 = 0.236, while that of

the Fibonacci method (with ε = 0) is 1/F3+1 = 0.2. Hence, if the desired range reduction factor is anywhere

between 0.2 and 0.236 (e.g., 0.21), then the Golden Section method requires at least 4 iterations, while the

Fibonacci method requires only 3. So, an example of a desired final uncertainty range is 0.21×(8−5) = 0.63.

7.2

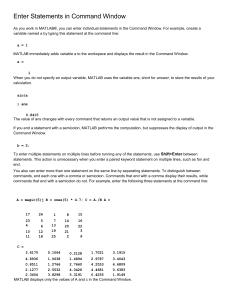

a. The plot of f (x) versus x is as below:

3.2

3.1

3

2.9

f(x)

2.8

2.7

2.6

2.5

2.4

2.3

1

1.1

1.2

1.3

1.4

1.5

x

1.6

1.7

1.8

1.9

2

b. The number of steps needed for the Golden Section method is computed from the inequality:

0.61803N ≤

0.2

2−1

⇒

30

N ≥ 3.34.

Therefore, the fewest possible number of steps is 4. Applying 4 steps of the Golden Section method, we end

up with an uncertainty interval of [a4 , b0 ] = [1.8541, 2.000]. The table with the results of the intermediate

steps is displayed below:

Iteration k

ak

bk

f (ak )

f (bk )

New uncertainty interval

1

2

3

4

1.3820

1.6180

1.7639

1.8541

1.6180

1.7639

1.8541

1.9098

2.6607

2.4292

2.3437

2.3196

2.4292

2.3437

2.3196

2.3171

[1.3820,2]

[1.6180,2]

[1.7639,2]

[1.8541,2]

c. The number of steps needed for the Fibonacci method is computed from the inequality:

1 + 2ε

0.2

≤

FN +1

2−1

⇒

N ≥ 4.

Therefore, the fewest possible number of steps is 4. Applying 4 steps of the Fibonacci method, we end up

with an uncertainty interval of [a4 , b0 ] = [1.8750, 2.000]. The table with the results of the intermediate steps

is displayed below:

Iteration k

ρk

ak

bk

f (ak )

f (bk )

New unc. int.

1

2

3

4

0.3750

0.4

0.3333

0.45

1.3750

1.6250

1.7500

1.8750

1.6250

1.7500

1.8750

1.8875

2.6688

2.4239

2.3495

2.3175

2.4239

2.3495

2.3175

2.3169

[1.3750,2]

[1.6250,2]

[1.7500,2]

[1.8750,2]

d. We have f 0 (x) = 2x − 4 sin x, f 00 (x) = 2 − 4 cos x. Hence, Newton’s algorithm takes the form:

x(k+1) = x(k) −

x(k) − 2 sin x(k)

.

1 − 2 cos x(k)

Applying 4 iterations with x(0) = 1, we get x(1) = −7.4727, x(2) = 14.4785, x(3) = 6.9351, x(4) = 16.6354.

Apparently, Newton’s method is not effective in this case.

7.3

a. We first create the M-file f.m as follows:

% f.m

function y=f(x)

y=8*exp(1-x)+7*log(x);

The MATLAB commands to plot the function are:

fplot(’f’,[1 2]);

xlabel(’x’);

ylabel(’f(x)’);

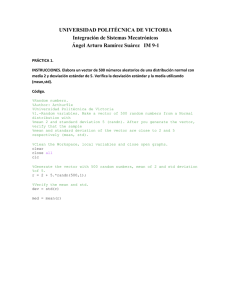

The resulting plot is as follows:

31

8

7.95

7.9

f(x)

7.85

7.8

7.75

7.7

7.65

1

1.1

1.2

1.3

1.4

1.5

x

1.6

1.7

1.8

1.9

2

b. The MATLAB routine for the Golden Section method is:

%Matlab routine for Golden Section Search

left=1;

right=2;

uncert=0.23;

rho=(3-sqrt(5))/2;

N=ceil(log(uncert/(right-left))/log(1-rho)) %print N

lower=’a’;

a=left+(1-rho)*(right-left);

f_a=f(a);

for i=1:N,

if lower==’a’

b=a

f_b=f_a

a=left+rho*(right-left)

f_a=f(a)

else

a=b

f_a=f_b

b=left+(1-rho)*(right-left)

f_b=f(b)

end %if

if f_a<f_b

right=b;

lower=’a’

else

left=a;

lower=’b’

end %if

New_Interval = [left,right]

end %for i

%------------------------------------------------------------------

Using the above routine, we obtain N = 4 and a final interval of [1.528, 1.674]. The table with the results

of the intermediate steps is displayed below:

32

Iteration k

ak

bk

f (ak )

f (bk )

New uncertainty interval

1

2

3

4

1.382

1.618

1.528

1.618

1.618

1.764

1.618

1.674

7.7247

7.6805

7.6860

7.6805

7.6805

7.6995

7.6805

7.6838

[1.382,2]

[1.382,1.764]

[1.528,1.764]

[1.528,1.674]

c. The MATLAB routine for the Fibonacci method is:

%Matlab routine for Fibonacci Search technique

left=1;

right=2;

uncert=0.23;

epsilon=0.05;

F(1)=1;

F(2)=1;

N=0;

while F(N+2) < (1+2*epsilon)*(right-left)/uncert

F(N+3)=F(N+2)+F(N+1);

N=N+1;

end %while

N %print N

lower=’a’;

a=left+(F(N+1)/F(N+2))*(right-left);

f_a=f(a);

for i=1:N,

if i~=N

rho=1-F(N+2-i)/F(N+3-i)

else

rho=0.5-epsilon

end %if

if lower==’a’

b=a

f_b=f_a

a=left+rho*(right-left)

f_a=f(a)

else

a=b

f_a=f_b

b=left+(1-rho)*(right-left)

f_b=f(b)

end %if

if f_a<f_b

right=b;

lower=’a’

else

left=a;

lower=’b’

end %if

New_Interval = [left,right]

end %for i

%------------------------------------------------------------------

33

Using the above routine, we obtain N = 3 and a final interval of [1.58, 1.8]. The table with the results of

the intermediate steps is displayed below:

7.4

Now, ρk = 1 −

Iteration k

ρk

ak

bk

f (ak )

f (bk )

New uncertainty interval

1

2

3

0.4

0.333

0.45

1.4

1.6

1.58

1.6

1.8

1.6

7.7179

7.6805

7.6812

7.6805

7.7091

7.6805

[1.4,2]

[1.4,1.8]

[1.58,1.8]

FN −k+1

FN −k+2 .

Hence,

1−

ρk

1 − ρk

1 − FN −k+1 /FN −k+2

FN −k+1 /FN −k+2

FN −k+2 − FN −k+1

= 1−

FN −k+1

FN −k

= 1−

FN −k+1

= ρk+1

=

1−

To show that 0 ≤ ρk ≤ 1/2, we proceed by induction. Clearly ρ1 = 1/2 satisfies 0 ≤ ρ1 ≤ 1/2. Suppose

0 ≤ ρk ≤ 1/2, where k ∈ {1, . . . , N − 1}. Then,

1

≤ 1 − ρk ≤ 1

2

and hence

1≤

1

≤ 2.

1 − ρk

Therefore,

1

ρk

≤

≤ 1.

2

1 − ρk

Since ρk+1 = 1 −

ρk

1−ρk ,

then

0 ≤ ρk+1 ≤

1

2

as required.

7.5

We proceed by induction. For k = 2, we have F0 F3 − F1 F2 = (1)(3) − (1)(2) = 1 = (−1)2 . Suppose

Fk−2 Fk+1 − Fk−1 Fk = (−1)k . Then,

Fk−1 Fk+2 − Fk Fk+1

= Fk−1 (Fk+1 + Fk ) − (Fk−1 + Fk−2 )Fk+1

= Fk−1 Fk − Fk−2 Fk+1

= −(−1)k

= (−1)k+1 .

7.6

Define yk = Fk and zk = Fk−1 . Then, we have

"

#

" #

yk+1

yk

=A

,

zk+1

zk

where

"

1

A=

1

34

#

1

,

0

with initial condition

"

We can write

# " #

y0

1

=

.

z0

0

"

" #

#

yn

n 1

Fn = yn = [1, 0]

= [1, 0]A

.

zn

0

Since A is symmetric, it can be diagonalized as

" #"

u> λ 1

A=

v>

0

where

and

#

0 h

u

λ2

v

i

√

1− 5

λ2 =

,

2

√

1+ 5

,

λ1 =

2

q √

1 2/( 5 − 1)

u = − 1/4 q √

,

5

( 5 − 1)/2

q√

1 ( 5 − 1)/2

q √

v = 1/4

.

5

− 2/( 5 − 1)

Therefore, we have

"

Fn

= u>

λ1

0

0

λ2

#n

u

= u21 λn1 + u22 λn2

√ !n+1

1 1+ 5

−

= √

2

5

√ !n+1

1− 5

.

2

7.7

The number log(2) is the root of the equation g(x) = 0, where g(x) = exp(x) − 2. The derivative of g is

g 0 (x) = exp(x). Newton’s method applied to this root finding problem is

x(k+1) = x(k) −

exp(x(k) ) − 2

= x(k) − 1 + 2 exp(−x(k) ).

exp(x(k) )

Performing two iterations, we get x(1) = 0.7358 and x(2) = 0.6940.

7.8

a. We compute g 0 (x) = 2ex /(ex + 1)2 . Therefore Newton’s method of tangents for this problem takes the

form

(k)

x(k+1)

= x(k) −

(k)

(ex − 1)/(ex + 1)

2ex(k) /(ex(k) + 1)2

(k)

(e2x − 1)

2ex(k)

(k)

= x − sinh x(k) .

= x(k) −

b. By symmetry, we need x(1) = −x(0) for cycling. Therefore, x(0) must satisfy

−x(0) = x(0) − sinh x(0) .

The algorithm cycles if x(0) = c, where c > 0 is the solution to 2c = sinh c.

35

c. The algorithm converges to 0 if and only if |x(0) | < c, where c is from part b.

7.9

The quadratic function that matches the given data x(k) , x(k−1) , x(k−2) , f (x(k) ), f (x(k−1) ), and f (x(k−2) )

can be computed by solving the following three linear equations for the parameters a, b, and c:

a(x(k−i) )2 + bx(k−i) + c = f (x(k−i) ),

i = 0, 1, 2.

Then, the algorithm is given by x(k+1) = −b/2a (so, in fact, we only need to find the ratio of a and b).

With some elementary algebra (e.g., using Cramer’s rule without needing to calculate the determinant in

the denominator), the algorithm can be written as:

x(k+1) =

σ12 f (x(k) ) + σ20 f (x(k−1) ) + σ01 f (x(k−2) )

2(δ12 f (x(k) ) + δ20 f (x(k−1) ) + δ01 f (x(k−2) ))

where σij = (x(k−i) )2 − (x(k−j) )2 and δij = x(k−i) − x(k−j) .

7.10

a. A MATLAB routine for implementing the secant method is as follows.

function [x,v] = secant(g,xcurr,xnew,uncert);

%Matlab routine for finding root of g(x) using secant method

%

% secant;

% secant(g);

% secant(g,xcurr,xnew);

% secant(g,xcurr,xnew,uncert);

%

% x=secant;

% x=secant(g);

% x=secant(g,xcurr,xnew);

% x=secant(g,xcurr,xnew,uncert);

%

% [x,v]=secant;

% [x,v]=secant(g);

% [x,v]=secant(g,xcurr,xnew);

% [x,v]=secant(g,xcurr,xnew,uncert);

%

%The first variant finds the root of g(x) in the M-file g.m, with

%initial conditions 0 and 1, and uncertainty 10^(-5).

%The second variant finds the root of the function in the M-file specified

%by the string g, with initial conditions 0 and 1, and uncertainty 10^(-5).

%The third variant finds the root of the function in the M-file specified

%by the string g, with initial conditions specified by xcurr and xnew, and

%uncertainty 10^(-5).

%The fourth variant finds the root of the function in the M-file specified

%by the string g, with initial conditions specified by xcurr and xnew, and

%uncertainty specified by uncert.

%

%The next four variants returns the final value of the root as x.

%The last four variants returns the final value of the root as x, and

%the value of the function at the final value as v.

if nargin < 4

uncert=10^(-5);

if nargin < 3

if nargin == 1

xcurr=0;

xnew=1;

elseif nargin == 0

36

g=’g’;

else

disp(’Cannot have 2 arguments.’);

return;

end

end

end

g_curr=feval(g,xcurr);

while abs(xnew-xcurr)>xcurr*uncert,

xold=xcurr;

xcurr=xnew;

g_old=g_curr;

g_curr=feval(g,xcurr);

xnew=(g_curr*xold-g_old*xcurr)/(g_curr-g_old);

end %while

%print out solution and value of g(x)

if nargout >= 1

x=xnew;

if nargout == 2

v=feval(g,xnew);

end

else

final_point=xnew

value=feval(g,xnew)

end %if

%------------------------------------------------------------------

b. We get a solution of x = 0.0039671, with corresponding value g(x) = −9.908 × 10−8 .

7.11

function alpha=linesearch_secant(grad,x,d)

%Line search using secant method

epsilon=10^(-4); %line search tolerance

max = 100; %maximum number of iterations

alpha_curr=0;

alpha=0.001;

dphi_zero=feval(grad,x)’*d;

dphi_curr=dphi_zero;

i=0;

while abs(dphi_curr)>epsilon*abs(dphi_zero),

alpha_old=alpha_curr;

alpha_curr=alpha;

dphi_old=dphi_curr;

dphi_curr=feval(grad,x+alpha_curr*d)’*d;

alpha=(dphi_curr*alpha_old-dphi_old*alpha_curr)/(dphi_curr-dphi_old);

i=i+1;

if (i >= max) & (abs(dphi_curr)>epsilon*abs(dphi_zero)),

disp(’Line search terminating with number of iterations:’);

disp(i);

break;

end

end %while

%------------------------------------------------------------------

37

7.12

a. We could carry out the bracketing using the one-dimensional function φ0 (α) = f (x(0) + αd(0) ), where

d(0) is the negative gradient at x(0) , as described in Section 7.8. The decision variable would be α. However,

here we will directly represent the points in R2 (which is equivalent, though unnecessary in general).

The uncertainty interval is calculated by the following procedure:

"

#

"

#

1 > 2 1

2 1

x,

∇f (x) =

x

f (x) = x

2

1 2

1 2

Therefore,

"

1

2

"

0.8

−0.25

2

d = −∇f (x(0) ) = −

1

#"

# "

#

0.8

−1.35

=

−0.25

−0.3

As the problem requires, we use ε = 0.075.

First, we begin calculating f (x(0) ) and x(1) :

f (x(0) ) = f

#!

= 0.5025,

"

x(1)

#

"

# "

#

0.8

−1.35

0.6987

= x(0) + εd =

+ 0.075

=

−0.25

−0.3

−0.2725

Then, we proceed as follows to find the uncertainty interval:

"

#!

0.6987

(1)

f (x ) = f

= 0.3721

−0.2725

"

#

"

# "

#

0.8

−1.35

0.5975

x = x + 2εd =

+ 0.15

=

−0.25

−0.3

−0.2950

"

#!

0.5975

f (x(2) ) = f

= 0.2678

−0.2950

"

#

"

# "

#

0.8

−1.35

0.3950

(3)

(0)

x = x + 4εd =

+ 0.3

=

−0.25

−0.3

−0.3400

"

#!

0.3950

f (x(3) ) = f

= 0.1373

−0.3400

"

#

"

# "

#

0.8

−1.35

−0.0100

(4)

(0)

x = x + 8εd =

+ 0.6

=

−0.25

−0.3

−0.4300

"

#!

−0.0100

f (x(4) ) = f

= 0.1893

−0.4300

(2)

(0)

Between f (x(3) ) and ""

f (x(4) ) the# function

increases,

which

"

##

" means

# that the minimizer must occur on the

0.5975

−0.0100

−1.35

interval [x(2) , x(4) ] =

,

, with d =

.

−0.2950

−0.4300

−0.3

MATLAB code to solve the problem is listed next.

% Coded by David Schvartzman

38

% In our case we have:

Q = [2 1; 1 2];

x0 = [0.8; -.25];

e = 0.075;

f = zeros(1,10);

X = zeros(2,10);

x1 = x0;

d = -Q*x1;

f(1) = 0.5*(x1’)*Q*x1;

for i=2:10

X(:,i) = x1+e*d;

f(i) = 0.5*X(:,i)’*Q*X(:,i);

e = 2*e;