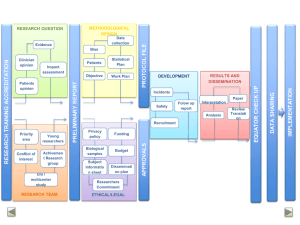

Being Ethical: How to Beat Bias in AI and Be Transparent

IN ASSOCIATION WITH: Intel

TABLE OF CONTENTS
INTRODUCTION
THE IMPORTANCE OF DATA
THE ROAD TOWARD ETHICAL AI
THE FUTURE IS ETHICAL
METHODOLOGY
ACKNOWLEDGMENTS

COPYRIGHT © 2019 FORBES INSIGHTS

INTRODUCTION

Few leaders in business and government dispute the power of artificial intelligence (AI) to change the world. Transformation and disruption are, after all, already happening in ways large and small, visible and invisible, across the business world and society at large, at unprecedented speed. The question now is: How do we manage AI for the common good?

The issues at the heart of ethics in AI have been percolating over the past few years as companies across industries have begun to deploy, and make decisions based on, machine learning (ML) applications like image recognition and natural language processing (NLP). The benefits and potential to be unlocked are staggering, but it’s becoming increasingly apparent that many machine-driven decisions (and the collection and use of data to train and fuel them) are happening outside of full human control or without established best practices. Among tech-forward brands and leaders, there is a growing call to arms for fairness, accountability and transparency.

The urgency around these issues runs deep. After all, any business wants its decisions, especially the ones that have a significant impact on individual customers, to be accurate and free of bias. Business leaders and regulators need to know the why behind a machine-driven decision, and end customers want to see transparency in this process and accountability for the results. AI can be a boon for this if it is explainable.

“We’re seeing a gradual recognition that, with the deployment of more AI, ethical issues should be considered,” says Kay Firth-Butterfield, head of AI and machine learning at the World Economic Forum.
“If we don’t establish firm foundations around the technology, we will just build more problems for ourselves. We have to tackle the core issues around the deployment of AI for the benefit of humanity.”

AI is now at a crossroads, and best practices and guidelines are needed to guide these powerful technologies down the right path. Many organizations are already taking action: 79% said they’re employing tactics to ensure algorithms are built without bias, like having diverse teams working on their development, according to a Forbes Insights survey of more than 500 executives. But more needs to be done. Fewer than half have revised their data policies to be proactive on transparency, and even fewer have appointed a data steward and/or ethics engineer to oversee the results of their AI models.

This report is about the challenges business leaders face in building ethical systems and how to go about solving them.

THE IMPORTANCE OF DATA

Kathy Baxter is a prominent voice in the development of ethical technology. As the architect of the ethical AI practice at Salesforce, she develops research-informed best practices to educate Salesforce employees, customers and the industry on the development of ethical AI. Like Firth-Butterfield, she sees plenty of discussion and awareness of ethical issues. “But we’re not seeing as much action as I might hope, and part of the problem,” she says, “is that building ethical AI is hard—it’s a pretty tough nut to crack.”

Data is at the heart of ethics in AI for obvious reasons: it’s the fuel that makes the engine run. Solving the challenges around data, which every business should do, will go a long way toward solving for ethics, notably the core concerns around bias and privacy. As seen in Figure 1, the Forbes Insights survey reflects the challenge behind this priority.
Forty-four percent say that managing data in a privacy-compliant environment is a challenge, and almost half (47%) report that determining the right data to collect and analyze is another barrier.

AI technologies aren’t smart enough (yet) to weed out the biases either programmed into them or fed into their systems through poor training data: data that isn’t accurate or inclusive (or exclusive, if relevant) for the model being designed. The bias that finds its way into algorithms is an issue for humans and society to solve.

“We can’t expect AI, as amazing as it is, to remove bias that exists in our society. We have hundreds of years of decisions being made either implicitly or explicitly against certain groups,” Baxter says. “As long as that exists, it would be simply naive to think that any technology, including AI, could solve the bias that exists in our technology.”

Figure 1: Data Challenges

With respect to data, which of these statements reflect your view of your organization’s state of readiness for effective AI? (Select all that apply)
• Ethical issues come into play when you’re capturing and using personal and product data, which must be addressed and solved for in order to see the continued advancement of AI technologies and IoT: 47%
• There’s a gap between the goals or vision for AI technologies at our organization and our ability to capture the data we want: 45%
• We must buy most of the data we use to gain insights and/or train algorithms: 29%
• Low-quality, incomplete or inaccurate data is holding us back from effective AI deployments: 23%
• We don’t have access to quality data from the source (e.g., sensors on devices, machinery, real-time consumer behavior): 21%

What specific challenges does your organization have with extracting value from the data you already have? (Select all that apply)
• Determining the right data to collect and analyze: 47%
• Managing data in a privacy-compliant environment: 44%
• Measuring and benchmarking data quality and accuracy: 43%
• Integrating multiple sources of data: 42%
• Prioritizing data to generate insights: 39%
• Obtaining fresh, relevant data: 37%
• Characterizing data segments: 33%

Baxter cites vehicle crash test dummies as an example of an unwitting societal bias that ends up baked into product design: more specifically, a sampling bias that excludes an entire gender. The standard in accident testing for decades was a dummy modeled after the average male body. But a 2011 study by the University of Virginia’s Center for Applied Biomechanics found that, in one car model, women had a 47% greater chance of being injured in a 35-mph collision.¹ The design of the vehicle had not factored in the physiology of the female body. Because the crash test dummies were all modeled on men, the data was built around an assumption that proved to be wrong, unfair and dangerous.

“They didn’t have a similar female weight and height and dimension crash test dummy,” Baxter says. “Once they started using a female crash test dummy, the results looked very different in evaluations of cars.”

Bias, she notes, doesn’t have to be explicit. “It comes as default thinking that has existed in different industries for many decades. If we want to come up with a solution that creates a world we want to see, not the world as it exists, we’re going to have to put a lot of resources into making that happen.”

Here are two big steps to improve the data you’re working with and limit the possibility of introducing bias in your AI systems.

1. Clean training data. The design of the model is important, “and then, of course, the training data is a big source of bias in AI,” Baxter says. “That’s where history creates that bias that we see in the outcome. If you train a model to make recommendations for loan applications based on how you’ve always approved loans, it’s just going to replicate and magnify what humans already did.”

2. Monitor the impact. Although Salesforce doesn’t collect data from consumers, it works with its clients to help them understand the implications of the data they feed into Einstein, the company’s flagship software program. “They may not have thought about the data they’ve been collecting over years or decades in terms of bias,” Baxter says. “Having to go through and look at their data in a very different light is something new for them. We will raise a flag if we believe a variable might lead to bias, and we find ways to provide in-app education to empower customers in the moment to understand their models and the implications of their decisions.”

Yet the first step, cleaning your training data, is easier said than done. How do you actually go about it so it doesn’t bias the outcomes? Baxter points to the big W’s of data quality and accuracy as the road to finding and eliminating bias. For each of the questions below, consider why there is a challenge and get to the root of the problem.

• WHERE IS YOUR DATA COMING FROM? That question is just the tip of the ethics iceberg. Ask who was involved in getting your data and who interacted with it.
Look at the sources (is this first-party data or third-party data from a vendor?) and at who collected, categorized or labeled it. All of this information may affect the credibility of the data you’re feeding into your algorithms.

• WHEN IS YOUR DATA COMING? This is about data freshness, which 37% of executives say is challenging to obtain (Figure 1). Digging deeper, consider the impact of an organization’s decision if the data used in its AI is old: hours or days old, or even weeks or months. Decisions based on the AI might be inaccurate; worse, they might be unfair to the customer. At scale, the bias from such errors can have a big impact on results and even on brand reputation.

• WHO IS THE DATA FROM OR ABOUT? This is about representation: making sure your data sets reflect the full and accurate panorama of your target customers or other users. Training data that is missing a subset or specific type of target will produce skewed results that, again, may have unintended consequences.

• WHAT VARIABLES ARE YOU USING? This is where using or failing to use categories like race, ethnicity, gender, age, sexual orientation and other data can produce biased results. Think back to the crash test dummy example, which produced bias by excluding gender data. Additionally, any data that’s not directly relevant to the end goal but could influence outcomes should be weeded out.

¹ Vulnerability of Female Drivers Involved in Motor Vehicle Crashes, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3222446/

THE ROAD TOWARD ETHICAL AI

Talk to top technology thinkers and leaders and you’ll hear that algorithmic bias is solvable—and it is. But as Baxter and Firth-Butterfield reiterate, it requires action. Black-box insights and actions won’t work for business leaders. And they won’t work for regulators in many industries.
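To make the contrast with a black box concrete, here is a minimal sketch in Python of what a traceable decision can look like. It assumes a hypothetical linear model that scores loan applications; the feature names, weights, intercept and “typical applicant” baseline (`WEIGHTS`, `INTERCEPT`, `BASELINE`) and the helper functions `score` and `explain` are invented for illustration and are not drawn from Salesforce, from the survey or from any real lender. For a model of this kind, each feature’s contribution to a decision is its weight times its distance from the baseline, and those contributions add up to the gap between the applicant’s score and the baseline score.

```python
# A minimal, hypothetical sketch: per-feature "reason codes" for a linear
# loan-scoring model. All feature names, weights and the baseline applicant
# are invented for illustration; they do not come from any real system.

WEIGHTS = {
    "income_thousands": 0.04,  # higher income raises the score
    "debt_to_income": -3.0,    # more debt relative to income lowers it
    "years_employed": 0.15,
    "missed_payments": -0.8,
}
INTERCEPT = -1.0

# Hypothetical reference ("typical") applicant used to explain each decision.
BASELINE = {
    "income_thousands": 60.0,
    "debt_to_income": 0.30,
    "years_employed": 5.0,
    "missed_payments": 0.0,
}


def score(applicant: dict[str, float]) -> float:
    """Linear score: intercept plus the weighted sum of the features."""
    return INTERCEPT + sum(w * applicant[name] for name, w in WEIGHTS.items())


def explain(applicant: dict[str, float]) -> list[tuple[str, float]]:
    """Return each feature's contribution relative to BASELINE.

    Because the model is linear, the contributions sum to
    score(applicant) - score(BASELINE) (up to floating-point rounding),
    so no part of the decision is left unexplained. Results are sorted
    by absolute size, largest first.
    """
    contributions = [
        (name, w * (applicant[name] - BASELINE[name]))
        for name, w in WEIGHTS.items()
    ]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    applicant = {
        "income_thousands": 45.0,
        "debt_to_income": 0.55,
        "years_employed": 1.0,
        "missed_payments": 2.0,
    }
    print(f"score: {score(applicant):+.2f} (baseline {score(BASELINE):+.2f})")
    for name, contribution in explain(applicant):
        print(f"{name:>18}: {contribution:+.2f}")
```

For the sample applicant, the two missed payments account for the largest share of the drop below the baseline, which is the kind of reason a reviewer or regulator could check and challenge. This exact decomposition relies on the model being linear; a deep neural network does not break down this simply, so explaining one calls for other techniques.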
Decisions with real-world importance—a loan decision for a family or a business; a medical decision on surgery for a patient—have to be understandable and traceable. The answer won’t be found in the millions of lines of code in a neural network. “We could open up the hood of an AI and take a look at the code, the model, but it really isn’t going to tell you anything,” Baxter says. “There’s a lot you have to do for it to be understandable. It isn’t a one size fits all. You need to have different solutions for different types of AI.”

Enter explainable AI, a topic that inspires different responses and some confusion. One camp of scientists maintains that humans can never understand these algorithms because they’re far too complex. A solution on that side of the argument calls for the creation of guardian algorithms to look after standard algorithms. The other camp says that this black-box stance is wrong and that humans need to be in the loop. “It is possible to create transparency,” Baxter says. “That camp seems to be gaining ascendancy at the moment—and that’s probably just because we’ve encountered the problems. Now more people have actually started to think about how we can do this.”

Explainability means a leader or team can see what went right (or, more important, what went wrong) and take ownership of it. A brand can own the results and reassure customers, and can even attract them through its positions on ethical issues. Many organizations are hiring for new positions created around the imperative to understand and mold algorithms.

Look at the new jobs coming online for proof of the urgency around transparency: from explainability analysts who determine the impact of outcomes, to AI systems trainers who monitor the inputs, to controllers who mold bots and virtual agents (Figure 2). This is the future. And it only shows how important the human hand is—and will continue to be—as AI advances. Ultimately, it will be the human involvement in these technologies that ensures trust in these systems.

Figure 2: What new roles do you see being formed now or in the near future as a result of AI technologies? (Select all that apply)
• Explainability analysts to determine the impact of outputs: 55%
• Trainers to teach AI systems how to perform: 54%
• Algorithm forensics analysts: 44%
• Bot controllers: 36%

THE FUTURE IS ETHICAL

The full value to be unlocked by AI can emerge only when business leaders and all stakeholders—consumers and society at large—place their faith in the results produced by machines. But that will happen only when they see action from the top: at the board level in organizations and from the governments that represent them. And, for the time being, it may depend on active human management of these deep, mysterious networks.

As you continue your AI journey, be sure to consider the following:

• LEADERSHIP DEFINES ETHICS. The touchstone of a work culture created around ethical AI is leadership, the same quality that is essential in the adoption and development of innovative technology in the first place. Diversity and a rigorous approach to data across an organization are de rigueur, but a truly ethical culture is one where values and approaches permeate an organization. This in turn will attract talented people with the right mindset. You end up with a virtuous circle. How do you structure a team to develop great, ethical AI? “It’s about culture,” Baxter says. “We have an agile-meets-AI approach, in which we make sure that teams are thinking through the potential implications of AI and the potential unintended use cases.
Then we go through the results and develop a maturity model that allows us to evaluate the results and see how we’re getting better over time. We want to keep ourselves honest and to hold ourselves accountable; we have to find ways to be able to say we are doing better today than yesterday. Having that maturity model is one way of being able to measure improvement or measure change over time.”

• DIVERSITY IS IMPERATIVE. “The most important action is diversity. We need to be training more people from minorities and more women to create algorithms because the actual training of the algorithms carries the biases of the people who create them,” says Firth-Butterfield, adding that it’s important for “the teams who create algorithms to look like our society and thus reflect it. This is also true internationally, which means that countries and regions need to be training their own AI developers.” Baxter agrees: “Diversity is huge, and it’s not just diversity of gender and race or age. It’s also about experience and background. When I’m in meetings and thinking about products and features and impact, I feel a responsibility to make sure that I’m always representing the people who are not in the room. We have to be able to think about everybody, and you can only do that if there’s representation in the room.”

• MODELS CAN ALWAYS BE IMPROVED. Ethics is reflected in how an AI is built, including the parameters that define the problem to be solved. The models that are the foundation of machine learning systems have to be free of implicit or explicit bias—and that’s not easy to do. Baxter talks about the potential presence of proxy variables: data that might not be directly relevant to the goal but influences outcomes. In the U.S., she notes, zip code and income can serve as proxies for race, for example.

• ETHICS CAN BE TAUGHT.
The World Economic Forum is creating a repository of curricula from professors who are teaching their AI students about the social implications of AI, including bias, at the undergraduate and graduate levels. “We’re inviting professors to upload their curricula into the forum repository so that we can then offer it to other professors around the world who want to set up curricula like this,” Firth-Butterfield says. But ethics can also be taught outside the classroom. Consider hosting workshops or forums at your organization where ethics becomes a focal point.

“I want to make sure that we think about AI for good,” Baxter says. “But we also have to make sure that we don’t end up with tech worship, a belief that because it’s technology, it’s neutral and beneficent and that we can take our hands off the wheels and everything will go well. There’s tremendous power to create a much more fair and equitable world. But it takes effort, and a lot of thought, to be able to make sure that happens.”

To learn more about how companies are leading with AI today, visit Forbes AI.

METHODOLOGY

The findings in this report are based on a Forbes Insights survey of 510 executives who lead organizations in the United States. Nearly half (48%) are C-suite executives, representing CEOs (15%), CIOs (10%), CTOs (9%), CISOs (3%) and other C-suite positions (11%). All executives are involved in the development and implementation of their organization’s AI strategy.
The executives work in a variety of sectors, including energy, financial services, retail, healthcare, communications, manufacturing, transportation, automotive and distribution. All represent organizations with $500 million or more in annual revenue, with 74% coming from organizations with more than $1 billion in annual revenue.

ACKNOWLEDGMENTS

Forbes Insights and Intel would like to thank the following individuals for their time and expertise:
• Kathy Baxter, Architect, Ethical AI Practice, Salesforce
• Kay Firth-Butterfield, Head of Artificial Intelligence and Machine Learning, World Economic Forum

ABOUT FORBES INSIGHTS

Forbes Insights is the strategic research and thought leadership practice of Forbes Media, a global media, branding and technology company whose combined platforms reach nearly 94 million business decision makers worldwide on a monthly basis. By leveraging proprietary databases of senior-level executives in the Forbes community, Forbes Insights conducts research on a wide range of topics to position brands as thought leaders and drive stakeholder engagement. Research findings are delivered through a variety of digital, print and live executions, and amplified across Forbes’ social and media platforms.

EDITORIAL & RESEARCH
Erika Maguire, Executive Editorial Director
Kasia Wandycz Moreno, Editorial Director
Hugo S. Moreno, Editorial Director
Ross Gagnon, Research Director
Scott McGrath, Research Analyst
Nick Lansing, Report Author
Zehava Pasternak, Designer

PROJECT MANAGEMENT
Tori Kreher, Director, Account Management
Casey Zonfrilli, Project Manager
Brian Lee, Project Manager
Todd Della Rocca, Project Manager

SALES
North America: Brian McLeod, Vice President; Matthew Muszala, Executive Director; William Thompson, Director; Kimberly Kurata, Manager
Europe: Charles Yardley, SVP Managing Director
Asia: Will Adamopoulos, President & Publisher, Forbes Asia

499 Washington Blvd., Jersey City, NJ 07310 | 212.367.2662 | https://forbes.com/forbes-insights/